All documentation links

ProActive Workflows & Scheduling (PWS)

-

PWS User Guide

(Workflows, Workload automation, Jobs, Tasks, Catalog, Resource Management, Big Data/ETL, …) PWS Modules

Job Planner

(Time-based Scheduling)Event Orchestration

(Event-based Scheduling)Service Automation

(PaaS On-Demand, Service deployment and management)

PWS Admin Guide

(Installation, Infrastructure & Nodes setup, Agents,…)

ProActive AI Orchestration (PAIO)

PAIO User Guide

(a complete Data Science and Machine Learning platform, with Studio & MLOps)

1. Overview

ProActive Scheduler is a comprehensive Open Source job scheduler and Orchestrator, also featuring Workflows and Resource Management. The user specifies the computation in terms of a series of computation steps along with their execution and data dependencies. The Scheduler executes this computation on a cluster of computation resources, each step on the best-fit resource and in parallel wherever its possible.

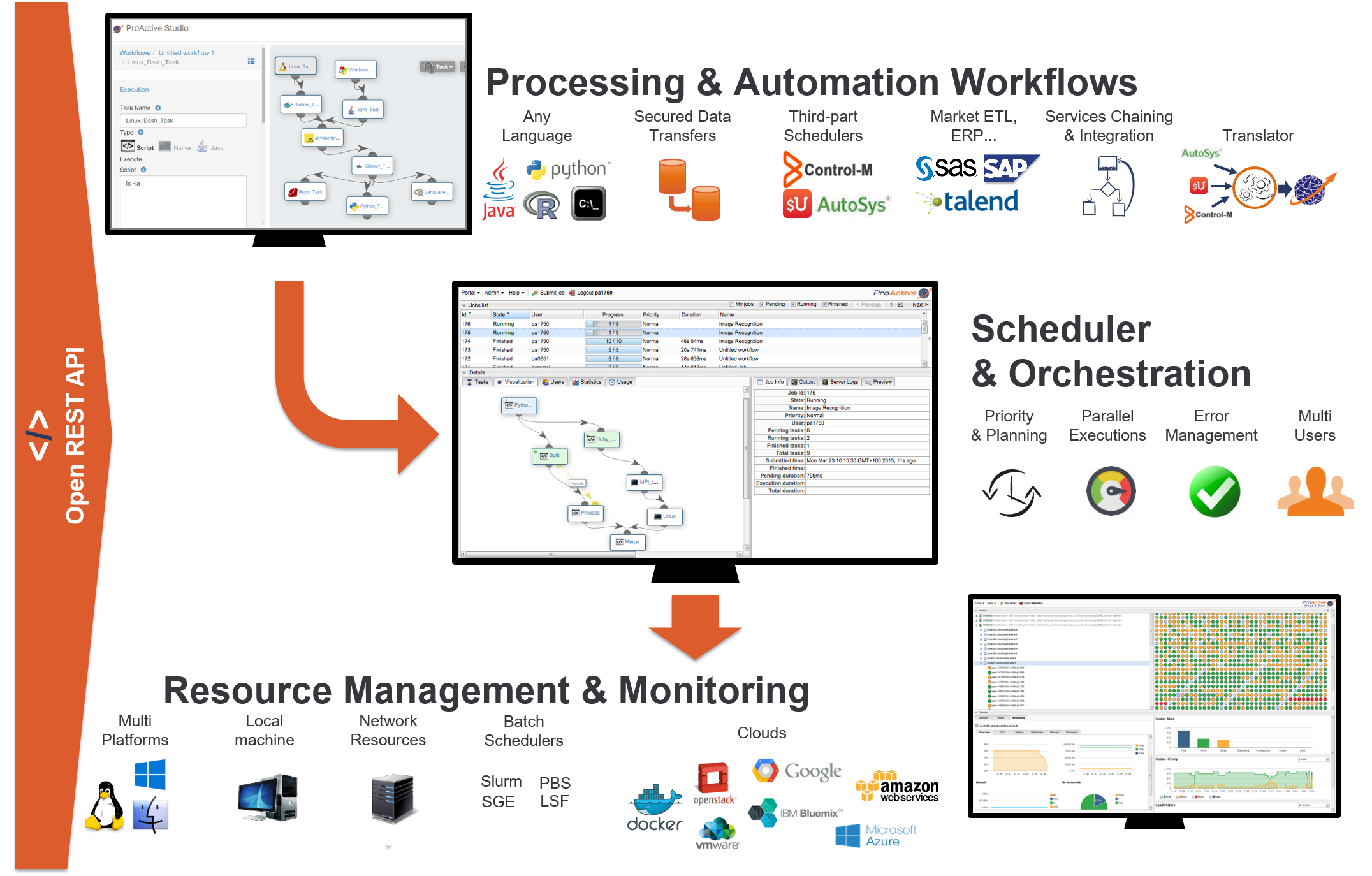

On the top left there is the Studio interface which allows you to build Workflows. It can be interactively configured to address specific domains, for instance Finance, Big Data, IoT, Artificial Intelligence (AI). See for instance the Documentation of ProActive AI Orchestration here, and try it online here. In the middle there is the Scheduler which enables an enterprise to orchestrate and automate Multi-users, Multi-application Jobs. Finally, at the bottom right is the Resource manager interface which manages and automates resource provisioning on any Public Cloud, on any virtualization software, on any container system, and on any Physical Machine of any OS. All the components you see come with fully Open and modern REST APIs.

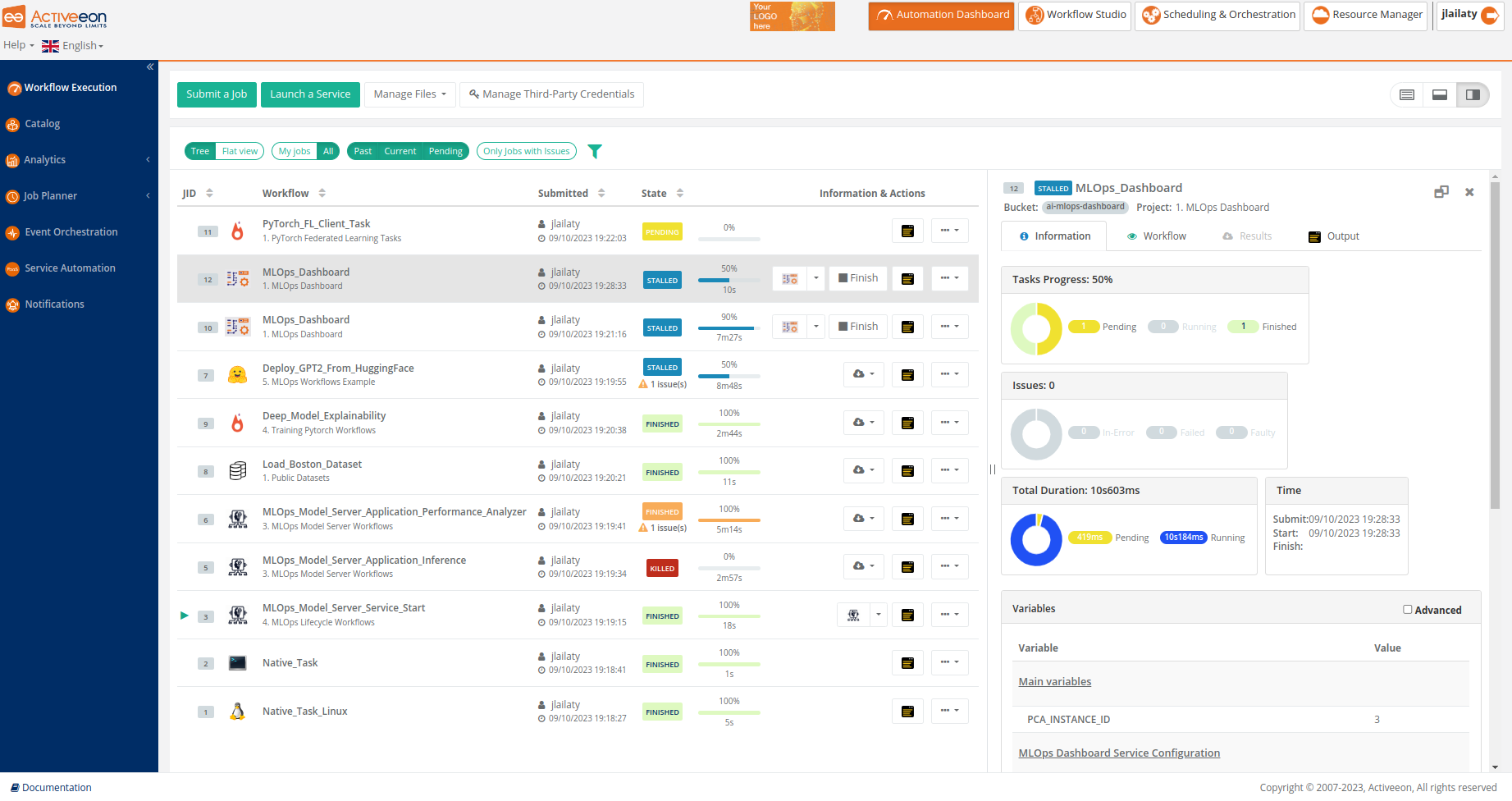

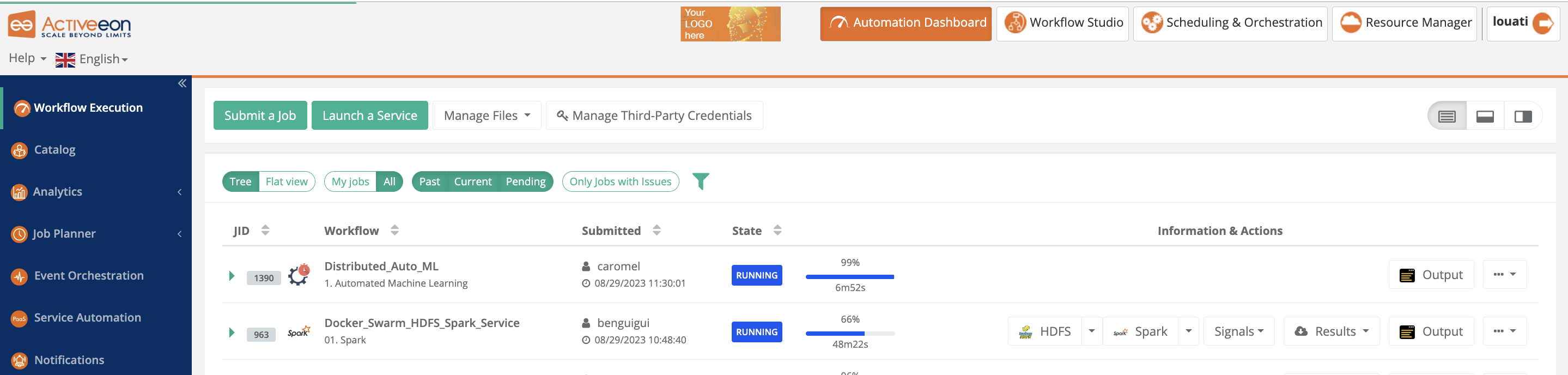

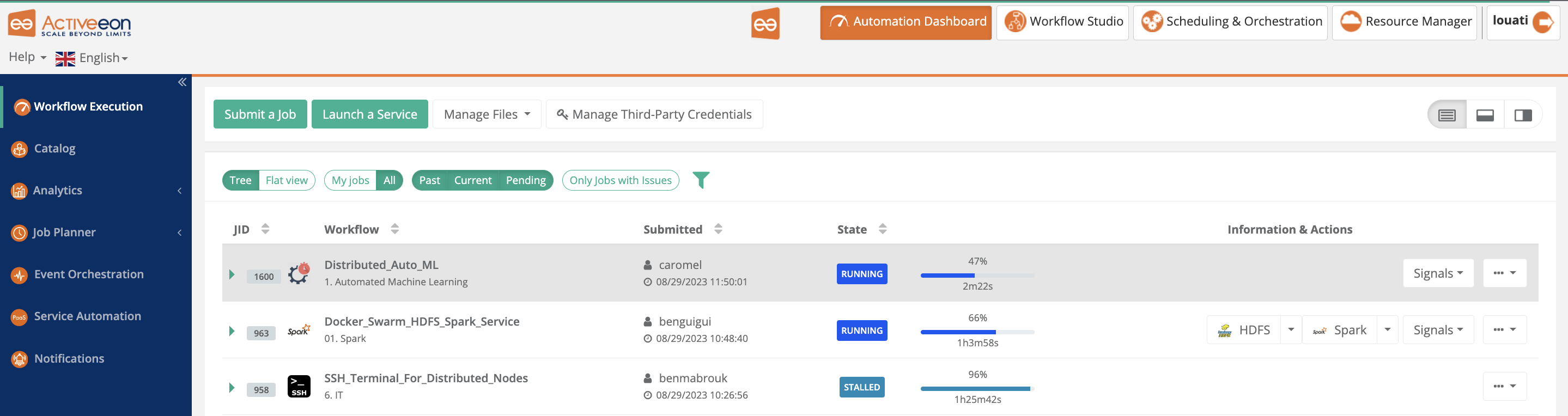

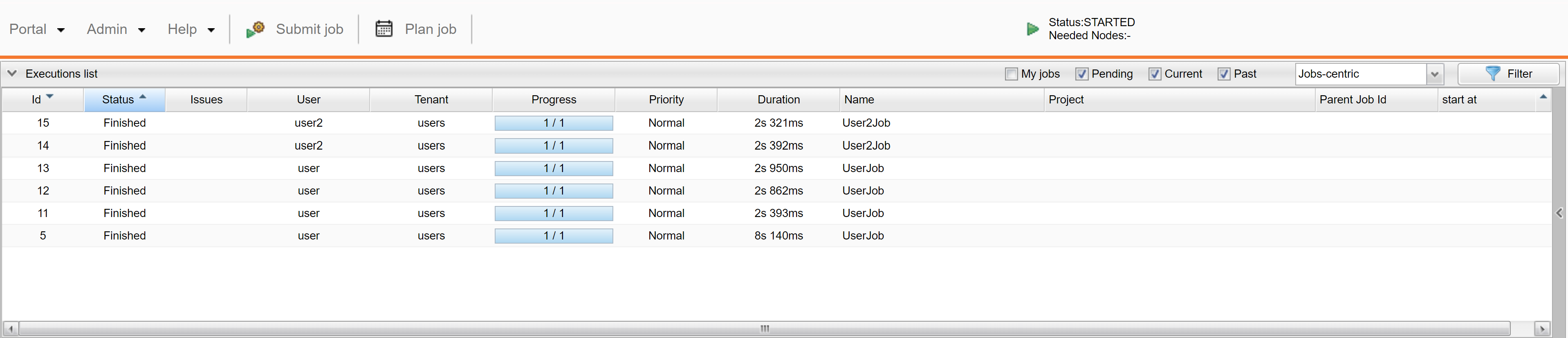

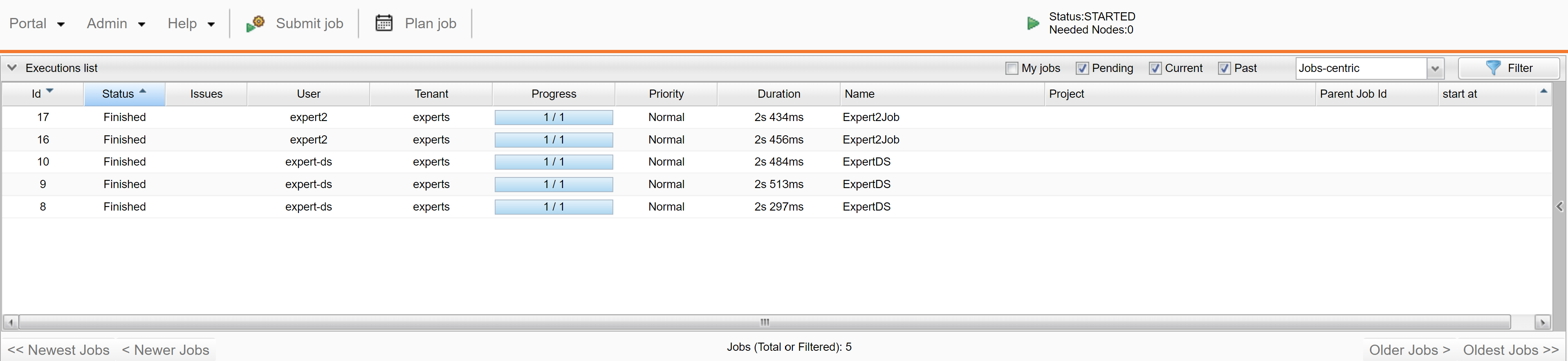

The screenshot above shows the Workflow Execution Portal, which is the main portal of ProActive Workflows & Scheduling and the entry point for end-users to submit workflows manually, monitor their executions and access job outputs, results, services endpoints, etc.

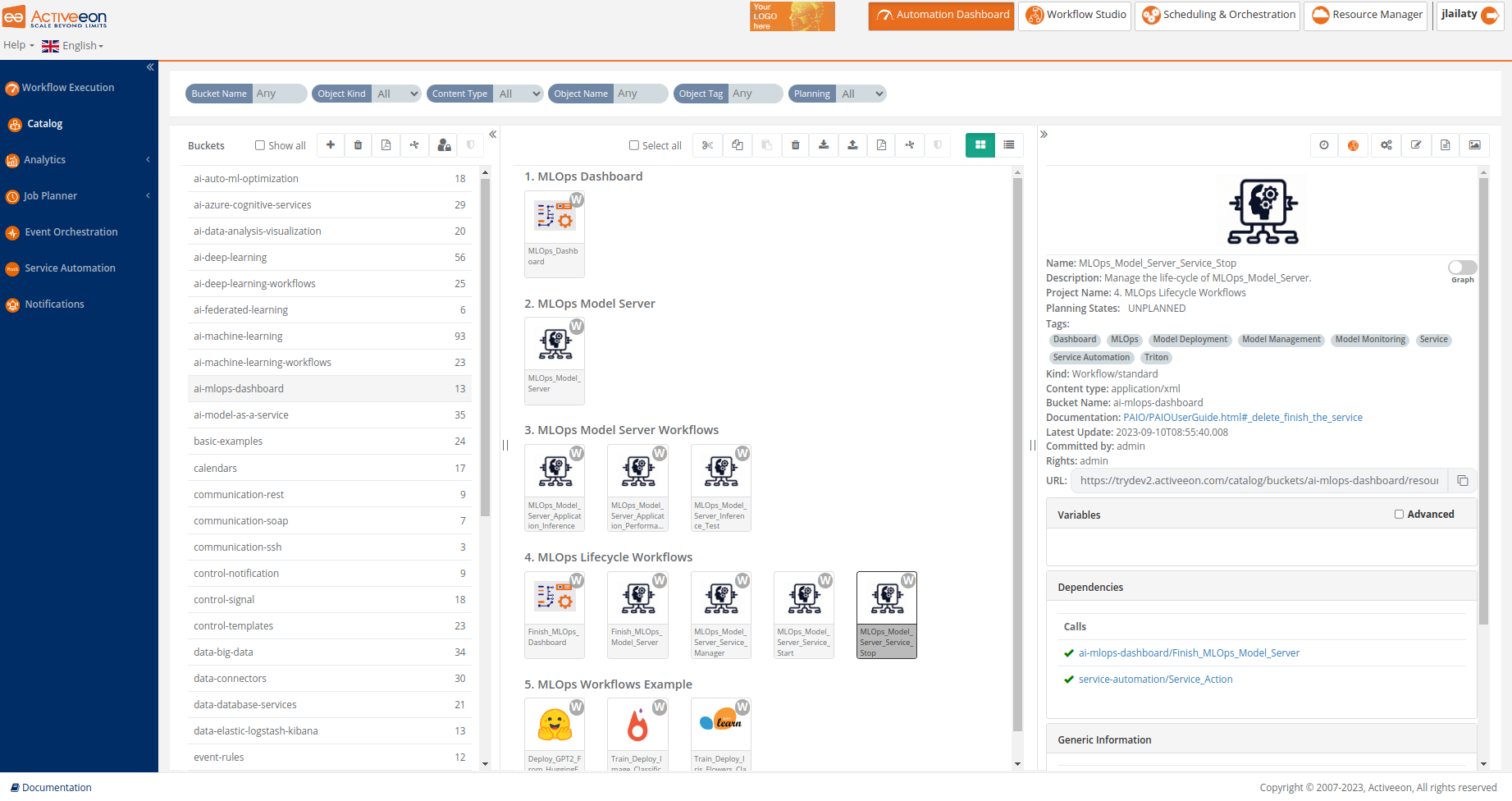

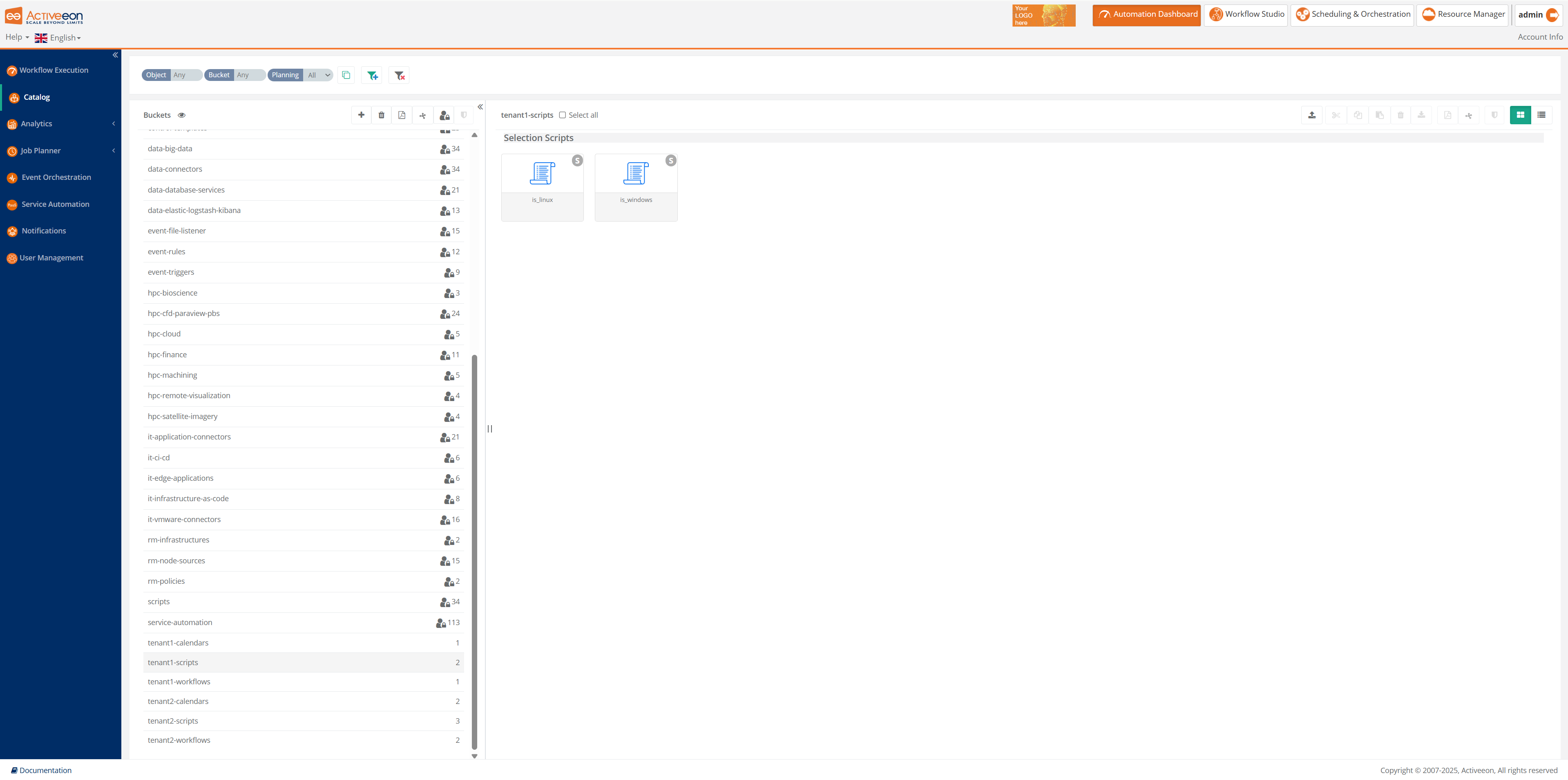

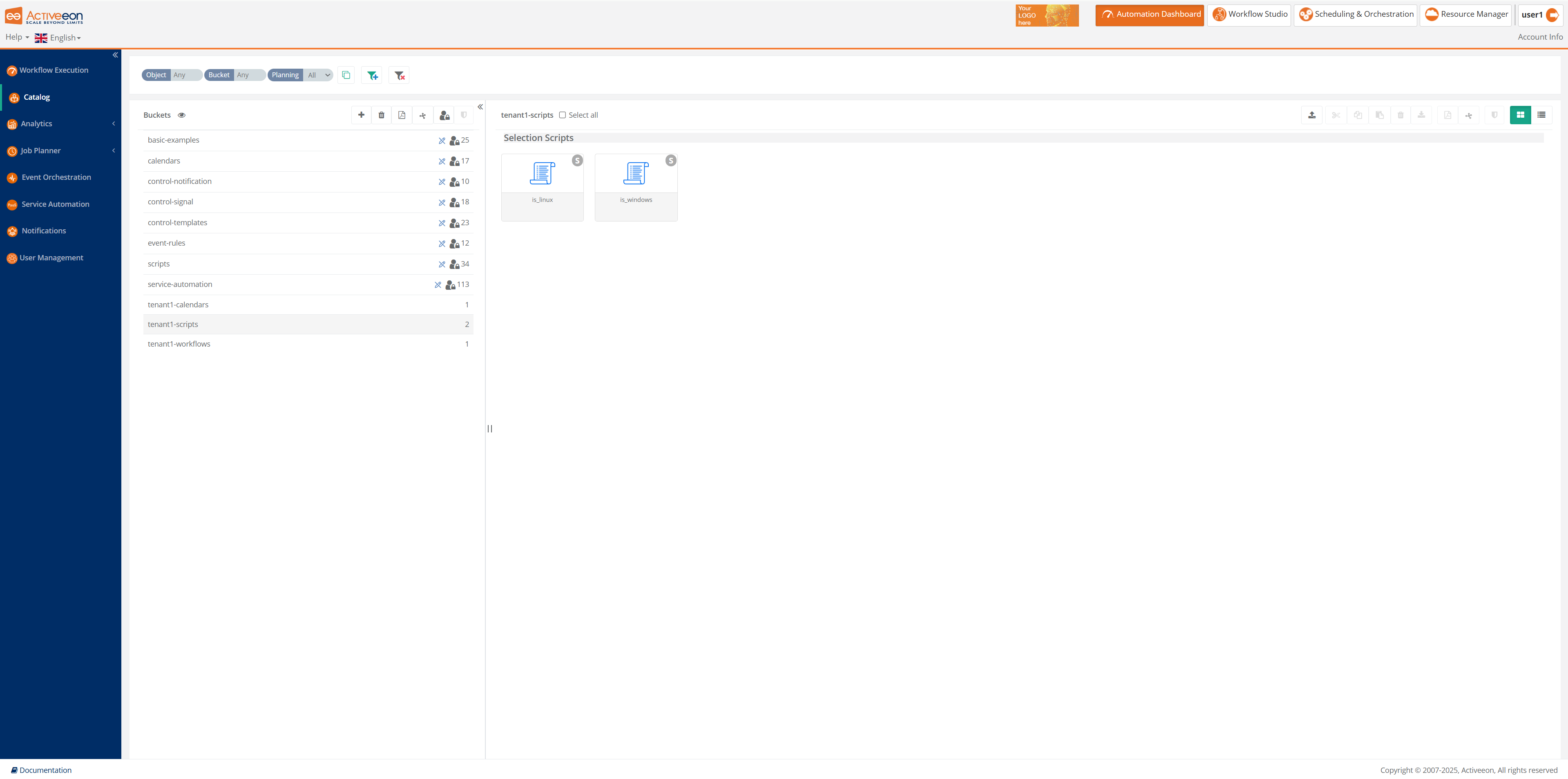

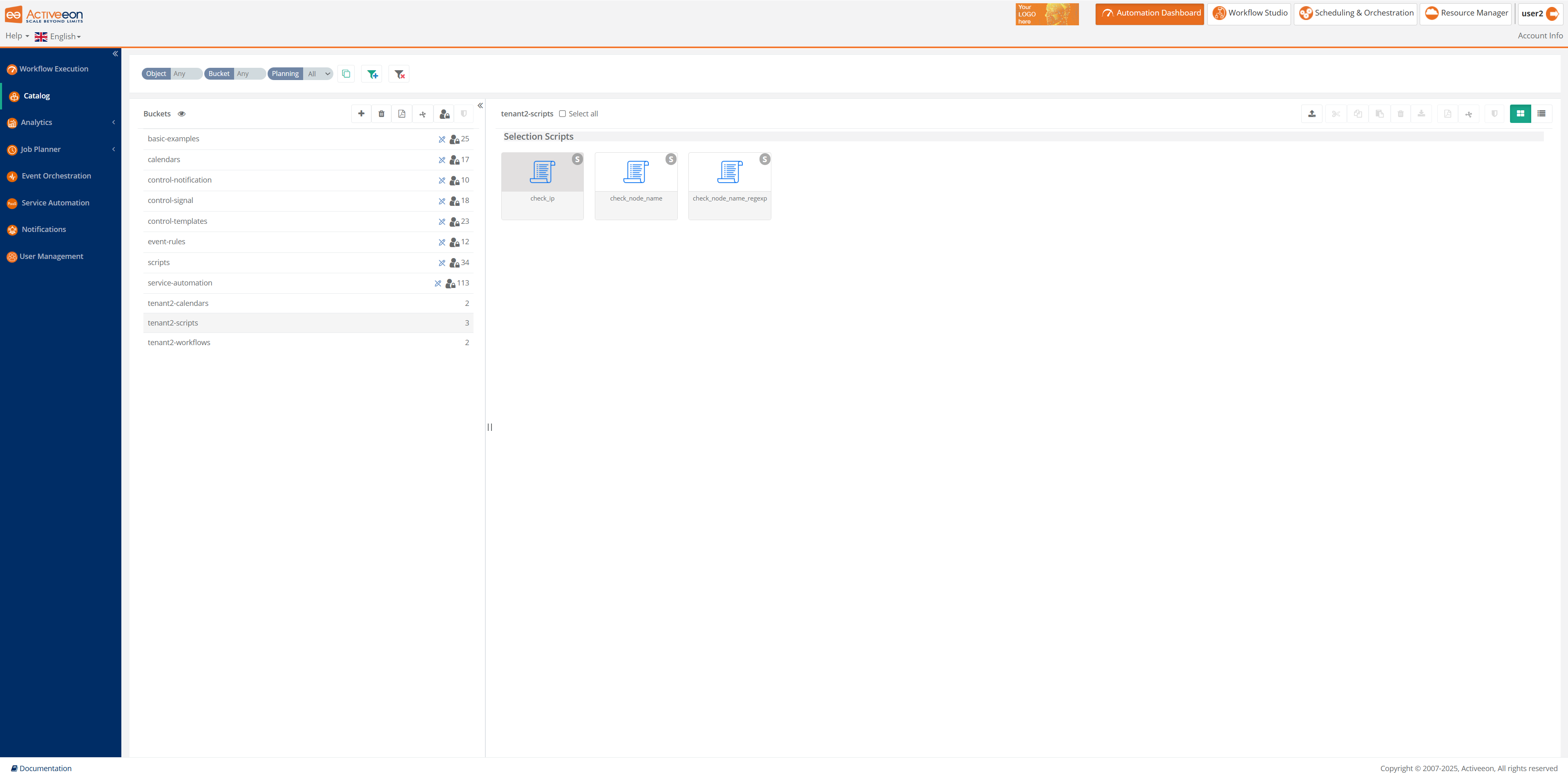

The screenshot above shows the Catalog Portal, where one can store Workflows, Calendars, Scripts, etc. Powerful versioning together with full access control (RBAC) is supported, and users can share easily Workflows and templates between teams, and various environments (Dev, Test, Staging and Prod).

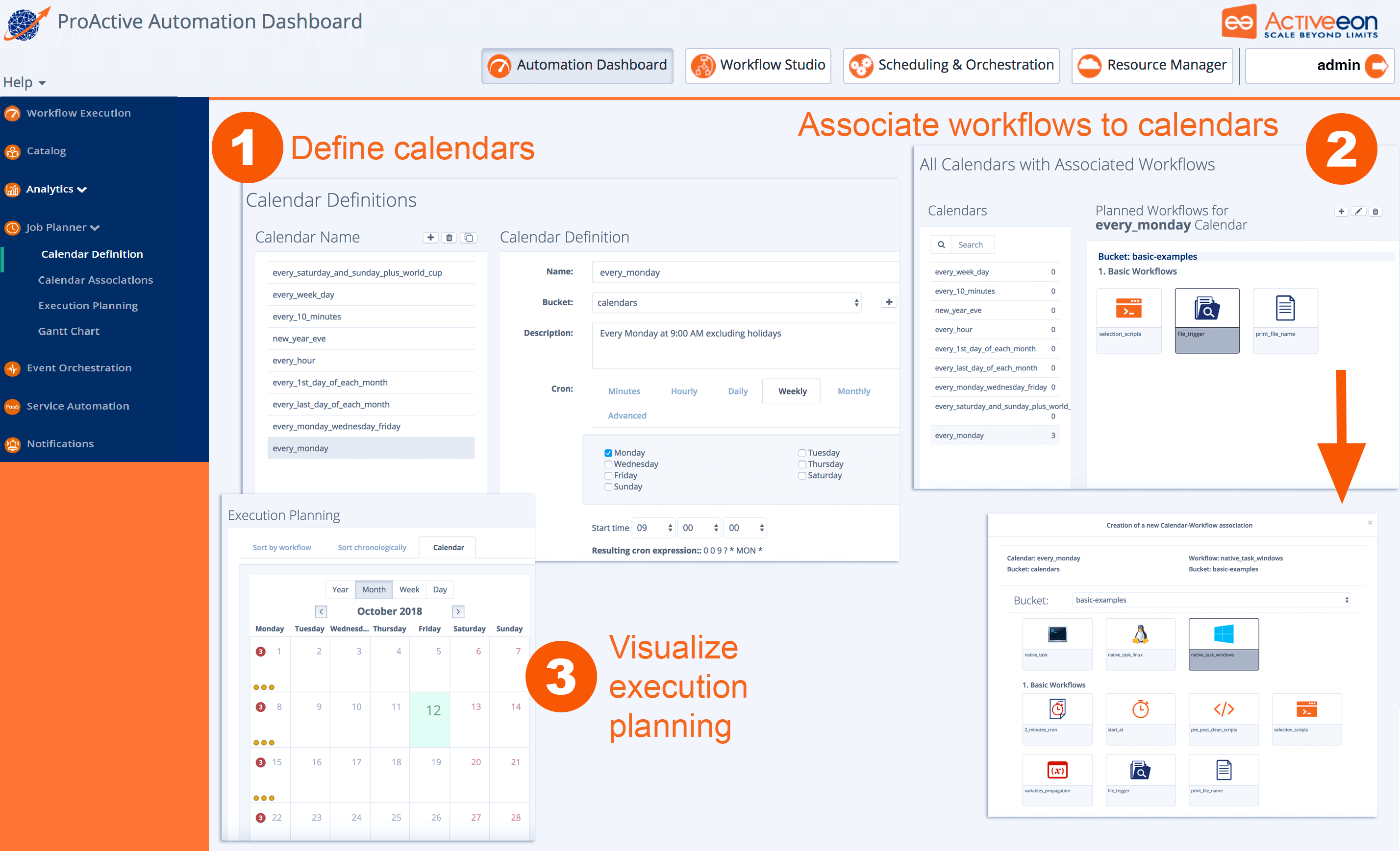

The screenshot above shows the Job Planner Portal, allowing to automate and schedule recurring Jobs. From left to right, you can define and use Calendar Definitions , associate Workflows to calendars, visualize the execution planning for the future, as well as actual executions of the past.

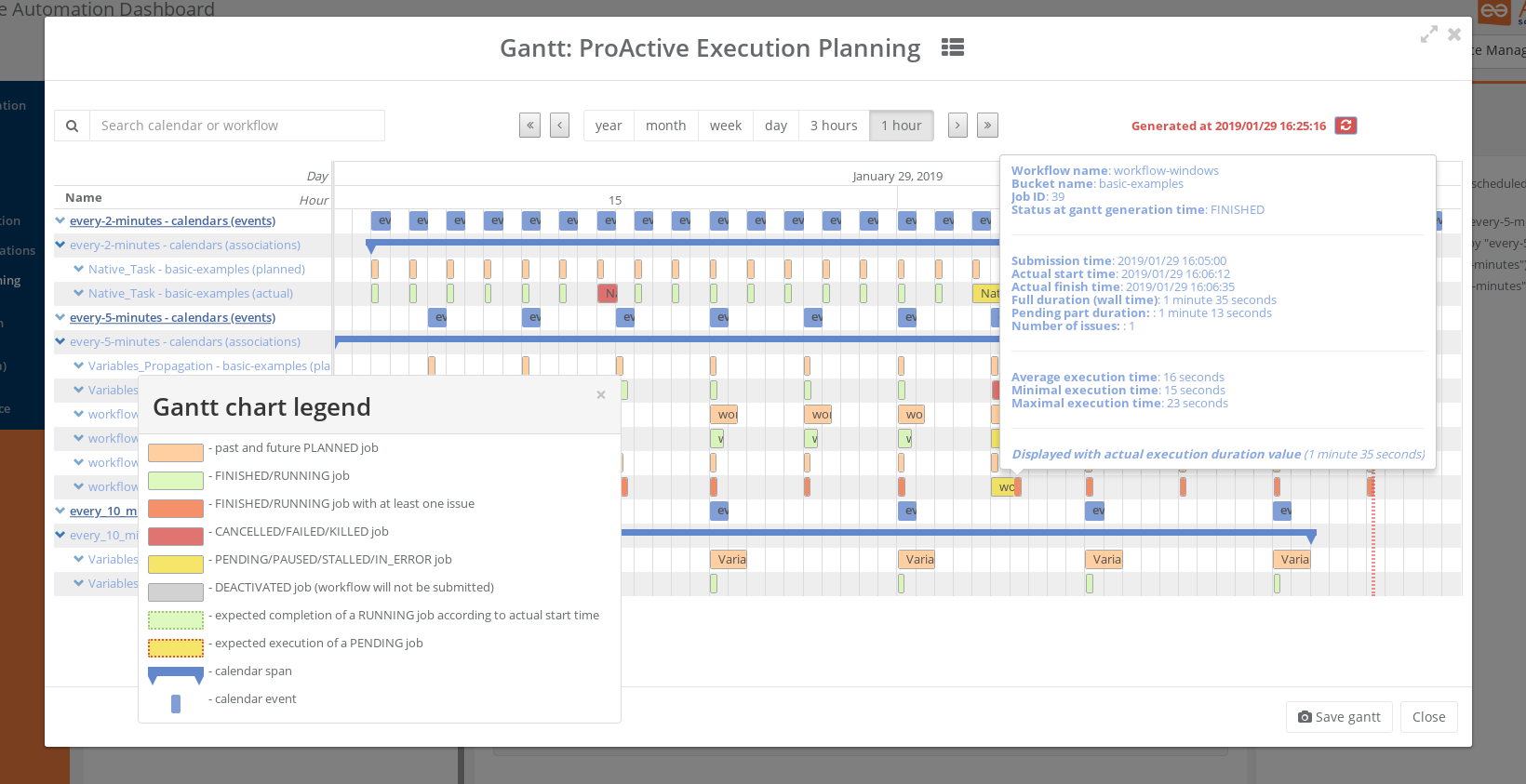

The Gantt screenshot above shows the Gantt View of Job Planner, featuring past job history, current Job being executed, and future Jobs that will be submitted, all in a comprehensive interactive view. You easily see the potential differences between Planned Submission Time and Actual Start Time of the Jobs, get estimations of the Finished Time, visualize the Job that stayed PENDING for some time (in Yellow) and the Jobs that had issues and got KILLED, CANCELLED, or FAILED (in red).

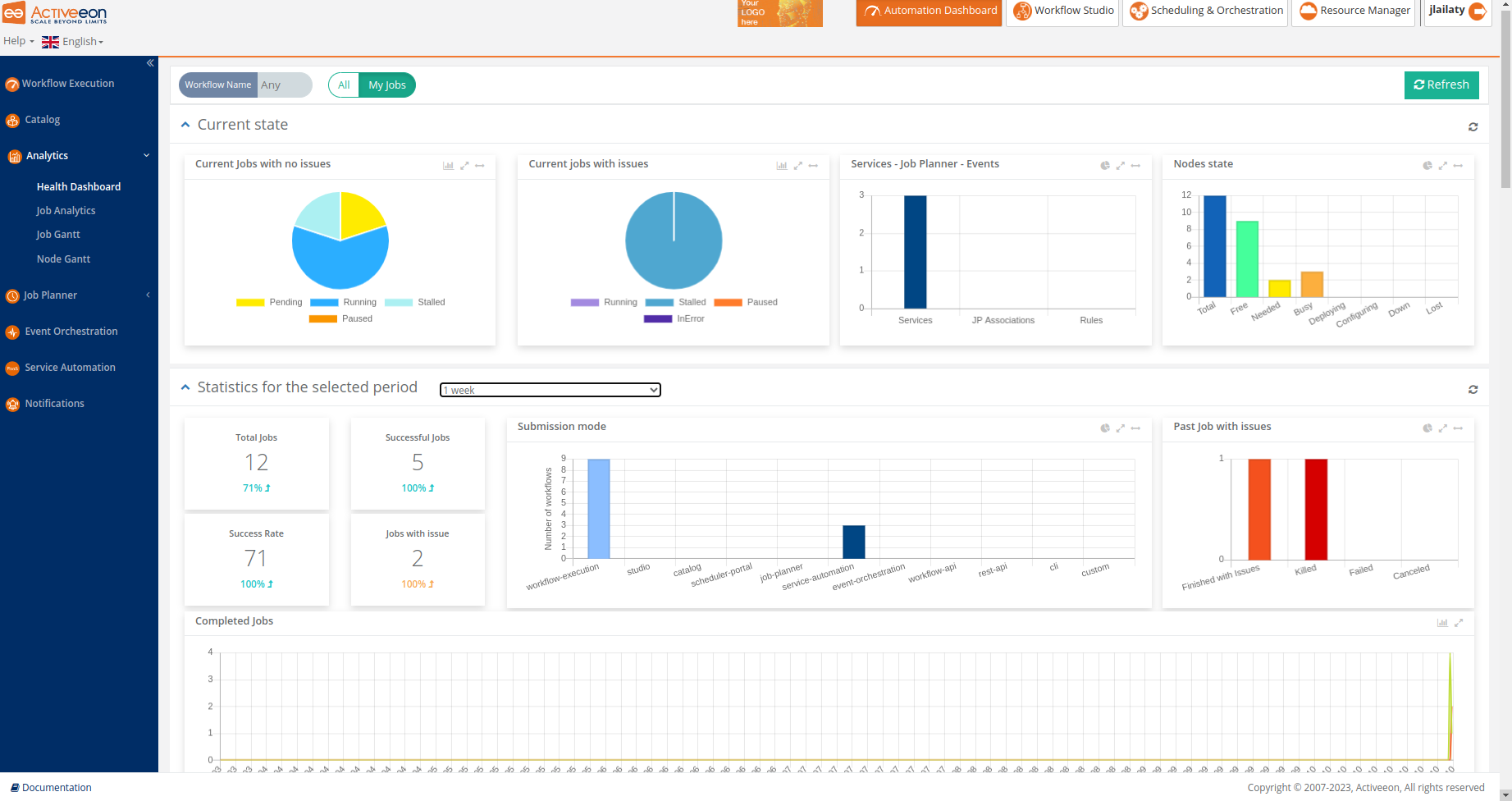

The screenshots above show the Health Dashboard Portal, which is a part of the Analytics service, that displays global statistics and current state of the ProActive server. Using this portal, users can monitor the status of critical resources, detect issues, and take proactive measures to ensure the efficient operation of workflows and services.

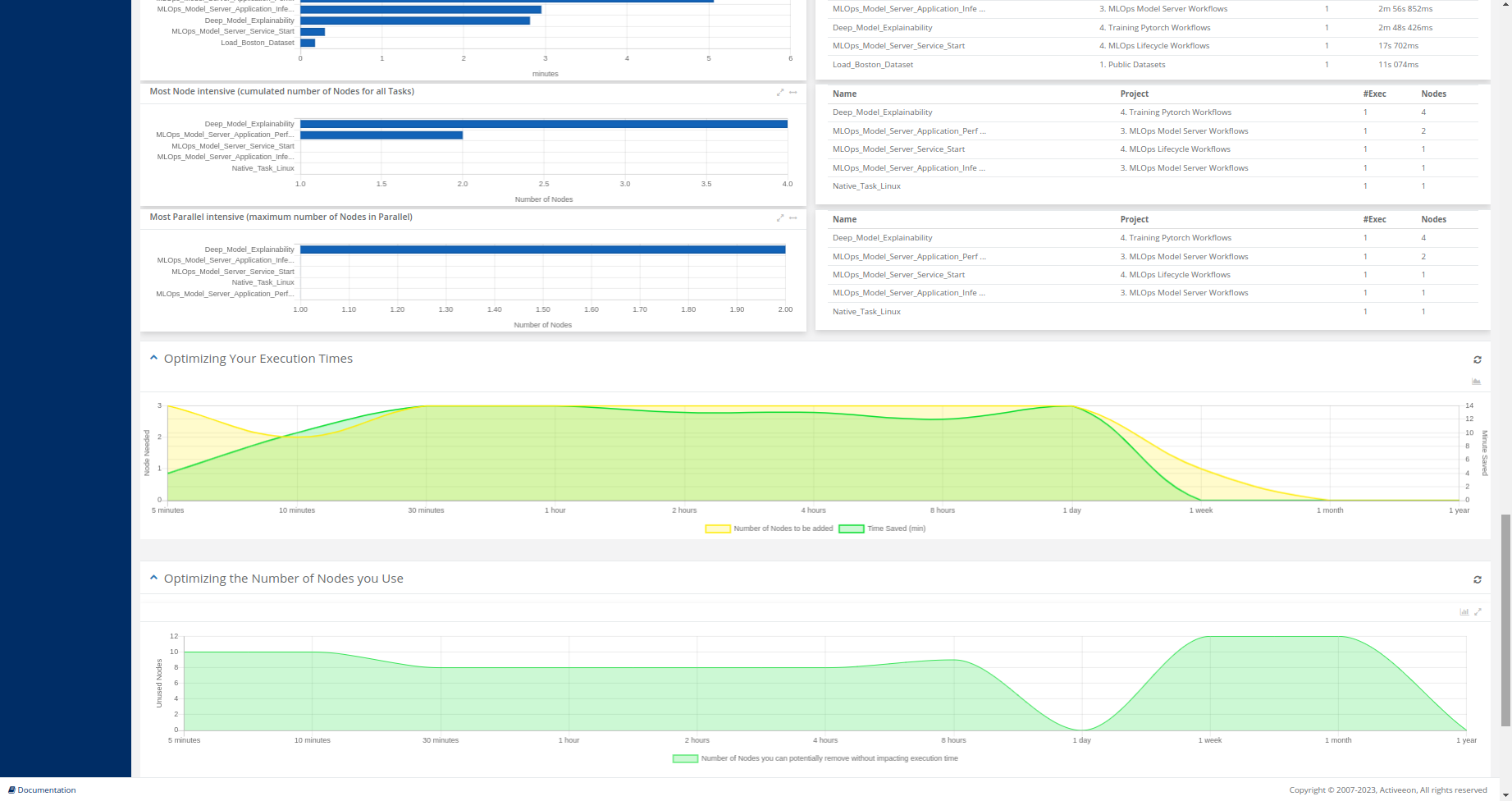

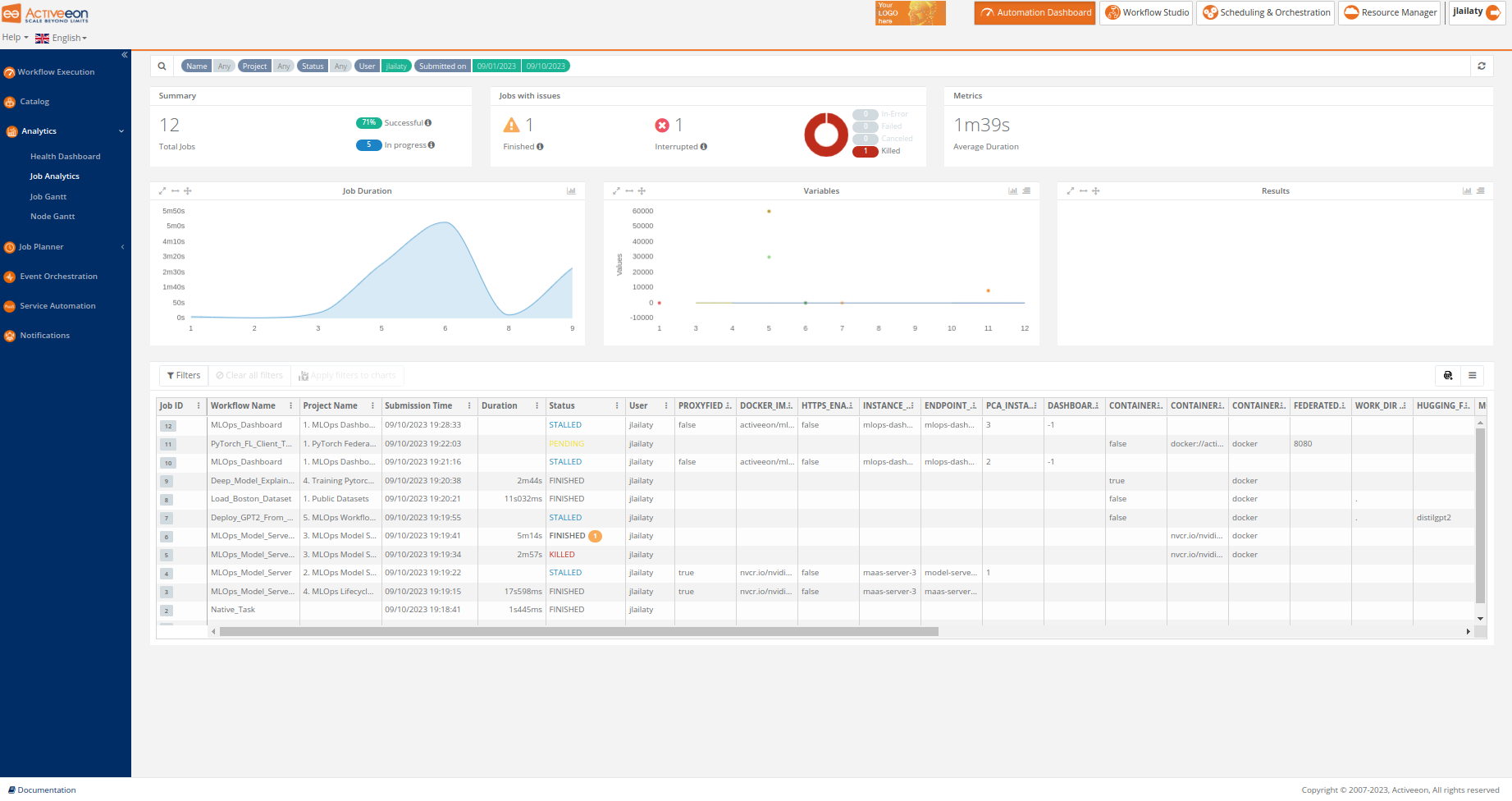

The screenshot above shows the Job Analytics Portal, which is a ProActive component, part of the Analytics service, that provides an overview of executed workflows along with their input variables and results.

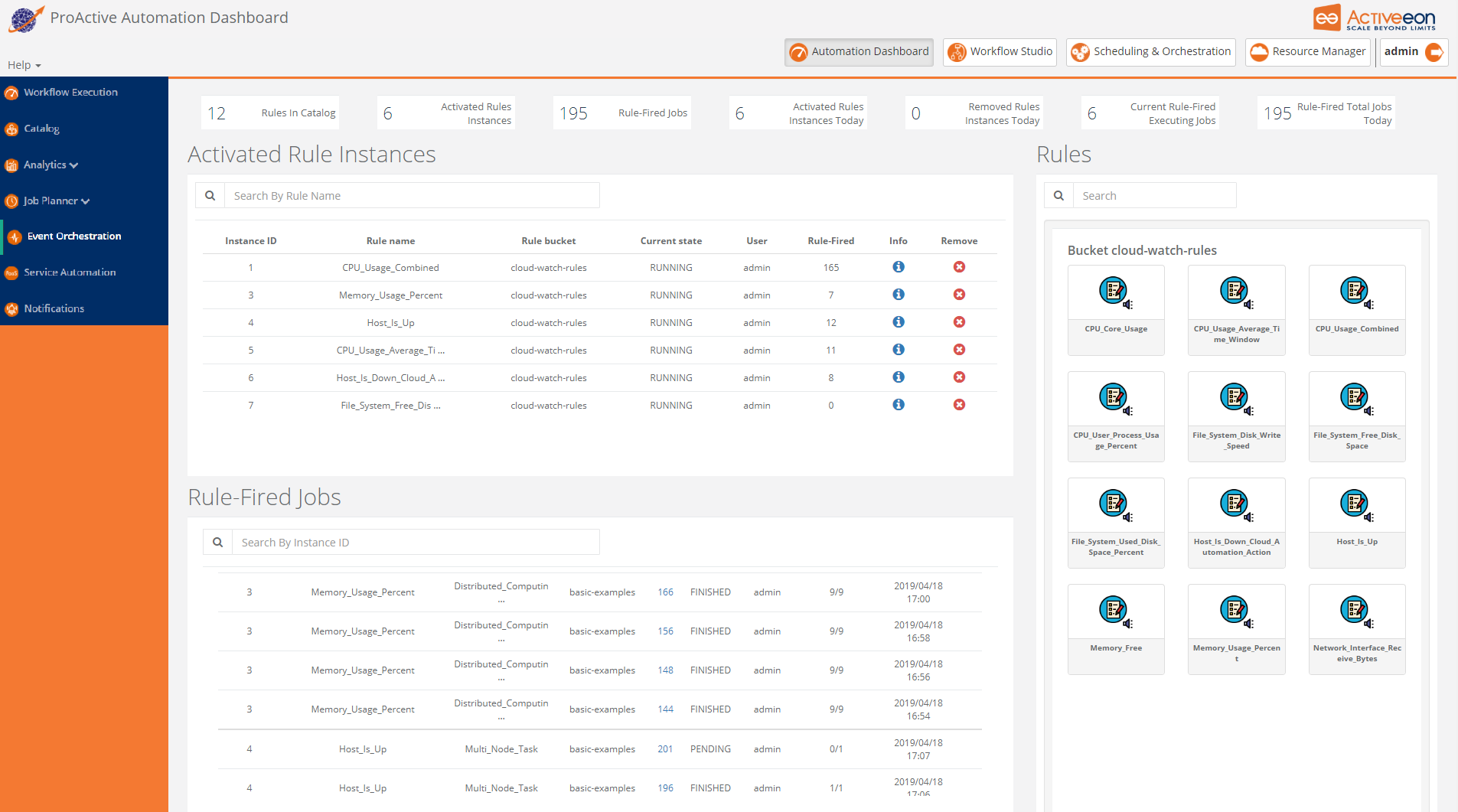

The screenshot above shows the Event Orchestration Portal, where a user can manage and benefit from Event Orchestration - smart monitoring system. This ProActive component detects complex events and then triggers user-specified actions according to predefined set of rules.

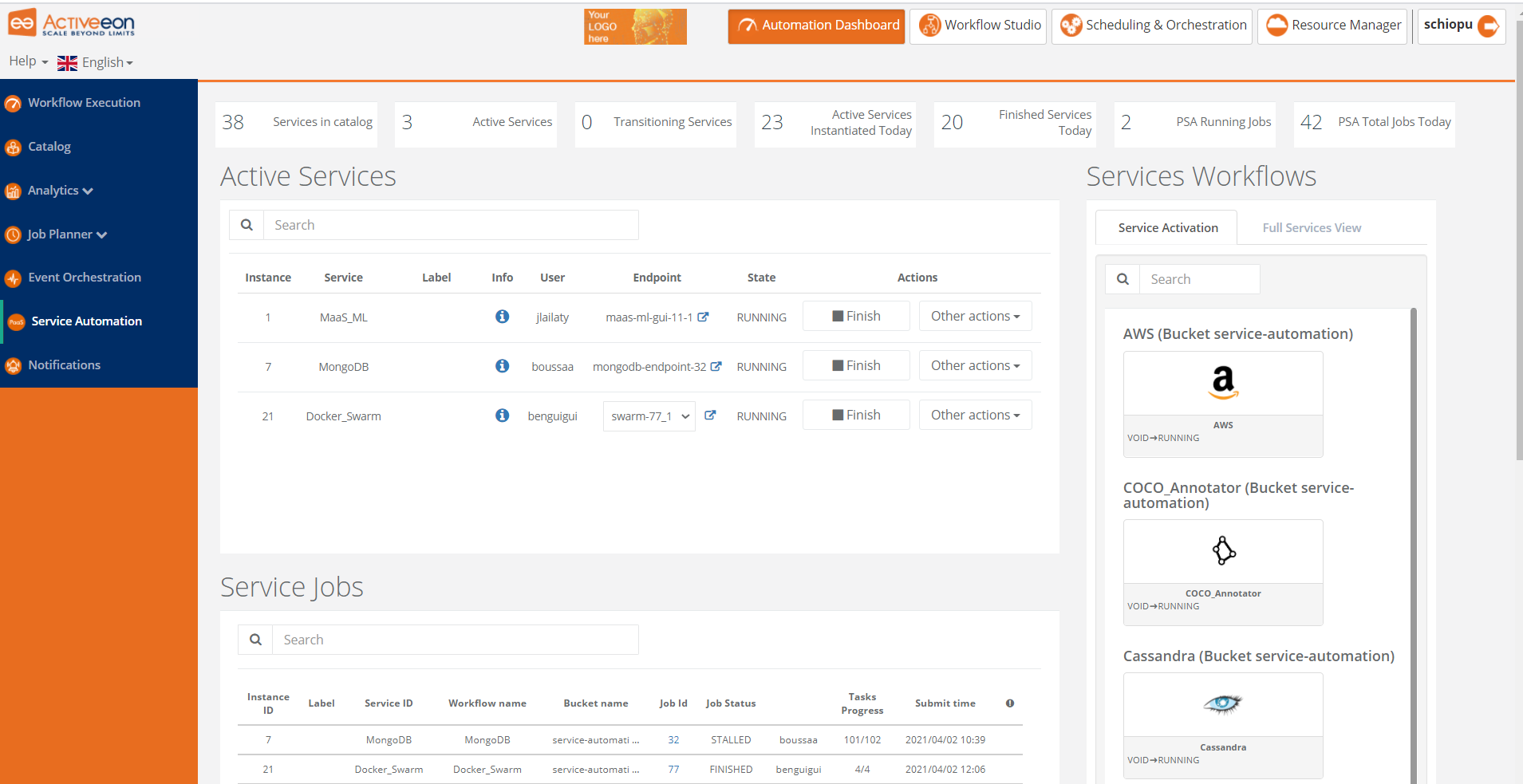

The screenshot above shows the Service Automation Portal which is actually a PaaS automation tool. It allows you to easily manage any On-Demand Services with full Life-Cycle Management (create, deploy, suspend, resume and terminate).

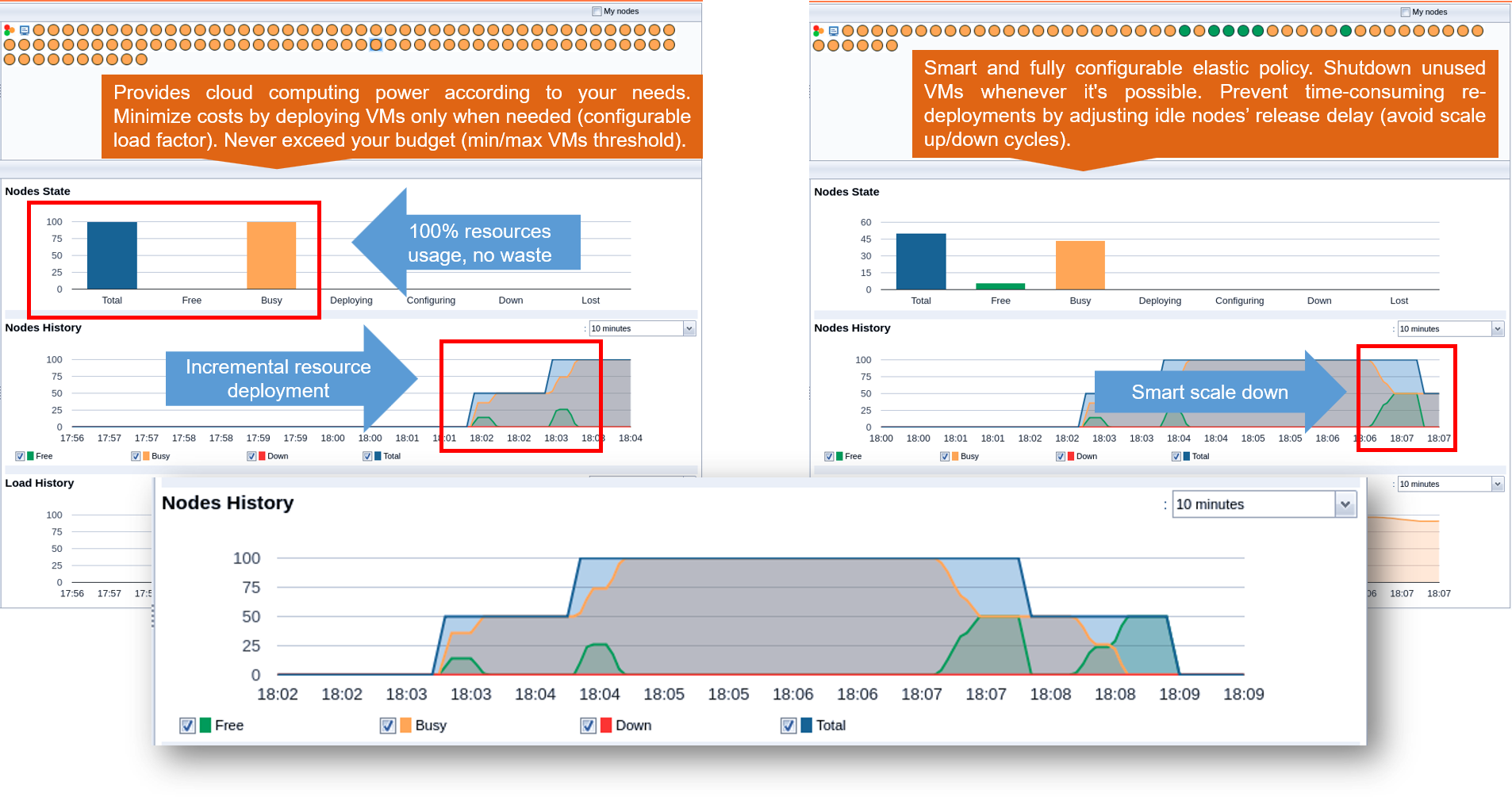

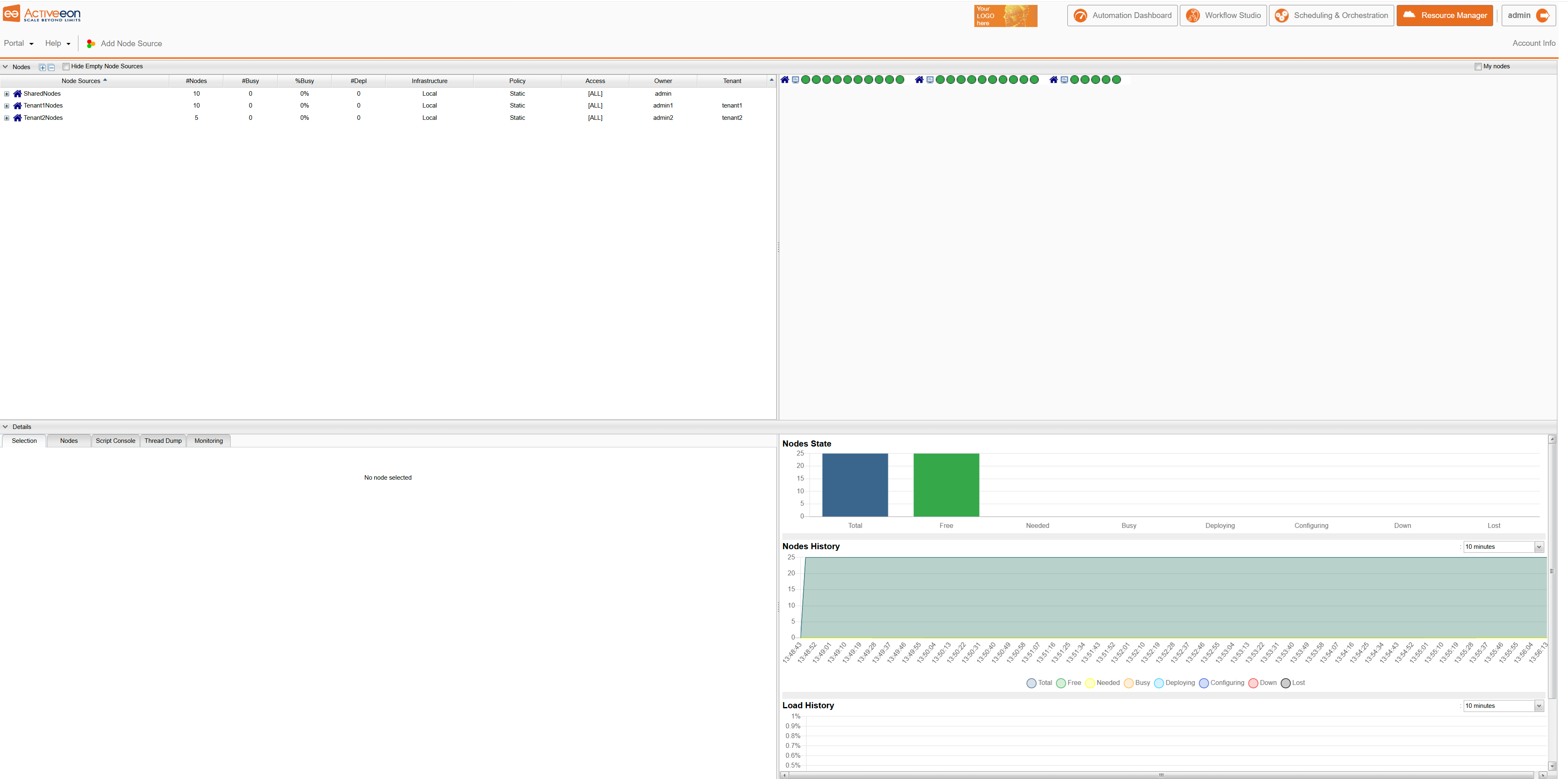

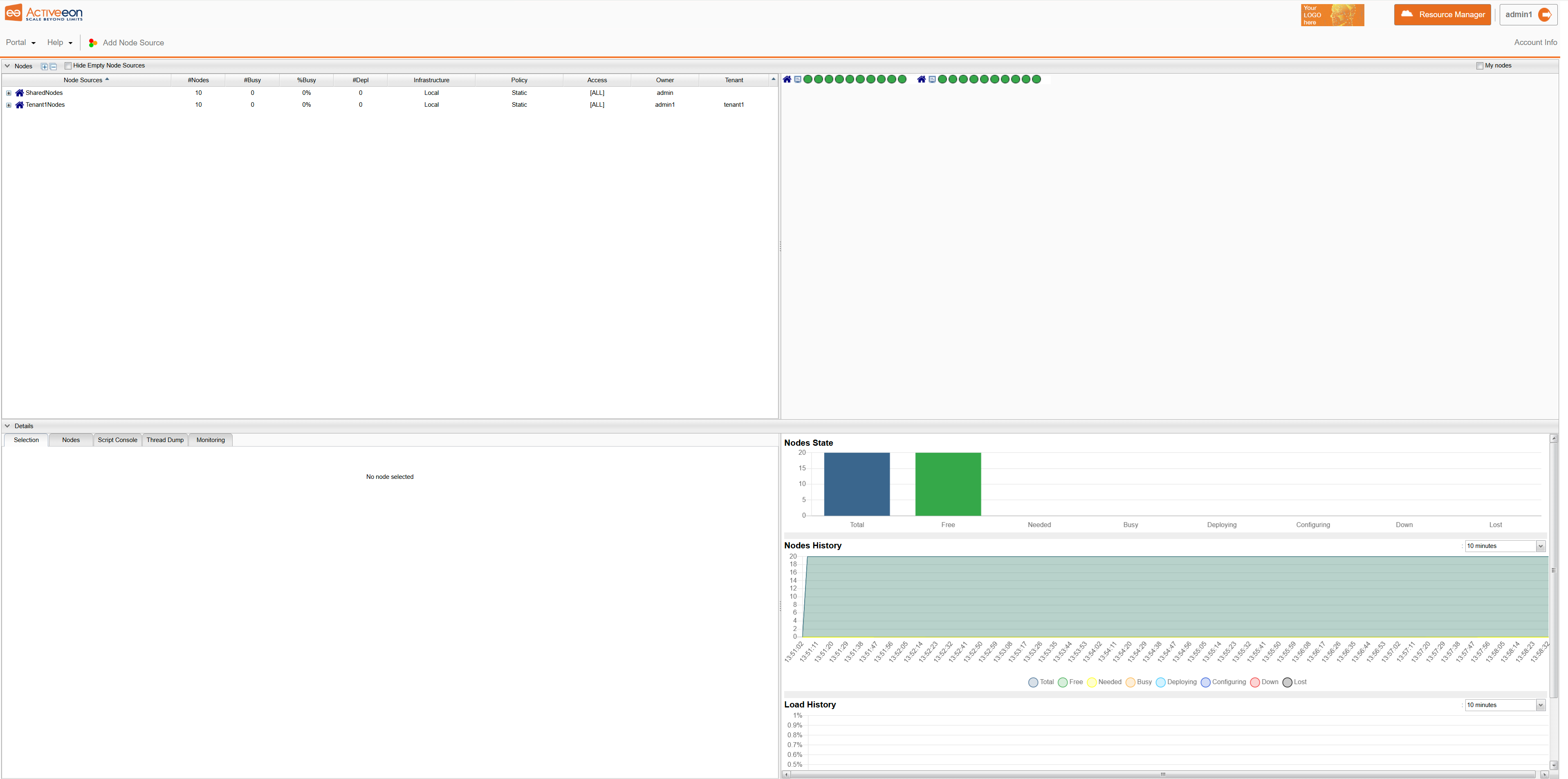

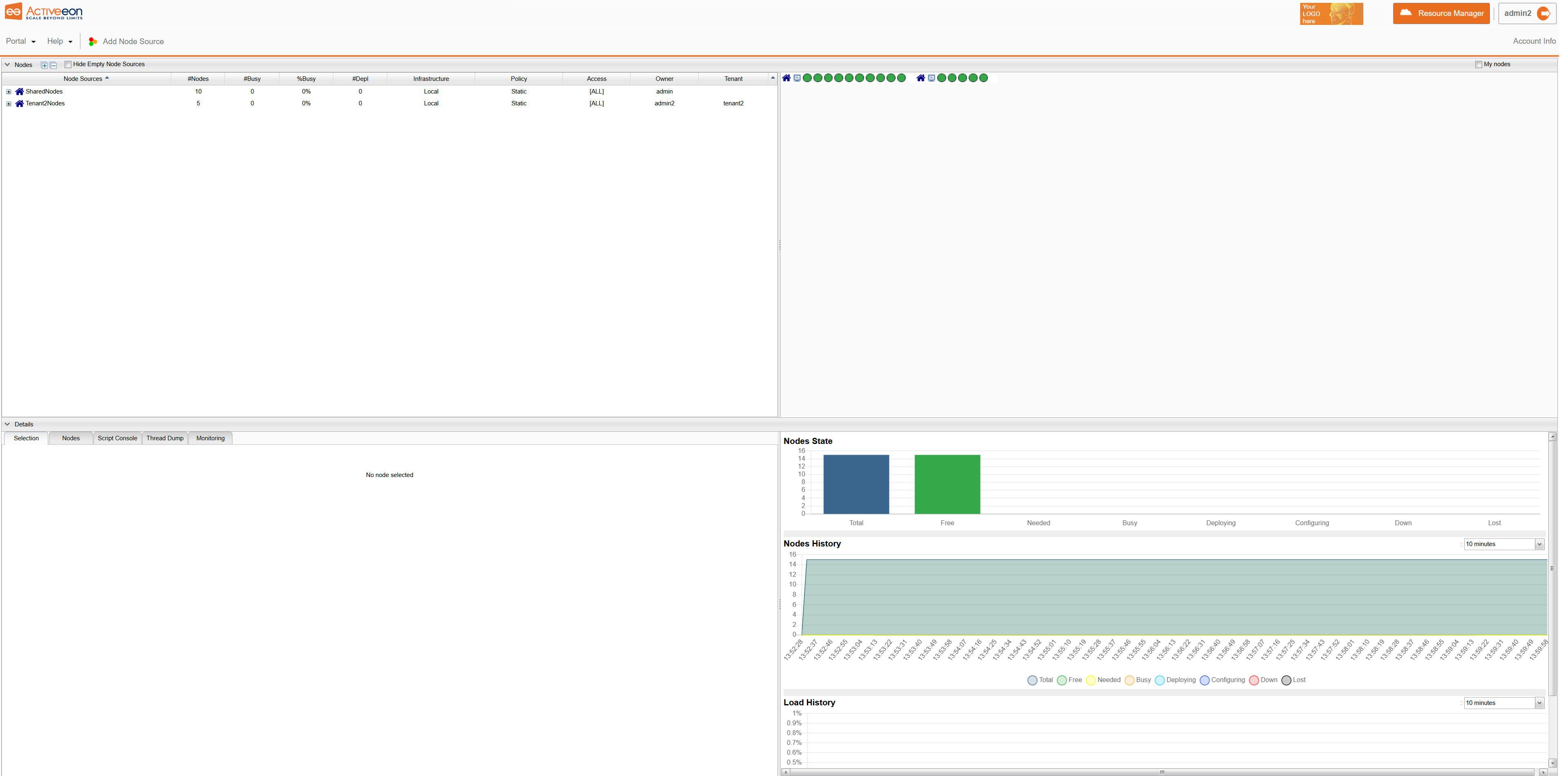

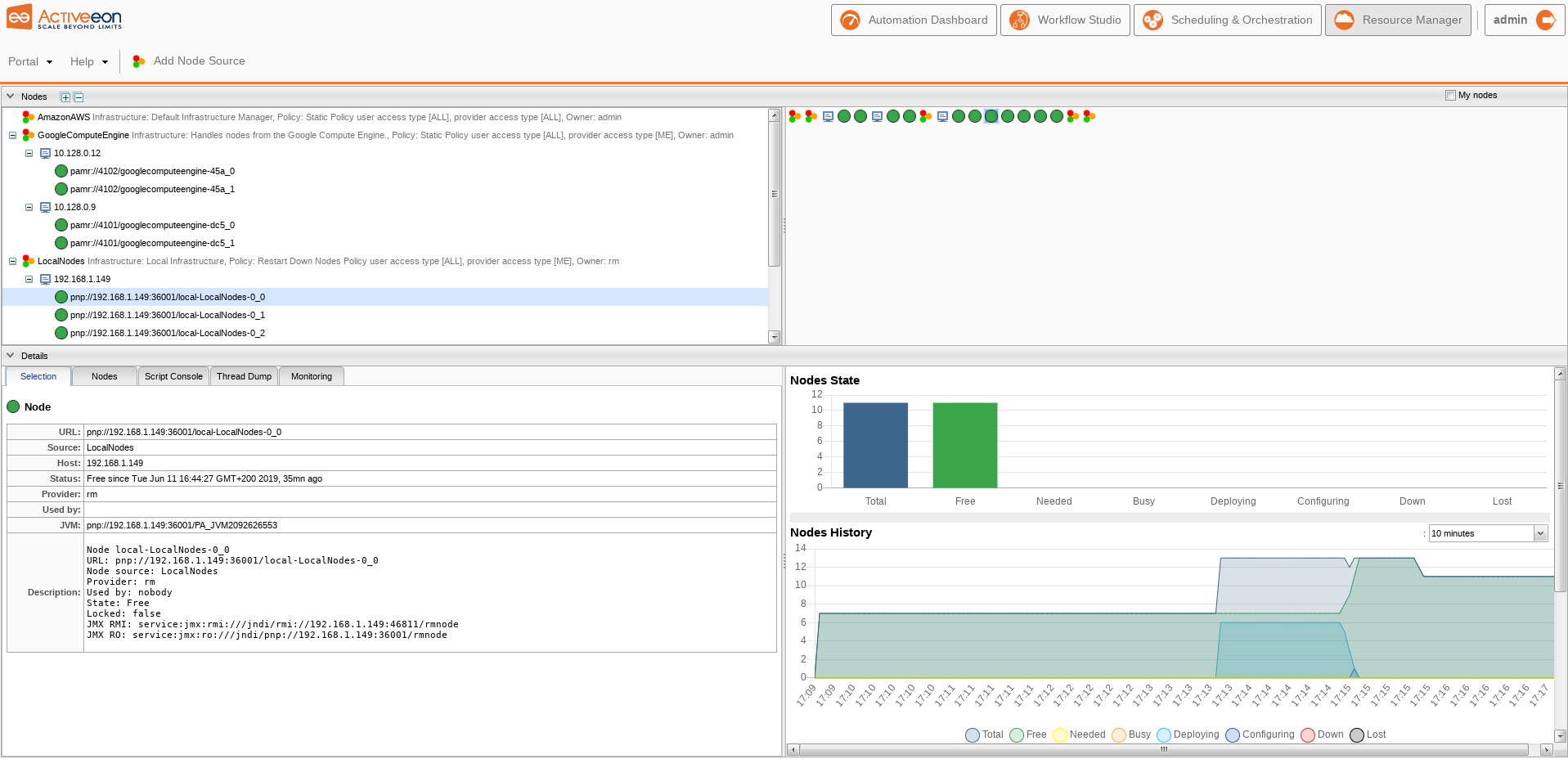

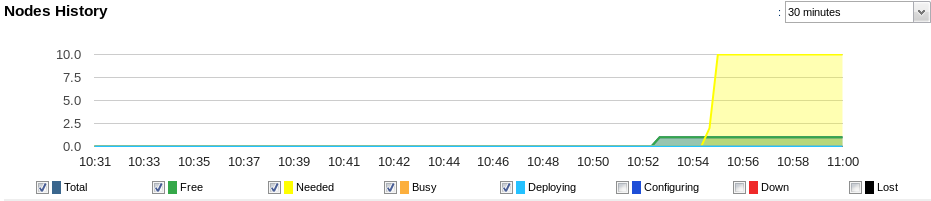

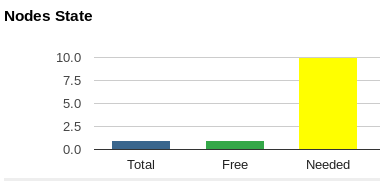

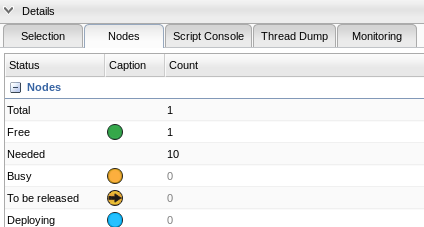

The screenshots above taken from the Resource Manager Portal shows that you can configure ProActive Scheduler to dynamically scale up and down the infrastructure being used (e.g. the number of VMs you buy on Clouds) according to the actual workload to execute.

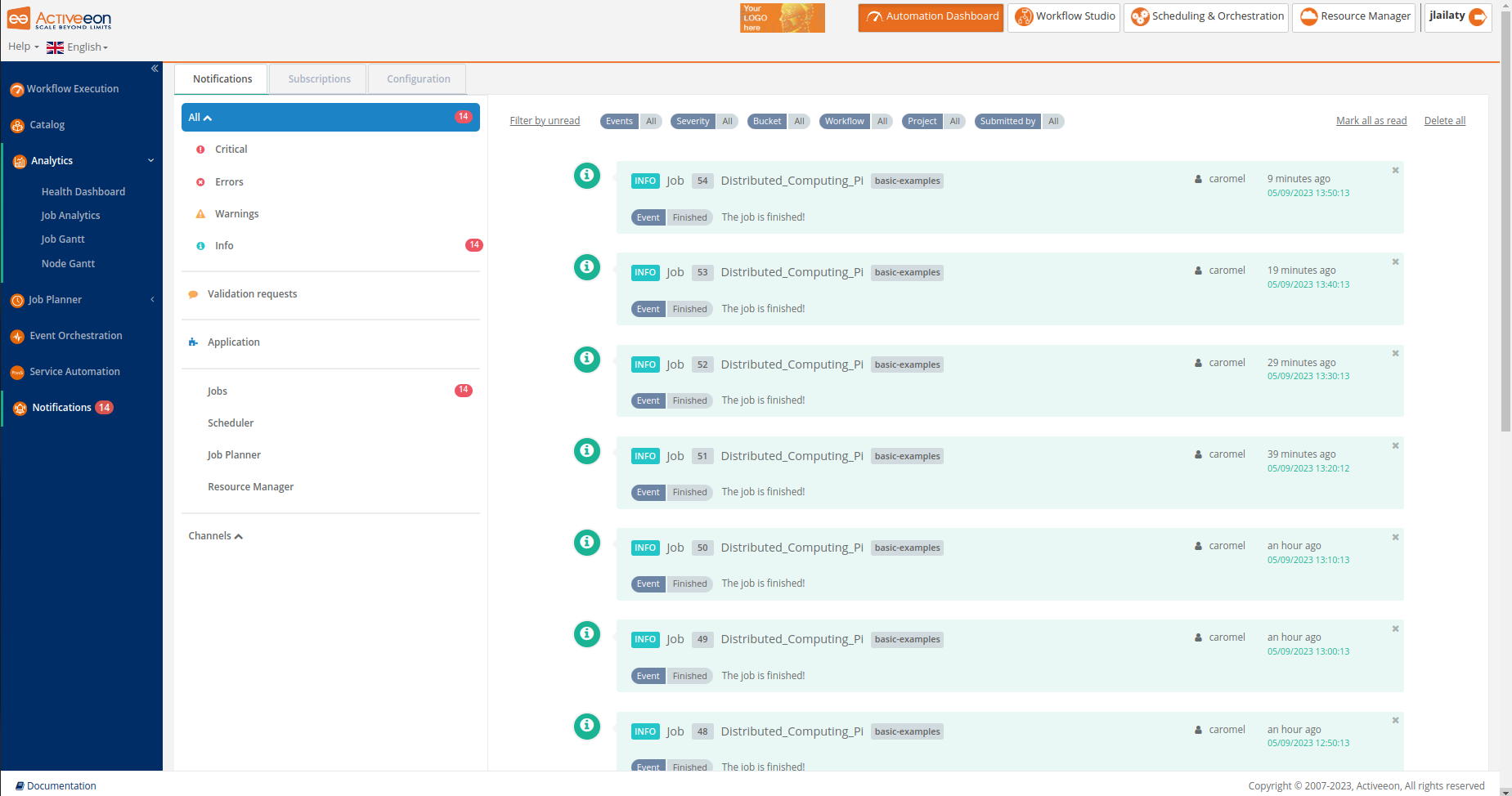

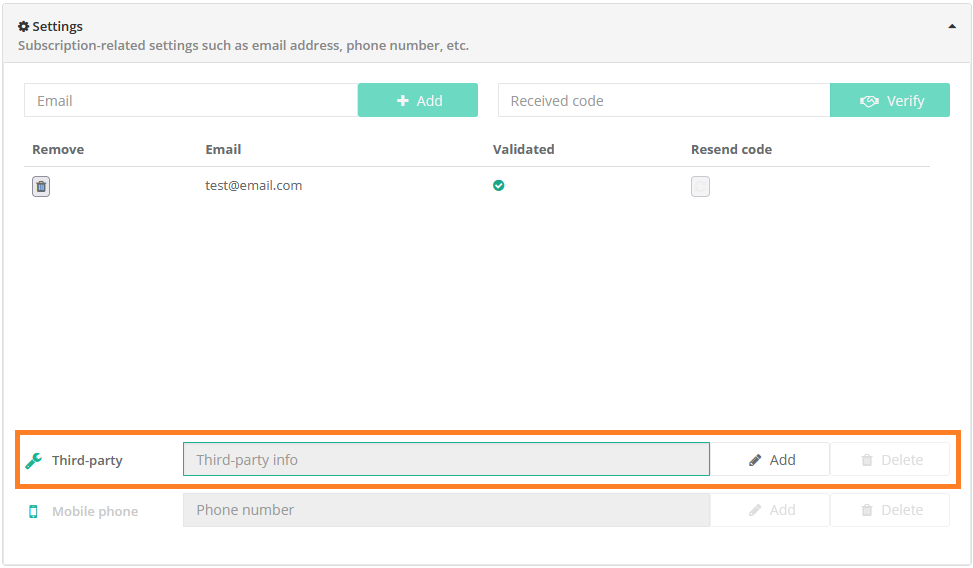

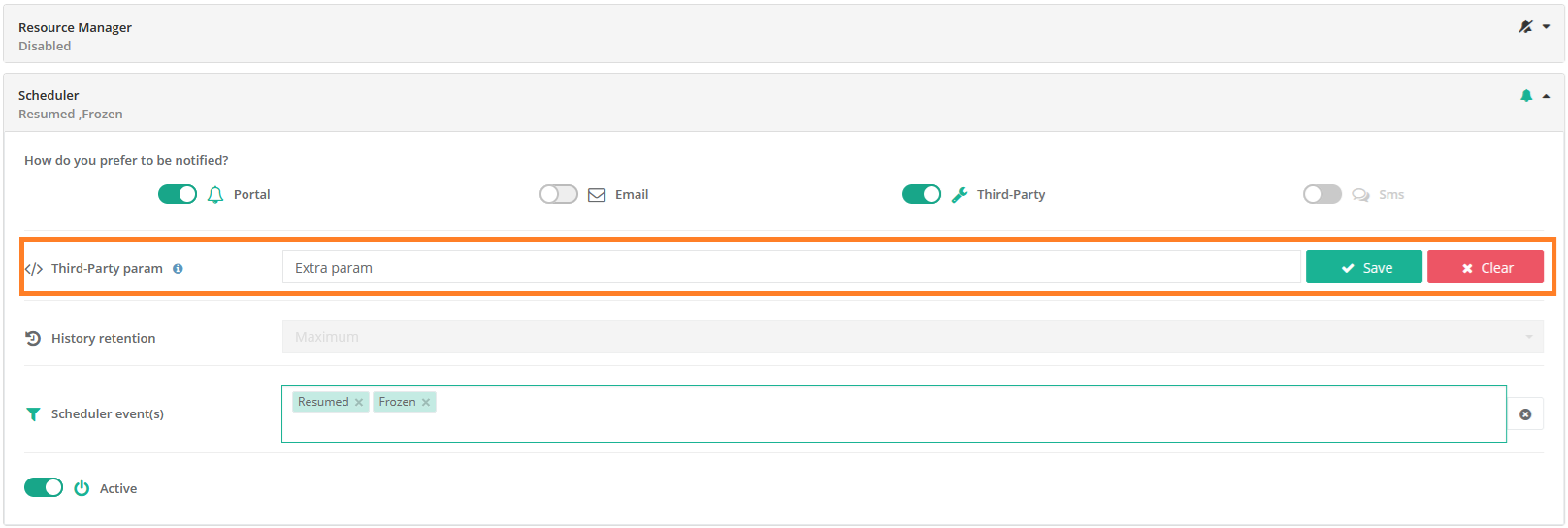

The screenshot above shows the Notifications Portal, which is a ProActive component that allows a user or a group of users to subscribe to different types of notifications (web, email, sms or a third-party notification) when certain event(s) occur (e.g., job In-Error state, job in Finished state, scheduler in Paused state, etc.).

The administration guide covers cluster setup and cluster administration. Cluster setup includes two main steps:

-

The installation and configuration of the ProActive Scheduler.

-

The set up of ProActive Nodes.

1.1. Glossary

The following terms are used throughout the documentation:

- ProActive Workflows & Scheduling

-

The full distribution of ProActive for Workflows & Scheduling, it contains the ProActive Scheduler server, the REST & Web interfaces, the command line tools. It is the commercial product name.

- ProActive Scheduler

-

Can refer to any of the following:

-

A complete set of ProActive components.

-

An archive that contains a released version of ProActive components, for example

activeeon_enterprise-pca_server-OS-ARCH-VERSION.zip. -

A set of server-side ProActive components installed and running on a Server Host.

-

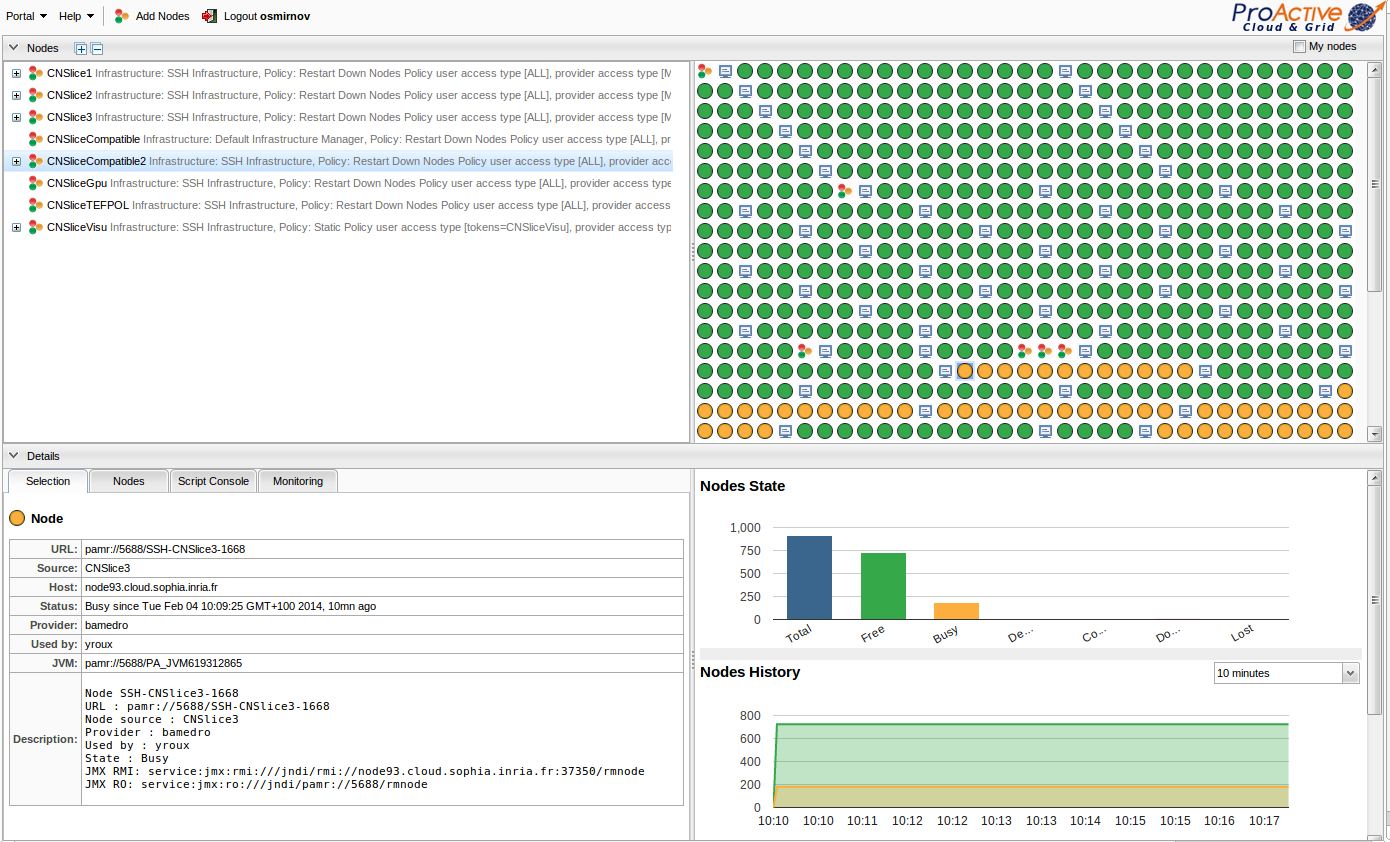

- Resource Manager

-

ProActive component that manages ProActive Nodes running on Compute Hosts.

- Scheduler

-

ProActive component that accepts Jobs from users, orders the constituent Tasks according to priority and resource availability, and eventually executes them on the resources (ProActive Nodes) provided by the Resource Manager.

| Please note the difference between Scheduler and ProActive Scheduler. |

- REST API

-

ProActive component that provides RESTful API for the Resource Manager, the Scheduler and the Catalog.

- Resource Manager Portal

-

ProActive component that provides a web interface to the Resource Manager. Also called Resource Manager portal.

- Scheduler Portal

-

ProActive component that provides a web interface to the Scheduler. Also called Scheduler portal.

- Workflow Studio

-

ProActive component that provides a web interface for designing Workflows.

- Automation Dashboard

-

Centralized interface for the following web portals: Workflow Execution, Catalog, Analytics, Job Planner, Service Automation, Event Orchestration and Notifications.

- Analytics

-

Web interface and ProActive component, responsible to gather monitoring information for ProActive Scheduler, Jobs and ProActive Nodes

- Job Analytics

-

ProActive component, part of the Analytics service, that provides an overview of executed workflows along with their input variables and results.

- Node Gantt

-

ProActive component, part of the Analytics service, that provides an overview of ProActive Nodes usage over time.

- Health Dashboard

-

Web interface, part of the Analytics service, that displays global statistics and current state of the ProActive server.

- Job Analytics portal

-

Web interface of the Analytics component.

- Notification Service

-

ProActive component that allows a user or a group of users to subscribe to different types of notifications (web, email, sms or a third-party notification) when certain event(s) occur (e.g., job In-Error state, job in Finished state, scheduler in Paused state, etc.).

- Notification portal

-

Web interface to visualize notifications generated by the Notification Service and manage subscriptions.

- Notification Subscription

-

In Notification Service, a subscription is a per-user configuration to receive notifications for a particular type of events.

- Third Party Notification

-

A Notification Method which executes a script when the notification is triggered.

- Notification Method

-

Element of a Notification Subscription which defines how a user is notified (portal, email, third-party, etc.).

- Notification Channel

-

In the Notification Service, a channel is a notification container that can be notified and displays notifications to groups of users

- Service Automation

-

ProActive component that allows a user to easily manage any On-Demand Services (PaaS, IaaS and MaaS) with full Life-Cycle Management (create, deploy, pause, resume and terminate).

- Service Automation portal

-

Web interface of the Service Automation.

- Workflow Execution portal

-

Web interface, is the main portal of ProActive Workflows & Scheduling and the entry point for end-users to submit workflows manually, monitor their executions and access job outputs, results, services endpoints, etc.

- Catalog portal

-

Web interface of the Catalog component.

- Event Orchestration

-

ProActive component that monitors a system, according to predefined set of rules, detects complex events and then triggers user-specified actions.

- Event Orchestration portal

-

Web interface of the Event Orchestration component.

- Scheduling API

-

ProActive component that offers a GraphQL endpoint for getting information about a ProActive Scheduler instance.

- Connector IaaS

-

ProActive component that enables to do CRUD operations on different infrastructures on public or private Cloud (AWS EC2, Openstack, VMWare, Kubernetes, etc).

- Job Planner

-

ProActive component providing advanced scheduling options for Workflows.

- Job Planner portal

-

Web interface to manage the Job Planner service.

- Calendar Definition portal

-

Web interface, component of the Job Planner that allows to create Calendar Definitions.

- Calendar Association portal

-

Web interface, component of the Job Planner that defines Workflows associated to Calendar Definitions.

- Execution Planning portal

-

Web interface, component of the Job Planner that allows to see future executions per calendar.

- Job Planner Gantt portal

-

Web interface, component of the Job Planner that allows to see past and future executions on a Gantt diagram.

- Bucket

-

ProActive notion used with the Catalog to refer to a specific collection of ProActive Objects and in particular ProActive Workflows.

- Server Host

-

The machine on which ProActive Scheduler is installed.

SCHEDULER_ADDRESS-

The IP address of the Server Host.

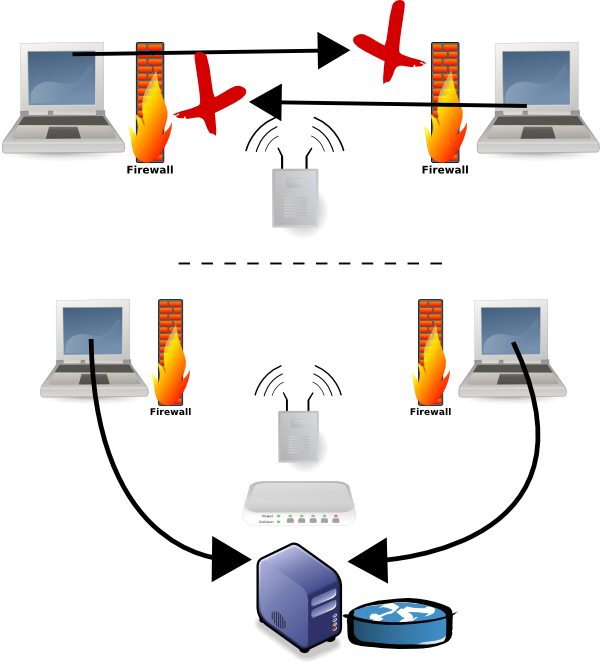

- ProActive Node

-

One ProActive Node can execute one Task at a time. This concept is often tied to the number of cores available on a Compute Host. We assume a task consumes one core (more is possible, see multi-nodes tasks), so on a 4 cores machines you might want to run 4 ProActive Nodes. One (by default) or more ProActive Nodes can be executed in a Java process on the Compute Hosts and will communicate with the ProActive Scheduler to execute tasks. We distinguish two types of ProActive Nodes:

-

Server ProActive Nodes: Nodes that are running in the same host as ProActive server;

-

Remote ProActive Nodes: Nodes that are running on machines other than ProActive Server.

-

- Compute Host

-

Any machine which is meant to provide computational resources to be managed by the ProActive Scheduler. One or more ProActive Nodes need to be running on the machine for it to be managed by the ProActive Scheduler.

|

Examples of Compute Hosts:

|

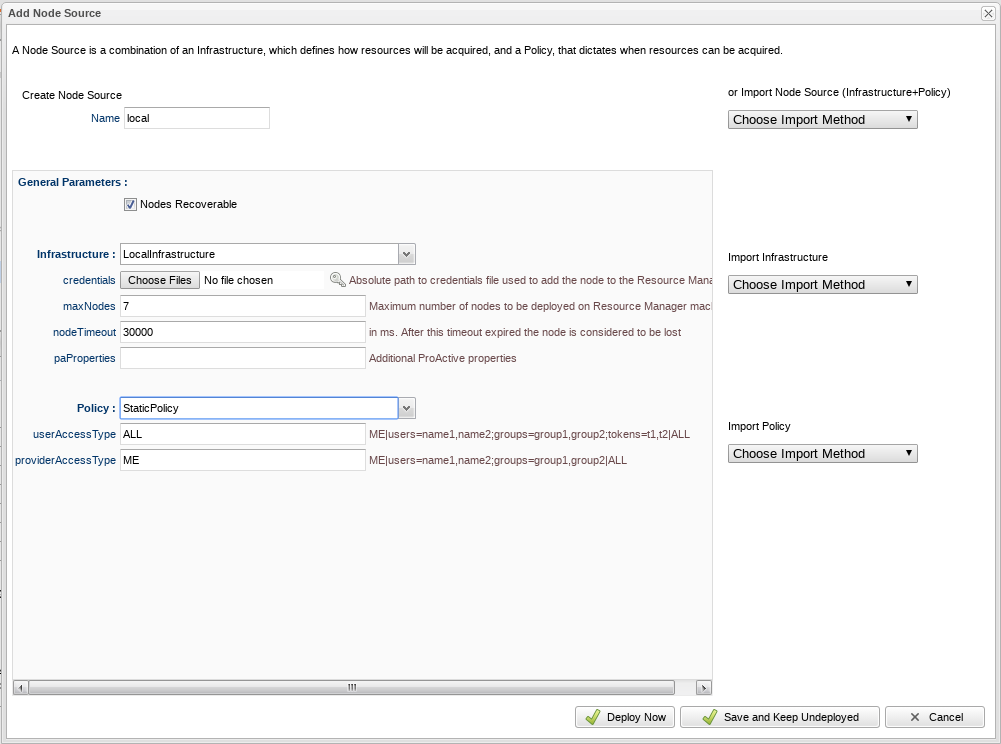

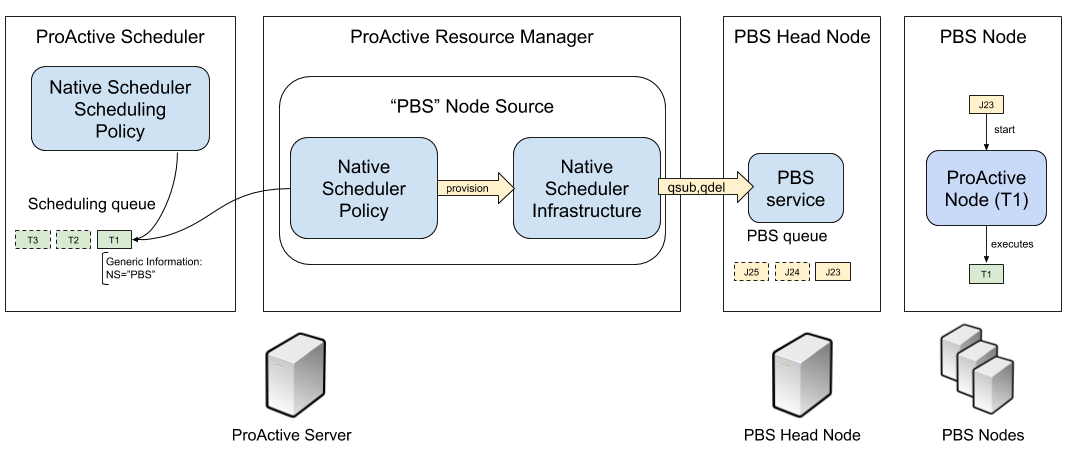

- Node Source

-

A set of ProActive Nodes deployed using the same deployment mechanism and sharing the same access policy.

- Node Source Infrastructure

-

The configuration attached to a Node Source which defines the deployment mechanism used to deploy ProActive Nodes.

- Node Source Policy

-

The configuration attached to a Node Source which defines the ProActive Nodes acquisition and access policies.

- Scheduling Policy

-

The policy used by the ProActive Scheduler to determine how Jobs and Tasks are scheduled.

PROACTIVE_HOME-

The path to the extracted archive of ProActive Scheduler release, either on the Server Host or on a Compute Host.

- Workflow

-

User-defined representation of a distributed computation. Consists of the definitions of one or more Tasks and their dependencies.

- Workflow Revision

-

ProActive concept that reflects the changes made on a Workflow during it development. Generally speaking, the term Workflow is used to refer to the latest version of a Workflow Revision.

- Generic Information

-

Are additional information which are attached to Workflows or Tasks. See generic information.

- Calendar Definition

-

Is a json object attached by adding it to the Generic Information of a Workflow.

- Job

-

An instance of a Workflow submitted to the ProActive Scheduler. Sometimes also used as a synonym for Workflow.

- Job Id

-

An integer identifier which uniquely represents a Job inside the ProActive Scheduler.

- Job Icon

-

An icon representing the Job and displayed in portals. The Job Icon is defined by the Generic Information workflow.icon.

- Task

-

A unit of computation handled by ProActive Scheduler. Both Workflows and Jobs are made of Tasks. A Task must define a ProActive Task Executable and can also define additional task scripts

- Task Id

-

An integer identifier which uniquely represents a Task inside a Job ProActive Scheduler. Task ids are only unique inside a given Job.

- Task Executable

-

The main executable definition of a ProActive Task. A Task Executable can either be a Script Task, a Java Task or a Native Task.

- Script Task

-

A Task Executable defined as a script execution.

- Java Task

-

A Task Executable defined as a Java class execution.

- Native Task

-

A Task Executable defined as a native command execution.

- Additional Task Scripts

-

A collection of scripts part of a ProActive Task definition which can be used in complement to the main Task Executable. Additional Task scripts can either be Selection Script, Fork Environment Script, Pre Script, Post Script, Control Flow Script or Cleaning Script

- Selection Script

-

A script part of a ProActive Task definition and used to select a specific ProActive Node to execute a ProActive Task.

- Fork Environment Script

-

A script part of a ProActive Task definition and run on the ProActive Node selected to execute the Task. Fork Environment script is used to configure the forked Java Virtual Machine process which executes the task.

- Pre Script

-

A script part of a ProActive Task definition and run inside the forked Java Virtual Machine, before the Task Executable.

- Post Script

-

A script part of a ProActive Task definition and run inside the forked Java Virtual Machine, after the Task Executable.

- Control Flow Script

-

A script part of a ProActive Task definition and run inside the forked Java Virtual Machine, after the Task Executable, to determine control flow actions.

- Control Flow Action

-

A dynamic workflow action performed after the execution of a ProActive Task. Possible control flow actions are Branch, Loop or Replicate.

- Branch

-

A dynamic workflow action performed after the execution of a ProActive Task similar to an IF/THEN/ELSE structure.

- Loop

-

A dynamic workflow action performed after the execution of a ProActive Task similar to a FOR structure.

- Replicate

-

A dynamic workflow action performed after the execution of a ProActive Task similar to a PARALLEL FOR structure.

- Cleaning Script

-

A script part of a ProActive Task definition and run after the Task Executable and before releasing the ProActive Node to the Resource Manager.

- Script Bindings

-

Named objects which can be used inside a Script Task or inside Additional Task Scripts and which are automatically defined by the ProActive Scheduler. The type of each script binding depends on the script language used.

- Task Icon

-

An icon representing the Task and displayed in the Studio portal. The Task Icon is defined by the Task Generic Information task.icon.

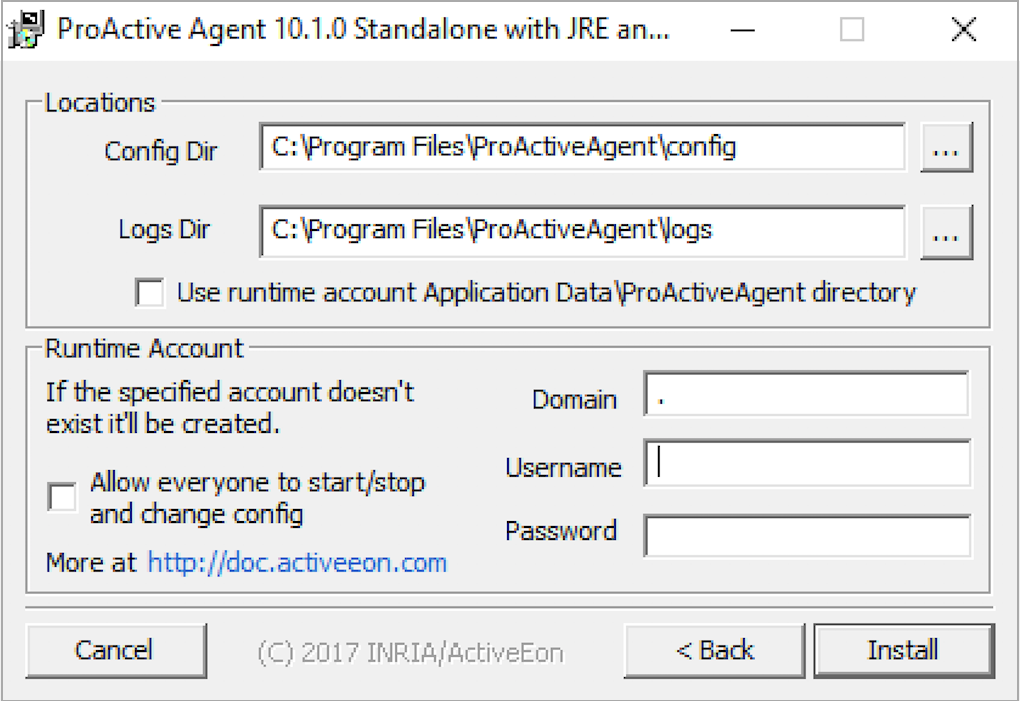

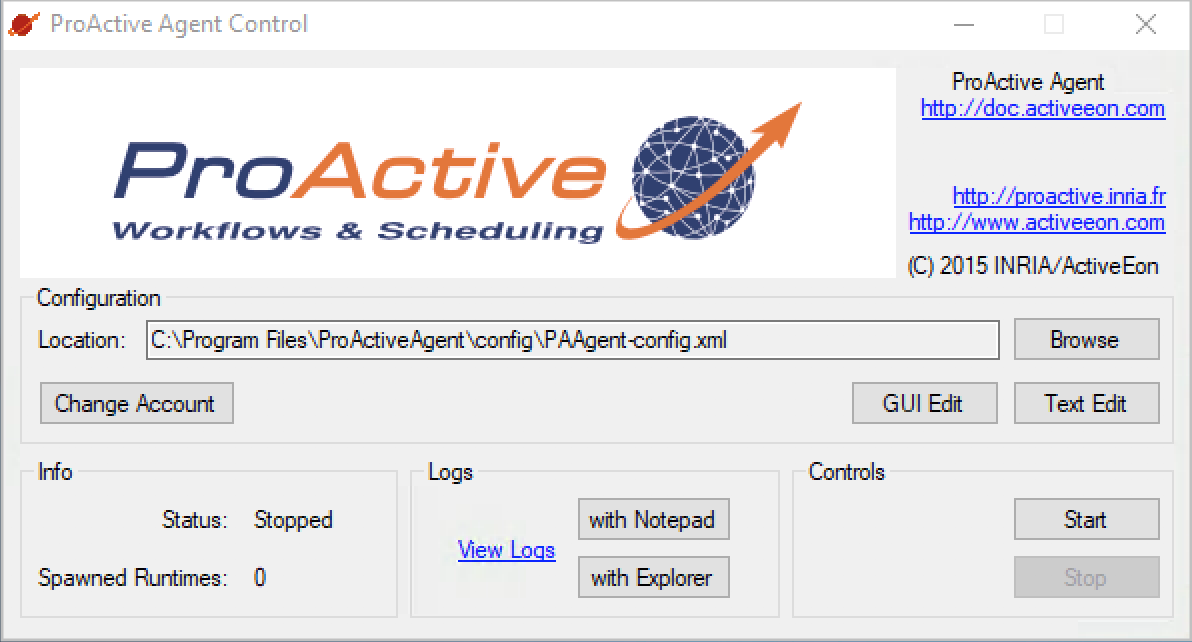

- ProActive Agent

-

A daemon installed on a Compute Host that starts and stops ProActive Nodes according to a schedule, restarts ProActive Nodes in case of failure and enforces resource limits for the Tasks.

2. Get Started

ProActive Scheduler can be installed and started either manually or automatically.

For manual installation, you can install and start ProActive:

-

as a Java process, see section Start ProActive Manually as a Java process.

-

as a system service, see section Install and start ProActive as a system service.

For automatic installation, you can install and start ProActive:

-

using Docker Compose (applicable to any machine with Docker and Docker Compose installed), see section Automated ProActive Installation with Docker Compose.

-

using Kubernetes (applicable to any On-premises or AKS Kubernetes clusters) , see section Automated ProActive Installation with Kubernetes.

-

using Shell Scripts (applicable to both physical and cloud virtual machines), see section Automated ProActive Installation with Shell Scripts.

We recommend using one of the automated installation methods, as they are faster and simpler.

2.1. Minimum requirements

The minimal hardware requirements for the ProActive server is 4 Cores and 8 GB RAM.

ProActive server is compatible with any 64 bits Linux, Windows or Mac operating system. The server process uses at minimum 4 GB of RAM.

ProActive Nodes are compatible with any Linux, Windows or Mac systems (both 64 bits and 32 bits versions). ProActive Nodes can also run on some Unix systems (such as Solaris, HP-UX), by installing and using a Java Runtime Environment compatible with these systems.

Sizing the ProActive server correctly depends on the number of jobs and tasks executed and submitted per day, the number of nodes connected to the server and the number of concurrent users.

A medium-sized ProActive Scheduler installation uses 12 cores, 24 GB RAM. Large setups use 24 cores and 100 GB RAM.

Additionally, using local ProActive Nodes (deployed directly inside the ProActive server) or having other services running on the same machine as the server can impact the sizing.

2.2. Start ProActive Manually as a Java process

First, Download ProActive Scheduler and unzip the archive. The extracted folder will be referenced as PROACTIVE_HOME in the rest of the documentation.

The archive contains all required dependencies with no extra prerequisites.

The first way to start a ProActive Scheduler is by running the proactive-server script. To do so, you can use the following command with no extra configuration.

$PROACTIVE_HOME/bin/proactive-server| It is possible to start the server with a specific number of nodes by adding the -ln parameter. For example, PROACTIVE_HOME/bin/proactive-server -ln 1 will start the proactive server with a single node. In case this parameter is not specified, the number of nodes corresponds to the number of cores of the machine where ProActive server is installed. |

The router created on localhost:33647 Starting the scheduler... Starting the resource manager... The resource manager with 4 local nodes created on pnp://localhost:41303/ The scheduler created on pnp://localhost:41303/ The web application /automation-dashboard created on http://localhost:8080/automation-dashboard The web application /studio created on http://localhost:8080/studio The web application /job-analytics created on http://localhost:8080/job-analytics The web application /notification-service created on http://localhost:8080/notification-service The web application /cloud-automation-service created on http://localhost:8080/cloud-automation-service The web application /catalog created on http://localhost:8080/catalog The web application /proactive-cloud-watch created on http://localhost:8080/proactive-cloud-watch The web application /scheduler created on http://localhost:8080/scheduler The web application /scheduling-api created on http://localhost:8080/scheduling-api The web application /connector-iaas created on http://localhost:8080/connector-iaas The web application /rm created on http://localhost:8080/rm The web application /rest created on http://localhost:8080/rest The web application /job-planner created on http://localhost:8080/job-planner *** Get started at http://localhost:8080 ***

The following ProActive Scheduler components and services are started:

The URLs of the Scheduler, Resource Manager, REST API and Web Interfaces are displayed in the output.

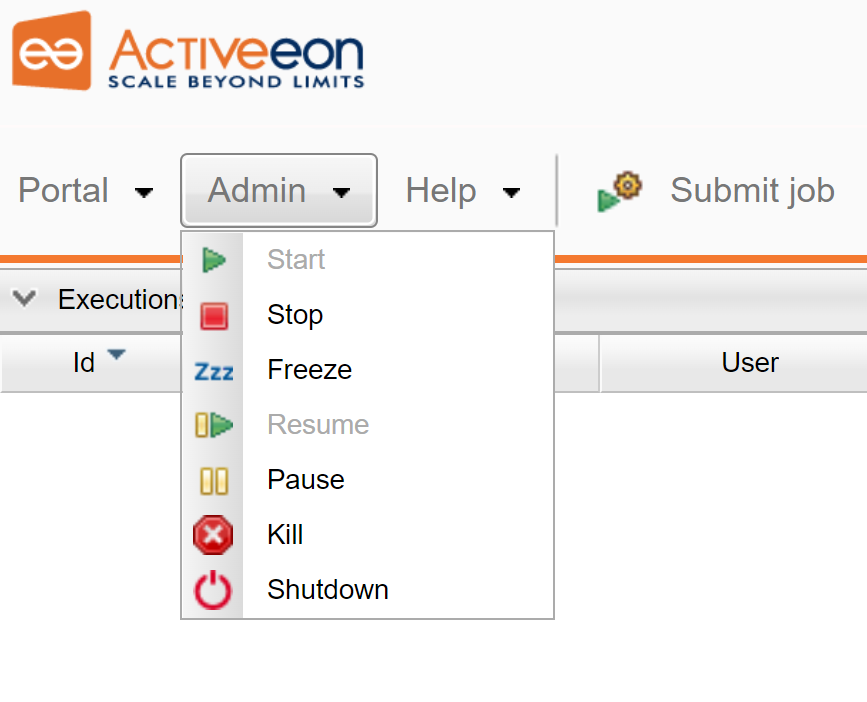

The ProActive server can also be started in a specific state by using the command line options --stopped, --paused or --frozen.

When one of these options is used, the scheduler will start in the provided state, which can be changed afterwards using the ProActive Scheduler portal.

See Scheduler Life-Cycle Management for an explanation of the different scheduler states.

2.3. Install and start ProActive as a system service

First, Download ProActive Scheduler and unzip the archive. The extracted folder will be referenced as PROACTIVE_HOME in the rest of the documentation.

The archive contains all required dependencies with no extra prerequisites.

The second way to start a ProActive Scheduler is to install it as a system service. Depending on your operating system, the installation of ProActive can be done as follows.

2.3.1. How to install ProActive on Windows

Under Windows, it is possible to use the nssm service manager tool to manage a running script as a service. You can configure nssm to absolve all responsibility for restarting it and let Windows take care of recovery actions.

In our case, you need to provide to nssm the Path to this script $PROACTIVE_HOME/bin/proactive-server.bat to start ProActive as a service.

2.3.2. How to install ProActive on Linux

Under Linux, the user has to run the install.sh script located under the tools folder as root and follow the installation steps provided in an interactive mode.

Generally, it is recommended to keep the default configuration (internal account, protocol used, port , etc) unless you would like to setup a particular one.

Below is an example of an installation procedure with default answers:

$ sudo $PROACTIVE_HOME/tools/install.sh Directory where to install the scheduler: /opt/proactive Name of the user starting the ProActive service: proactive Group of the user starting the ProActive service: proactive ProActive use internal system accounts which should be modified in a production environment. Do you want to regenerate the internal accounts ? [Y/n] n ProActive can integrate with Linux PAM (Pluggable Authentication Modules) to authenticate users of the linux system. Warning: this will add the proactive account to the linux "shadow" group Do you want to set PAM integration for ProActive scheduler? [y/N] n Protocol to use for accessing Web apps deployed by the proactive server: [http/https] http Port to use for accessing Web apps deployed by the proactive server: 8080 Number of ProActive nodes to start on the server machine: 4 The ProActive server can automatically remove from its database old jobs. This feature also remove the associated job logs from the file system. Do you want to enable automatic job/node history removal? [Y/n] y Remove history older than (in days) [30]: 30 Here are the network interfaces available on your machine and the interface which will be automatically selected by ProActive: Available interfaces: Eligible addresses: /xxx.xxx.xxx.xxx /127.0.0.1 Elected address : /xxx.xxx.xxx.xxx Elected address : Do you want to change the network interface used by the ProActive server? [y/N] n Start the proactive-scheduler service at machine startup? [Y/n] y Restrict the access to the ProActive installation folder to user proactive? [y/N] n Restrict the access to the configuration files containing sensitive information to user proactive? [Y/n] y Do you want to start the scheduler service now? [Y/n] y If a problem occurs, check output in /var/log/proactive/scheduler

The installation script will create a symbolic link called default under the root path chosen for the installation that will point to the current version folder.

Accordingly, the ProActive server installation can be accessed using /opt/proactive/default path.

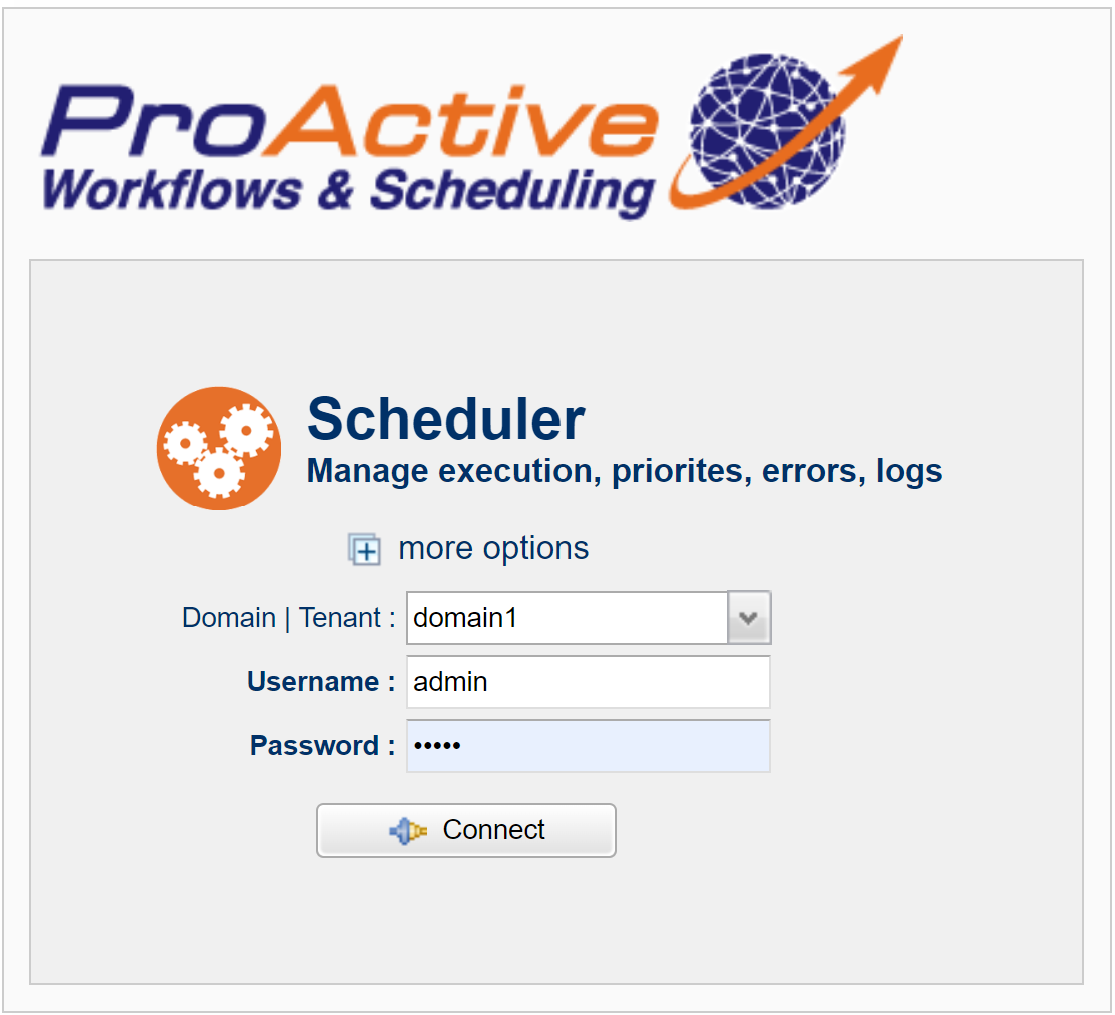

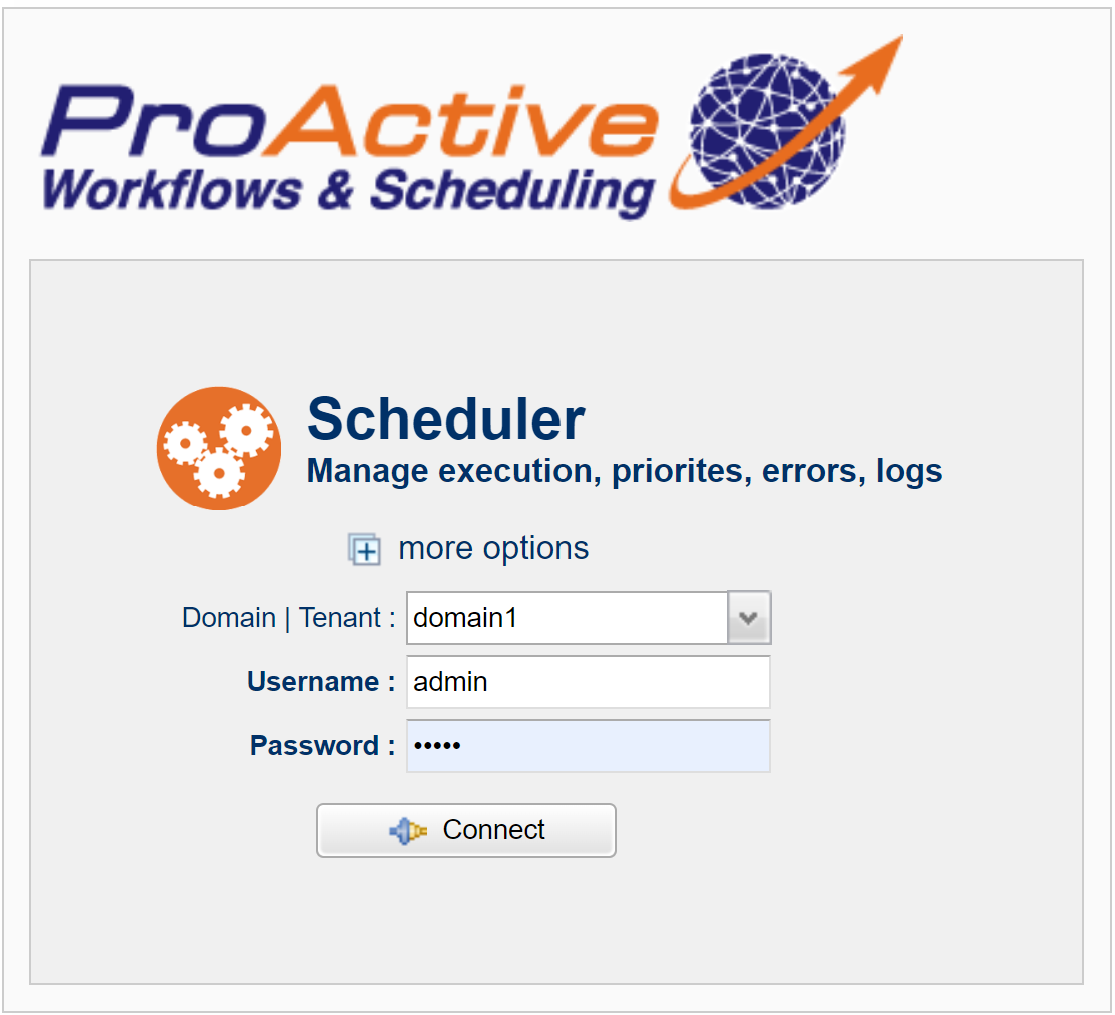

Once the server is up, ProActive Web Portals are available at http://localhost:8080/. The user can access to any of the four portals using the default credentials: admin/admin

Your ProActive Scheduler is ready to execute Jobs!

2.3.3. How to upgrade ProActive on Linux

First, Download ProActive Scheduler and unzip the archive inside a temporary folder that will be used only during the installation (for example /home/user/temp).

Make sure that you don’t extract the new version archive in the same folder as the current ProActive installation (e.g. not in /opt/proactive) as it would otherwise create conflicts.

To upgrade ProActive, the user has to run the install.sh script located under the tools folder of the extracted new version as root or sudo and follow the installations steps provided in an interactive mode.

When launching the installation script, the answer to the question Directory where to install the scheduler should point to the same base directory where the old version is installed (e.g. /opt/proactive).

This will enable the detection of the existing installation and copying addons, data files and the configuration changes from the previous installation to the new one.

Also, the existing version installed in /opt/proactive should not be removed prior to the upgrade. Otherwise, the porting of data and settings from the previous version to the new one would be impossible.

The new version installation will also create a symbolic link called default under ProActive installation root directory that points to the new version, and a symbolic link called previous that points to the older version.

It is thus possible to revert the upgrade if anything goes wrong simply by changing the default symbolic link target. In that case, The previous symbolic link can either be removed or pointed to an older installation.

In some cases, this operation can induce some conflicts that can be solved easily using Git command-line tools.

Below is an example of the upgrade installation procedure with default answers:

$ sudo $PROACTIVE_HOME/tools/install.sh This will install the ProActive scheduler 10.1.0-SNAPSHOT as a service. Once the installer is started, it must process until the end otherwise the installation may be corrupted. Do you want to continue? [Y/n] y Directory where to install the scheduler: /opt/proactive Previous installation was owned by user proactive with group proactive Detected an existing ProActive Scheduler installation at /opt/proactive/8.4 Copying addons and data files from the previous installation... Do you want to port all configuration changes to the new version (do not do this from a version prior to 8.4.0 before executing the patch)? [Y/n] y Porting configuration into new installation... [master 61aaa19] Commit all changes before switching from 8.4 to 10.1 4 files changed, 8 insertions(+), 8 deletions(-) warning: no common commits Depuis /opt/proactive/8.4/ * [nouvelle branche] master -> old-version/master Cherry-picking all changes to new installation Here is the list of JAR files in the new installation 'addons' folder. If there are duplicates, you need to manually remove outdated versions. Restrict the access to the ProActive installation folder to user proactive? [y/N] n Restrict the access to the configuration files containing sensitive information to user proactive? [Y/n] y Resource Manager credentials are used by remote ProActive Agents to register to the scheduler. Do you want to start the scheduler service now? [Y/n] y If a problem occurs, check output in /var/log/proactive/scheduler

| Once the upgrade is performed and the new version started for the first time, the ProActive server will upgrade Catalog objects to the new version. This procedure ensures that any object modified manually by a user will not be upgraded. |

2.4. Automated ProActive Installation with Docker Compose

ProActive could be easily deployed and started within a fully containerized environment. For this purpose, we provide a ready-to-use Docker Compose configuration that enables you running ProActive Workflows and Scheduling (PWS) as a containerized service. It starts the following services:

-

ProActive server container

-

(Optional) ProActive PostgreSQL database container. The database container is not started when using our embedded HSQL Database.

The Docker Compose configuration pulls the ProActive server and database images from the Private Activeeon Docker Registry.

These images can be used out of the box, on any operating system. You can customize the ProActive server and database settings, located in the .env file according to your requirements.

To be able to access the Private Activeeon Docker Registry, a ProActive enterprise licence and access credentials are required. Please contact us at contact@activeeon.com to request access.

Here are the installation steps you need to follow in order to have ProActive running in a fully containerized environment:

2.4.1. Download the Docker Compose Installation Package

We offer a public Docker Compose installation package, enabling you to easily configure and initiate the Docker installation within your environment.

The Docker Compose installation package includes:

-

The Docker Compose input file (

.env): Used to set the environment variables that are then read by the Docker Compose configuration files. -

The Docker Compose configuration files: Define and configure the ProActive server and database containers using YAML definitions:

-

docker-compose-hsqldb.yml: Used to have ProActive with an embedded HSQL database. -

docker-compose-pg.yml: Used to have ProActive with a Postgres database.

-

You can proceed to donwload it using the following command:

2.4.2. Configure the Docker Compose Input File

Before starting the ProActive installation, you need first to configure the installation input parameters using the Docker Compose environment file .env.

Click here to display the .env input file

.env:

##########################################################################################################################################

########################## 1. Installation Properties ####################################################################################

##########################################################################################################################################

# Settings of the Docker registry images

# ProActive Docker image namespace

DOCKER_IMAGE_PROACTIVE_NAMESPACE=<proactive_namespace>

# ProActive version tag to be deployed

DOCKER_IMAGE_PROACTIVE_TAG=<proactive_tag>

# Postgres Docker image namespace

DOCKER_IMAGE_POSTGRES_NAMESPACE=<postgres_namespace>

# Postgres version tag to be deployed

DOCKER_IMAGE_POSTGRES_TAG=<postgres_namespace>

##########################################################################################################################################

########################## 2. PWS Server Configuration Properties ########################################################################

##########################################################################################################################################

# The internal hostname used (e.g. localhost, myserver) or IP address (e.g. 127.0.0.1, 192.168.12.1)

PWS_HOST_ADDRESS=<host_address>

# The internal http protocol to use (http or https)

PWS_PROTOCOL=http

# The internal port used (e.g., 8080 for http, 8443 for https)

PWS_PORT=8080

# The internal port to use for PARM communication, 33647 by default

PWS_PAMR_PORT=33647

# Remove jobs history older than (in days), 30 days by default

PWS_JOB_CLEANUP_DAYS=30

# User starting the ProActive Server

PWS_USER_ID=1001

PWS_USER_GROUP_ID=1001

PWS_USER_NAME=activeeon

PWS_USER_GROUP_NAME=activeeon

# Password of the ProActive 'admin' user

PWS_LOGIN_ADMIN_PASSWORD=activeeon

# Number of ProActive agent nodes to deploy on the ProActive Server machine

PWS_WORKER_NODES=4

##########################################################################################################################################

########################## 3. Public Access Configuration Properties #####################################################################

##########################################################################################################################################

# 'ENABLE_PUBLIC_ACCESS' should be set to true when the ProActive Server is deployed behind a reverse proxy or inside a cloud instance

ENABLE_PUBLIC_ACCESS=false

# Public Hostname used (e.g. public.myserver.mydomain) or IP address (e.g. 192.168.12.1)

PWS_PUBLIC_HOSTNAME=<public_host_address>

# Public protocol used (http or https)

PWS_PUBLIC_SCHEME=https

# Public port used (e.g. 8080, 8443)

PWS_PUBLIC_PORT=8443

##########################################################################################################################################

########################## 4. TLS Certificate Configuration Properties ###################################################################

##########################################################################################################################################

# 'ENABLE_TLS_CERTIFICATE_CONFIGURATION' should be set to true in case you have a valid certificate for the host address

ENABLE_TLS_CERTIFICATE_CONFIGURATION=false

# Path of the certificate full chain or PFX keystore (accepted formats are crt, pem or pfx)

TLS_CERTIFICATE_PATH=/opt/proactive/server/backup/fullchain.pem

# If the certificate is provided in pem/crt format, path to the server private key ('TLS_CERTIFICATE_KEY_PATH' is ignored if the certificate is provided as a pfx keystore)

TLS_CERTIFICATE_KEY_PATH=/opt/proactive/server/backup/privkey.pem

# Password of the server private key (if applicable) or pfx keystore

TLS_CERTIFICATE_KEYSTORE_PASSWORD=activeeon

##########################################################################################################################################

########################## 5. LDAP Configuration Properties ##############################################################################

##########################################################################################################################################

# 'ENABLE_LDAP_AUTHENTICATION' should be set to true if you would like to enable the LDAP authentication to the ProActive Server

ENABLE_LDAP_AUTHENTICATION=false

# Url of the ldap server. Multiple urls can be provided separated by commas

LDAP_SERVER_URLS=<ldap_server_urls>

# Scope in the LDAP tree where users can be found

LDAP_USER_SUBTREE=<ldap_user_subtree>

# Scope in the LDAP tree where groups can be found

LDAP_GROUP_SUBTREE=<ldap_group_subtree>

# Login that will be used when binding to the ldap server (to search for a user and its group). The login should be provided using its ldap distinguished name

LDAP_BIND_LOGIN=<ldap_bind_login>

# Password associated with the bind login

LDAP_BIND_PASSWORD=<ldap_bind_password>

# Ldap filter used to search for a user. %s corresponds to the user login name that will be given as parameter to the filter

LDAP_USER_FILTER=<ldap_user_filter>

# Ldap filter used to search for all groups associated with a user. %s corresponds to the user distinguished name found by the user filter

LDAP_GROUP_FILTER=<ldap_group_filter>

# A login name that will be used to test the user and group filter and validate the configuration. The test login name must be provided as a user id

LDAP_TEST_LOGIN=<ldap_test_login>

# Password associated with the test user

LDAP_TEST_PASSWORD=<ldap_test_password>

# List of existing ldap groups that will be associated with a ProActive administrator role. Multiple groups can be provided separated by commas

LDAP_ADMIN_ROLES=<ldap_admin_roles>

# List of existing ldap groups that will be associated with a ProActive standard user role. Multiple groups can be provided separated by commas

LDAP_USER_ROLES=<ldap_user_roles>

# If enabled, the group filter will use as parameter the user uid instead of its distinguished name

LDAP_GROUP_FILTER_USE_UID=false

# If enabled, StartTLS mode is activated

LDAP_START_TLS=false

##########################################################################################################################################

########################## 6. PWS Users Configuration Properties #########################################################################

##########################################################################################################################################

# 'ENABLE_USERS_CONFIGURATION' should be set to true if you would like to create internal ProActive users via login/password

ENABLE_USERS_CONFIGURATION=true

# A json formatted array where each object defines a set of LOGIN, PASSWORD, and GROUPS of a ProActive internal user

PWS_USERS=[{ "LOGIN": "test_admin", "PASSWORD": "activeeon", "GROUPS": "scheduleradmins,rmcoreadmins" }, { "LOGIN": "test_user", "PASSWORD": "activeeon", "GROUPS": "user" }]

##########################################################################################################################################

########################## 7. External Database Configuration Properties #################################################################

##########################################################################################################################################

# 'ENABLE_EXTERNAL_DATABASE_CONFIGURATION' should be set to true if you are planning to use ProActive with an external database (i.e., postgres)

# It is recommended to not change these parameters unless you would like to change the passwords

ENABLE_EXTERNAL_DATABASE_CONFIGURATION=false

POSTGRES_PASSWORD=postgres

DB_DNS=proactive-database

DB_PORT=5432

DB_DIALECT=org.hibernate.dialect.PostgreSQL94Dialect

DB_RM_PASS=rm

DB_SCHEDULER_PASS=scheduler

DB_CATALOG_PASS=catalog

DB_PCA_PASS=pca

DB_NOTIFICATION_PASS=notificationRequired configuration

The .env file mentioned above is ready for use in a minimal installation. However, it does require the definition of five mandatory parameter values:

-

DOCKER_IMAGE_PROACTIVE_NAMESPACE: Defines the ProActive Docker image namespace (or repository) in the Activeeon private registry. -

DOCKER_IMAGE_PROACTIVE_TAG: Defines the ProActive release version. -

DOCKER_IMAGE_POSTGRES_NAMESPACE: Defines the ProActive Postgres image namespace. -

DOCKER_IMAGE_POSTGRES_TAG: Defines the Postgres version to be used. -

PWS_HOST_ADDRESS: Defines the internal hostname or IP address of the machine where ProActive will be installed.

Advanced configuration

For more advanced configuration of the ProActive server, please refer to the parameters listed below:

PWS Server Configuration Properties

This section outlines the global configuration options available to configure the ProActive server.

-

PWS_PROTOCOL: The internal http protocol to use (http or https). By default, http. -

PWS_PORT: The internal port used (e.g., 8080 for http, 8443 for https). By default, 8080. -

PWS_PAMR_PORT: The internal port to use for PAMR communication. By default, 33647. -

PWS_JOB_CLEANUP_DAYS: Remove job history older than the specified number of days. By default, 30. -

PWS_USER_ID: ID of the user starting the ProActive server. By default, 1001. -

PWS_USER_GROUP_ID: Group ID of the user starting the ProActive server. By default, 1001. -

PWS_USER_NAME: Name of the user starting the ProActive server. By default, activeeon. -

PWS_USER_GROUP_NAME: Group of the user starting the ProActive server. By default, activeeon. -

PWS_LOGIN_ADMIN_PASSWORD: Password of the ProActive 'admin' user. By default, activeeon. -

PWS_WORKER_NODES: Number of ProActive agent nodes to deploy on the ProActive server machine. By default, 4.

Public Access Configuration Properties

This section describes the public REST Urls to be set when you are running the ProActive server behind a reverse proxy or in a cloud instance (e.g., Azure VM)

-

ENABLE_PUBLIC_ACCESS: Set to true when the ProActive server is deployed behind a reverse proxy or inside a cloud instance. By default, false. -

PWS_PUBLIC_HOSTNAME: Public hostname used (e.g. public.myserver.mydomain) or IP address (e.g. 192.168.12.1). -

PWS_PUBLIC_SCHEME: Public protocol used (http or https). -

PWS_PUBLIC_PORT: Public port used (e.g. 8080, 8443).

TLS Certificate Configuration Properties

In case you would like to set up a TLS certificate for the ProActive server, you can customize it in this section:

-

ENABLE_TLS_CERTIFICATE_CONFIGURATION: Set to true in case you have a valid certificate for the host address. By default, false. -

TLS_CERTIFICATE_PATH: Path of the certificate full chain or PFX keystore (accepted formats are crt, pem or pfx). -

TLS_CERTIFICATE_KEY_PATH: If the certificate is provided in pem/crt format, path to the server private key (TLS_CERTIFICATE_KEY_PATHis ignored if the certificate is provided as a pfx keystore). -

TLS_CERTIFICATE_KEYSTORE_PASSWORD: Password of the server private key (if applicable) or pfx keystore. By default, activeeon.

| The pem/crt/pfx certificate as well as the key have to be placed in the backup folder and their absolute paths need to be provided. See section Required configuration to learn more about the backup folder to be created beforehand. |

LDAP Configuration Properties

In case you would like to set up the LDAP authentication for the ProActive server, you can enable the LDAP configuration in this section.

The given parameter values are only examples and actual parameters must match your LDAP organization. This section will require two accounts:

-

An

LDAP_BIND_LOGIN(given as a LDAP distinguished name) that will be used by the ProActive server to execute LDAP queries (search queries only). -

An

LDAP_TEST_LOGIN(given as a LDAP user login name) that will be used to validate the configuration and guarantee that the connection to the LDAP server is working properly. This test account is only used during the installation.

You can then set up the right LDAP configuration using the following properties:

-

ENABLE_LDAP_AUTHENTICATION: Set to true if you would like to enable the LDAP authentication to the ProActive server. By default, false. -

LDAP_SERVER_URLS: Url of the LDAP server. Multiple urls can be provided separated by commas. Example:ldaps://ldap-server.company.com -

LDAP_USER_SUBTREE: Scope in the LDAP tree where users can be found. Example:ou=users,dc=activeeon,dc=com -

LDAP_GROUP_SUBTREE: Scope in the LDAP tree where groups can be found. Example:ou=groups,dc=activeeon,dc=com -

LDAP_BIND_LOGIN: Login that will be used when binding to the LDAP server (to search for a user and its group). The login should be provided using its LDAP distinguished name. Example:cn=admin,dc=activeeon,dc=com -

LDAP_BIND_PASSWORD: Password associated with the bind login. -

LDAP_USER_FILTER: LDAP filter used to search for a user. %s corresponds to the user login name that will be given as parameter to the filter. Example:(&(objectclass=inetOrgPerson)(uid=%s)) -

LDAP_GROUP_FILTER: LDAP filter used to search for all groups associated with a user. %s corresponds to the user distinguished name found by the user filter. Example:(&(objectclass=groupOfUniqueNames)(uniqueMember=%s)) -

LDAP_TEST_LOGIN: A login name that will be used to test the user and group filter and validate the configuration. The test login name must be provided as a user id. Example:activeeon -

LDAP_TEST_PASSWORD: Password associated with the test user. -

LDAP_ADMIN_ROLES: List of existing LDAP groups that will be associated with a ProActive administrator role. Multiple groups can be provided separated by commas. Example:activeeon-admins -

LDAP_USER_ROLES: List of existing LDAP groups that will be associated with a ProActive standard user role. Multiple groups can be provided separated by commas. Example:activeeon-operators -

LDAP_GROUP_FILTER_USE_UID: If enabled, the group filter will use as parameter the user uid instead of its distinguished name. By default, false. -

LDAP_START_TLS: If enabled, StartTLS mode is activated. By default, false.

PWS Users Configuration Properties

In case you would like to create internal user accounts for the ProActive server, you can enable the users configuration in this section:

-

ENABLE_USERS_CONFIGURATION: Set to true if you would like to create internal ProActive users via login/password. By default, false. -

PWS_USERS: A json formatted array where each object defines a set of LOGIN, PASSWORD, and GROUPS of a ProActive internal user. The list of ProActive predefined GROUPS that can be associated with a user are listed and explained following this link: User Permissions

External Database Configuration Properties

These parameters are only used when ProActive is started with a Postgres database. It is recommended to not change these parameters unless you would like to change the passwords.

-

ENABLE_EXTERNAL_DATABASE_CONFIGURATION: Set to true if you would like to start ProActive with a Postgres database. By default, false. -

POSTGRES_PASSWORD: Password of the Postgres database user. By default, postgres. -

DB_DNS: DNS of the machine hosting the database. By default, it is hosted in the same network as the ProActive server container, hence, the database container name is referenced. -

DB_PORT: Database port. By default, 5432. -

DB_DIALECT: Database dialect to be used for hibernate communication. By default, PostgreSQL94Dialect. -

DB_RM_PASS: Password of thermuser in the RM database. By default, rm. -

DB_SCHEDULER_PASS: Password of thescheduleruser in the SCHEDULER database. By default, scheduler. -

DB_CATALOG_PASS: Password of thecataloguser in the CATALOG database. By default, catalog. -

DB_PCA_PASS: Password of thepcauser in the PCA database. By default, pca. -

DB_NOTIFICATION_PASS: Password of thenotificationuser in the NOTIFICATION database. By default, notification.

2.4.3. Docker Compose Configuration with an Embedded HSQL Database

Once you set the required configuration options in the .env file, you can use now the Docker Compose configuration provided in the installation package (docker-compose-hsqldb.yml) to run the ProActive server container with an embedded HSQL database.

It will start one single container related to the ProActive server.

Click here to display the docker-compose-hsqldb.yml file

version: '2.1'

services:

proactive-server:

restart: on-failure

image: dockerhub.activeeon.com/${DOCKER_IMAGE_PROACTIVE_NAMESPACE}/proactive-scheduler:${DOCKER_IMAGE_PROACTIVE_TAG}

container_name: proactive-scheduler

networks:

- pa-network

ports:

- ${PWS_PORT}:${PWS_PORT}

- ${PWS_PAMR_PORT}:${PWS_PAMR_PORT}

env_file:

- .env

environment:

- PWS_PROTOCOL

- PWS_PORT

volumes:

- proactive-default:/opt/proactive/server/default

- proactive-previous:/opt/proactive/server/previous

- proactive-backup:/opt/proactive/server/backup

healthcheck:

test: [ "CMD", "curl", "-k", "-f", "${PWS_PROTOCOL}://proactive-server:${PWS_PORT}" ]

interval: 30s

timeout: 10s

retries: 30

volumes:

proactive-default:

driver: local

driver_opts:

type: 'none'

o: 'bind'

device: '/opt/proactive/default'

proactive-previous:

driver: local

driver_opts:

type: 'none'

o: 'bind'

device: '/opt/proactive/previous'

proactive-backup:

driver: local

driver_opts:

type: 'none'

o: 'bind'

device: '/opt/proactive/backup'

networks:

pa-network:Required configuration

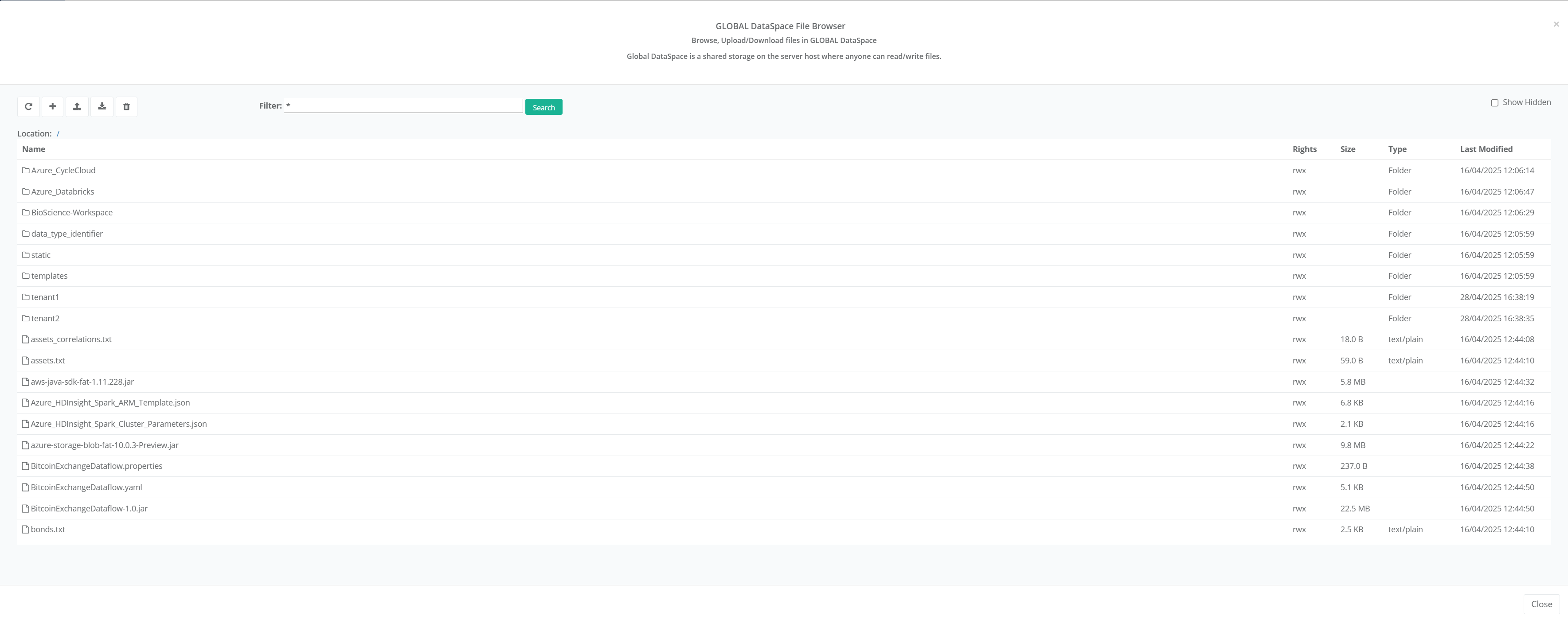

You can use the YAML Docker Compose definition as it is. However, to persist containers’ data,

it is necessary to establish local mounted volumes on the ProActive server and database VMs. Hence, you need to create 3 empty folders on the host machine named default, previous, and backup.

$ mkdir -p /opt/proactive/default /opt/proactive/previous /opt/proactive/backup

Then, you have to configure the absolute paths to these 3 folders in the docker-compose-hsqldb.yml file using the device: property (e.g., in the example above 3 folders are created and configured: /opt/proactive/default, /opt/proactive/previous, and /opt/proactive/backup).

...

volumes:

proactive-default:

...

device: '/opt/proactive/default'

proactive-previous:

...

device: '/opt/proactive/previous'

proactive-backup:

...

device: '/opt/proactive/backup'

These folders will host the current installation files (default), the upgraded one (previous), and all previous upgraded installation files (backup).

2.4.4. Docker Compose Configuration with a PostgreSQL Database

To use the Docker Compose configuration with a PostgreSQL database, ensure that the correct configuration options are set in the .env file beforehand.

Additionally, enable the advanced property ENABLE_EXTERNAL_DATABASE_CONFIGURATION.

Once done, you can then proceed with the Docker Compose configuration provided in the installation package docker-compose-pg.yml to launch the ProActive server container alongside an external PostgreSQL database container.

It will start two containers, one for the server, and one for the database.

Click here to display the docker-compose-pg.yml file

version: '2.1'

services:

proactive-server:

restart: on-failure

image: dockerhub.activeeon.com/${DOCKER_IMAGE_PROACTIVE_NAMESPACE}/proactive-scheduler:${DOCKER_IMAGE_PROACTIVE_TAG}

container_name: proactive-scheduler

networks:

- pa-network

ports:

- ${PWS_PORT}:${PWS_PORT}

- ${PWS_PAMR_PORT}:${PWS_PAMR_PORT}

env_file:

- .env

environment:

- PWS_PROTOCOL

- PWS_PORT

volumes:

- proactive-default:/opt/proactive/server/default

- proactive-previous:/opt/proactive/server/previous

- proactive-backup:/opt/proactive/server/backup

depends_on:

proactive-database:

condition: service_healthy

healthcheck:

test: [ "CMD", "curl", "-k", "-f", "${PWS_PROTOCOL}://proactive-server:${PWS_PORT}" ]

interval: 30s

timeout: 10s

retries: 30

proactive-database:

restart: on-failure

image: dockerhub.activeeon.com/${DOCKER_IMAGE_POSTGRES_NAMESPACE}/postgres-pa:${DOCKER_IMAGE_POSTGRES_TAG}

container_name: proactive-database

networks:

- pa-network

env_file:

- .env

volumes:

- proactive-data:/var/lib/postgresql/data

ports:

- 5432:5432

healthcheck:

test: [ "CMD-SHELL", "pg_isready -U postgres" ]

interval: 10s

timeout: 5s

retries: 5

volumes:

proactive-default:

driver: local

driver_opts:

type: 'none'

o: 'bind'

device: '/opt/proactive/default'

proactive-previous:

driver: local

driver_opts:

type: 'none'

o: 'bind'

device: '/opt/proactive/previous'

proactive-backup:

driver: local

driver_opts:

type: 'none'

o: 'bind'

device: '/opt/proactive/backup'

proactive-data:

driver: local

driver_opts:

type: 'none'

o: 'bind'

device: '/opt/proactive/data'

networks:

pa-network:Required configuration

Same as for a Docker Compose installation using an embedded database (See section persistent volumes with compose), you need to create and link an empty folder to host the Postgres data in addition to the basic three default, previous, and backup folders that host the ProActive installation(s).

$ mkdir -p /opt/proactive/default /opt/proactive/previous /opt/proactive/backup /opt/proactive/data

You then need to specify the folder paths in the Docker Compose configuration file docker-compose-pg.yml:

...

volumes:

proactive-default:

...

device: '/opt/proactive/default'

proactive-previous:

...

device: '/opt/proactive/previous'

proactive-backup:

...

device: '/opt/proactive/backup'

proactive-data:

...

device: '/opt/proactive/data'

2.4.5. Start ProActive Using Docker Compose

Login into the private Activeeon registry:

$ docker login dockerhub.activeeon.com

To start the ProActive server with a default HSQL database, run:

$ docker-compose -f docker-compose-hsqldb.yml up -d

To start the ProActive server with a PostgreSQL database, run:

$ docker-compose -f docker-compose-pg.yml up -d

To monitor the ProActive server startup, run:

$ docker logs -f proactive-scheduler

Wait until the command output shows that ProActive server is started:

.... Loading workflow and utility packages... Packages successfully loaded ProActive started in 246.476727488 seconds

You can then access the ProActive Web Portals at <PWS_PROTOCOL>://<PWS_HOST_ADDRESS>:<PWS_PORT>/. The URL property values are specified in the .env file.

The user can access any of the four portals using the default credentials: admin/<PWS_LOGIN_ADMIN_PASSWORD>. PWS_LOGIN_ADMIN_PASSWORD is, by default, activeeon but you can customize it as well in the .env file.

2.4.6. Database Purge Operation in Docker Compose

In Postgres database, vacuum operations do not by default remove orphaned Large Objects from the database. Identification and removal of these objects is performed by the vacuumlo command.

As the ProActive database contains many large objects, it is essential to apply both vacuumlo and vacuum operations in order to control the disk space usage over time.

The purge operation is automatically integrated and performed within the Postgres container using a cron.daily process.

Though, prior to executing the purge operation, a preliminary configuration step is necessary (this preliminary step is done once for a given ProActive installation).

The following commands allow to prepare the database for the automatic purge operations performed within the Postgres database container.

Stop the ProActive server container:

$ docker-compose stop proactive-server

Run the embedded script for table configuration within the database container:

$ docker exec -it proactive-database /bin/bash /tables-configuration.sh

Start the ProActive server again:

$ docker-compose start proactive-server

The installation of ProActive using Docker Compose is now over ! Your ProActive Scheduler is now running as a set of container services !

2.4.7. Upgrade/Downgrade/Backup ProActive Using Docker Compose

ProActive can be easily upgraded using Docker Compose. We support automatic upgrade, downgrade, and backup of ProActive server installations in Docker.

Docker Compose configuration relies on Docker container volumes to host ProActive installation directories:

-

The

proactive-defaultvolume hosts the current and default installation. -

The

proactive-previousvolume hosts the previous installation after upgrade. -

The

proactive-backupvolume hosts all previous installations after multiple upgrades.

In the following, we are going to explain each procedure.

ProActive upgrade

In order to upgrade the ProActive server to a newer version, you have to edit the .env config file to provide a newer version. The current ProActive version is specified using the DOCKER_IMAGE_PROACTIVE_TAG option in the input installation file.

For example, you can replace DOCKER_IMAGE_PROACTIVE_TAG=13.0.0 by DOCKER_IMAGE_PROACTIVE_TAG=13.0.8 to get a maintenance release of ProActive. It is also applicable for minor and major release versions.

Now that a newer version is provided, you can restart the container:

$ docker-compose -f docker-compose-hsqldb.yml down $ docker-compose -f docker-compose-hsqldb.yml up -d

The new version will be detected and the upgrade process will start at container startup.

In fact, the current version will be automatically placed on the proactive-previous volume and the newer version will be placed on the proactive-default volume and started from there.

ProActive backup

The backup of previous ProActive versions is also automatic and it is triggered on every upgrade. When a new upgrade is started, the proactive-previous volume is checked and if it is not empty, its content is automatically moved to the backup volume proactive-backup.

Afterwards, the upgrade process starts as previously described.

ProActive downgrade

Using Docker Compose we also support one-level automatic downgrade of ProActive installations located in proactive-default and proactive-previous volumes.

To do so, you should provide the previous version of ProActive, as it is specified in the proactive-previous volume, to the DOCKER_IMAGE_PROACTIVE_TAG option in the .env file. This will allow to automatically swap the content of proactive-default and proactive-previous volumes.

ProActive will be then started from the proactive-default volume which will contain the last previous version.

2.5. Automated ProActive Installation with Kubernetes

ProActive could be easily deployed and started on AKS (Azure) or bare-metal (On-premises) Kubernetes clusters. For this purpose, we provide a ready-to-use Kubernetes configuration that enables you running ProActive Workflows and Scheduling (PWS) as a set of pods.

It allows you to start the following pods:

-

ProActive server pod

-

(Optional) ProActive static nodes pods

-

(Optional) ProActive dynamic nodes pods

-

(Optional) ProActive PostgreSQL database pod. The database pod is not started when using an embedded HSQL database.

The Kubernetes installation pulls the ProActive server, node, and database images from the Private Activeeon Docker Registry.

To be able to pull images from the Private Activeeon Docker Registry, a ProActive enterprise licence and access credentials are required. Please contact us at contact@activeeon.com to request access and support.

Here are the installation steps you need to follow in order to have ProActive running in a Kubernetes cluster:

2.5.1. Download the Helm Installation Package

Helm is a Kubernetes deployment tool for automating the creation, packaging, configuration, and deployment of applications and services to Kubernetes clusters.

ProActive uses Helm Charts (i.e., Helm package definition) to create and configure the kubernetes deployments. Thus, Helm must be pre-installed in order to deploy the ProActive pods.

Please refer to Helm’s documentation to get started.

Once Helm is set up properly, proceed to download the Helm installation package of ProActive:

The next step is to configure the Helm Chart by setting up the right configuration in the input values.yml file of the Chart.

2.5.2. Configure the Helm Input File

The values.yaml, which is included in the installation package, is necessary to provide the right configuration values before installing the Chart.

Click here to display the Helm input file

# Default values for Activeeon ProActive Chart

# This is a YAML-formatted file

# It declares variables to be passed into the ProActive Helm templates

# We provide below a summary of the different configuration blocks

# You can search for one of these terms to quickly jump into a specific block

# Configuration Blocks Menu (in order):

# *********** Common Configuration Properties ***************************

# - target:

# - namespace:

# - imageCredentials:

# *********** ProActive Scheduler Server Configuration Properties *******

# - user:

# - protocol:

# - proactiveAdminPassword:

# - housekeepingDays:

# - serverWorkerNodes:

# - ports:

# - database:

# - usersConfiguration:

# - ldapConfiguration:

# *********** Node Sources Configuration Properties *********************

# - nodeSources:

# *********** Kubernetes Resources Configuration Properties *************

# - ingress:

# - persistentVolumes:

# - services:

# - replicas:

# - images:

# - resources:

# - volumeMounts:

# - readinessProbe:

# - livenessProbe:

#***********************************************************************************************************************

#**************************************** Common Configuration Properties **********************************************

#***********************************************************************************************************************

#**************************************** Target Kubernetes cluster ****************************************************

# Supported target Kubernetes clusters: "on-prem" or "aks" clusters

target:

# The 'cluster' property value could be "aks" or "on-prem"

cluster: <cluster>

#**************************************** Namespace Configuration ******************************************************

# Namespace to be used to create all ProActive installation resources

# The namespace will be associated to every resource created by the helm chart

namespace: ae-dev

#******************************** Credentials of the Activeeon Private Docker Registry *********************************

# If you do not have access, please contact contact@activeeon.com to request access

imageCredentials:

registry: dockerhub.activeeon.com

username: <username>

password: <password>

email: support@activeeon.com

#***********************************************************************************************************************

#**************************************** ProActive Scheduler Server Configuration *************************************

#***********************************************************************************************************************

#**************************************** User Starting the ProActive Server *******************************************

# On the Scheduler Pod startup, a new user is created to start the proactive server

# The root user is only used to do the installation

# The server is started by the following user

user:

userName: activeeon

groupName: activeeon

uid: 1000

gid: 1000

#**************************************** ProActive Web Protocol Configuration *****************************************

# Protocol http or https to use to access the ProActive Server Web Portals (default: http)

# The https requires for now some advanced manual configurations

# The http protocol is recommended to be used

protocol: http

#**************************************** ProActive 'admin' Password Configuration *************************************

# The admin user is an administrator of the platform

# This account is used internally for the installation to perform some actions like getting the session id or creating worker nodes, etc.

# It is used as well to access the ProActive web portals, at the login page

# It important to provide a custom password and then access the web portals using the given password

proactiveAdminPassword: activeeon

#**************************************** Jobs Housekeeping Configuration **********************************************

# The Scheduler provides a housekeeping mechanism that periodically removes finished jobs from the Scheduler and Workflow Execution portals

# It also removes associated logs and all job data from the database to save space

# The following property defines the number of days before jobs cleanup (default: 30)

housekeepingDays: 30

#**************************************** Server Worker Nodes Configuration ********************************************

# Defines the number of worker agent nodes to be created on the Scheduler server Pod

# It is recommended to not create too many nodes on the same Pod as the server to avoid performance overhead

serverWorkerNodes: 1

#**************************************** Ports Configuration **********************************************************

# This section describes the ports to be used by this installation

ports:

# Port to use to access the ProActive web portals (default: 8080)

# The web portals will be accessible through the external IP or DNS given by the load balancer service + httpPort

httpPort: &httpPort 8080

# Port to use for PAMR communication (default: 33647)

# It is recommended to use this default port

pamrPort: &pamrPort 33647

# Port to use to access the ProActive web portals on an on-prem installation (without a LoadBalancer)

# The web portals will be accessible through the cluster IP or DNS + nodePort

nodePort: &nodePort 32000

#**************************************** Database Configuration *******************************************************

# Database configuration properties

database:

# Enable or disable using an external database

# If set to 'true', postgres database is used, 'false' sets the default embedded HSQL database

enabled: true

# Postgres database configuration

# The next properties are considered only if the external database is used

dialect: org.hibernate.dialect.PostgreSQL94Dialect

# Credentials, used only for postgres configuration

# They define the passwords of the catalog, notification, pca, rm, and scheduler databases

passwords:

catalogPassword: catalog

notificationPassword: notification

pcaPassword: pca

rmPassword: rm

schedulerPassword: scheduler

postgresPassword: postgres

#**************************************** File Authentication Configuration ********************************************

# This is the default authentication method provided by ProActive

# The login, password, and groups could be provided in a JSON format

# For more information about the groups and other authentication methods, visit: https://doc.activeeon.com/latest/admin/ProActiveAdminGuide.html#_file

usersConfiguration:

addUsersConfiguration: true

users: [{ "LOGIN": "test_admin", "PASSWORD": "activeeon", "GROUPS": "scheduleradmins,rmcoreadmins" },

{ "LOGIN": "test_user", "PASSWORD": "activeeon", "GROUPS": "user" }]

#**************************************** LDAP Authentication Configuration ********************************************

# In addition to file based authentication, we provide a second authentication method, the LDAP authentication

ldapConfiguration:

# set ‘enableLdapConfiguration’ to true if you want to activate the ldap authentication in addition to the file based authentication

enableLdapConfiguration: false

# url of the ldap server. Multiple urls can be provided separated by commas

serverUrls: "<serverUrls>"

# Scope in the LDAP tree where users can be found

userSubtree: "<userSubtree>"

# Scope in the LDAP tree where groups can be found

groupSubtree: "<groupSubtree>"

# login that will be used when binding to the ldap server (to search for a user and its group). The login should be provided using its ldap distinguished name

bindLogin: "<bindLogin>"

# encrypted password associated with the bind login

bindPassword: "<bindPassword>"

# ldap filter used to search for a user. %s corresponds to the user login name that will be given as parameter to the filter

userFilter: "<userFilter>"

# ldap filter used to search for all groups associated with a user. %s corresponds to the user distinguished name found by the user filter

groupFilter: "<groupFilter>"

# a login name that will be used to test the user and group filter and validate the configuration. The test login name must be provided as a user id

testLogin: "<testLogin>"

# encrypted password associated with the test user

testPassword: "<testPassword>"

# list of existing ldap groups that will be associated with a ProActive administrator role. Multiple groups can be provided separated by commas

adminRoles: "<adminRoles>"

# list of existing ldap groups that will be associated with a ProActive standard user role. Multiple groups can be provided separated by commas

userRoles: "<userRoles>"

# If enabled, the group filter will use as parameter the user uid instead of its distinguished name

groupFilterUseUid: false

# If enabled, StartTLS mode is activated

startTls: false

#***********************************************************************************************************************

#**************************************** Node Sources Configuration ***************************************************

#***********************************************************************************************************************

# The following section describe the worker pods to be created following the Scheduler server startup

nodeSources:

# The 'dynamicK8s' configuration defines the worker nodes to be created dynamically depending on the workload

dynamicK8s:

# True, to set up a Dynamic Kubernetes Node Source at Server startup (false if not)

enabled: true

# Name of the Dynamic Kubernetes Node Source

name: Dynamic-Kube-Linux-Nodes

# Minimum number of nodes to be deployed by the node source

minNodes: 4

# Maximum number of nodes to be deployed

maxNodes: 15

# the 'staticLocal' configuration defines a fixed number of worker nodes to be created following the server startup

staticLocal:

# True, to set up a Static Kubernetes Node Source at Server startup (false if not)

enabled: true

# Name of the Static Kubernetes Node Source

name: Static-Kube-Linux-Nodes

# Number of nodes to be deployed

numberNodes: 4

#***********************************************************************************************************************

#**************************************** Kubernetes Resources Configuration *******************************************

#***********************************************************************************************************************

#**************************************** Ingress Configuration ********************************************************

ingress:

# Specifies whether the Ingress resource should be enabled (true or false)

enabled: false

# Specifies the Ingress class name to be used. Use 'kubectl get ingressclass' to check the available ingress classes in your cluster

ingressClassName: public

# Specifies the hostname to be used by the Ingress service

hostname: <hostname>

# To configure the TLS certificate for the https mode. We provide three ways:

# - Provided certificate: a fullchain + private key have to be provided

# - Self Signed certificate using Cert Manager

# - Let's Encrypt certificate using Cert Manager

# You have to enable only one of these certification method exclusively

providedCertificate:

# Specifies whether a provided certificate should be used (true or false)

enabled: true

tls:

# The actual content of the TLS certificate file (encoded in Base64). Use for example 'cat fullchain.pem | base64' to get the encrypted certificate file content

crt: <fullchain.pem>

# The actual content of the TLS private key file (encoded in Base64). Use for example 'cat privkey.pem | base64' to get the encrypted private key file content

key: <privkey.pem>

clusterIssuer:

selfSigned:

# Specifies whether a self-signed certificate should be used (true or false)

enabled: false

letsEncrypt:

# Specifies whether Let's Encrypt should be used for obtaining certificates (true or false)

enabled: false

# The Let's Encrypt ACME server URL

server: https://acme-v02.api.letsencrypt.org/directory

# The email address to be associated with the Let's Encrypt account

email: test@test.com

#**************************************** Persistent Volumes Configuration *********************************************

persistentVolumes:

# The following properties define the required settings to create local Node persistent volumes

# The Local Node Volume will host the Scheduler Server installation files and the external database data

localVolumeConfig:

# Describes the storage class configuration

storageClass:

# Specifies the provisioner for the storage class as "kubernetes.io/no-provisioner."

provisioner: kubernetes.io/no-provisioner

# Sets the volume binding mode to "Immediate."

volumeBindingMode: Immediate

# Contains settings related to scheduling and placement of the persistent volumes.

scheduler:

persistentVolume:

# Specifies the storage capacity for the persistent volume

storage: 20Gi

# Defines the access modes for the persistent volume as ReadWriteMany

accessModes: ReadWriteMany

# Sets the reclaim policy for the persistent volume to "Delete."

persistentVolumeReclaimPolicy: Delete

# Specifies the local path for the persistent volume

localPath: <localPath>

#Defines node affinity settings

nodeAffinity:

# Specifies the node name

nodeName: <nodeName>

persistentVolumeClaim:

# Specifies the access modes for the persistent volume claim as ReadWriteMany

accessModes: ReadWriteMany

# Specifies the storage capacity for the persistent volume claim

storage: 20Gi

# Contains settings related to the database and placement of the persistent volumes

# It is used only when the postgres database is enabled

database:

persistentVolume:

storage: 20Gi

accessModes: ReadWriteMany

persistentVolumeReclaimPolicy: Delete

localPath: <localPath>

nodeAffinity:

nodeName: <nodeName>

persistentVolumeClaim:

accessModes: ReadWriteMany

storage: 20Gi

# The following properties define the required settings to create the Azure Disk for data persistence

# The Azure Disk will host the Scheduler Server installation files and the external database data

aksDiskVolumeConfig:

# The 'StorageClass' defines different classes of storage and their associated provisioning policies in a cluster

# It allows to abstract the underlying storage infrastructure and provide a way to dynamically provision and manage persistent volumes (PVs)

storageClass:

# The 'provisioner' specifies the CSI (Container Storage Interface) driver that is responsible for provisioning the storage

# In this case, 'disk.csi.azure.com' is the provisioner for Azure Disk storage

provisioner: disk.csi.azure.com

# The 'reclaimPolicy' determines what happens to the underlying Azure Disk resource when the associated PersistentVolume (PV) is deleted

# The 'Delete' policy means that the Azure Disk will be deleted when the PV is released

reclaimPolicy: Delete

# The 'allowVolumeExpansion' parameter indicates whether the storage volume associated with this StorageClass can be resized after creation

# Setting it to 'true' allows for volume expansion

allowVolumeExpansion: true

# The 'storageaccounttype' specifies the type of Azure storage account to be used for provisioning the Azure Disk

# In this case, 'StandardSSD_LRS' stands for Standard Solid State Drive (SSD) with Locally Redundant Storage (LRS)

storageaccounttype: StandardSSD_LRS

# The 'kind' specifies the type of the storage class

# In this case, it's set to 'Managed', which implies that the Azure Disk will be managed by the underlying cloud provider (Azure) rather than being manually managed

kind: Managed

# The scheduler persistent volume claim for Azure Disk provisioning

# For memory, PersistentVolumeClaim (PVC) is used to request and allocate storage resources for applications running within the cluster

scheduler:

persistentVolumeClaim:

# The 'storage' property specifies the desired storage capacity for the PVC