
1. Overview

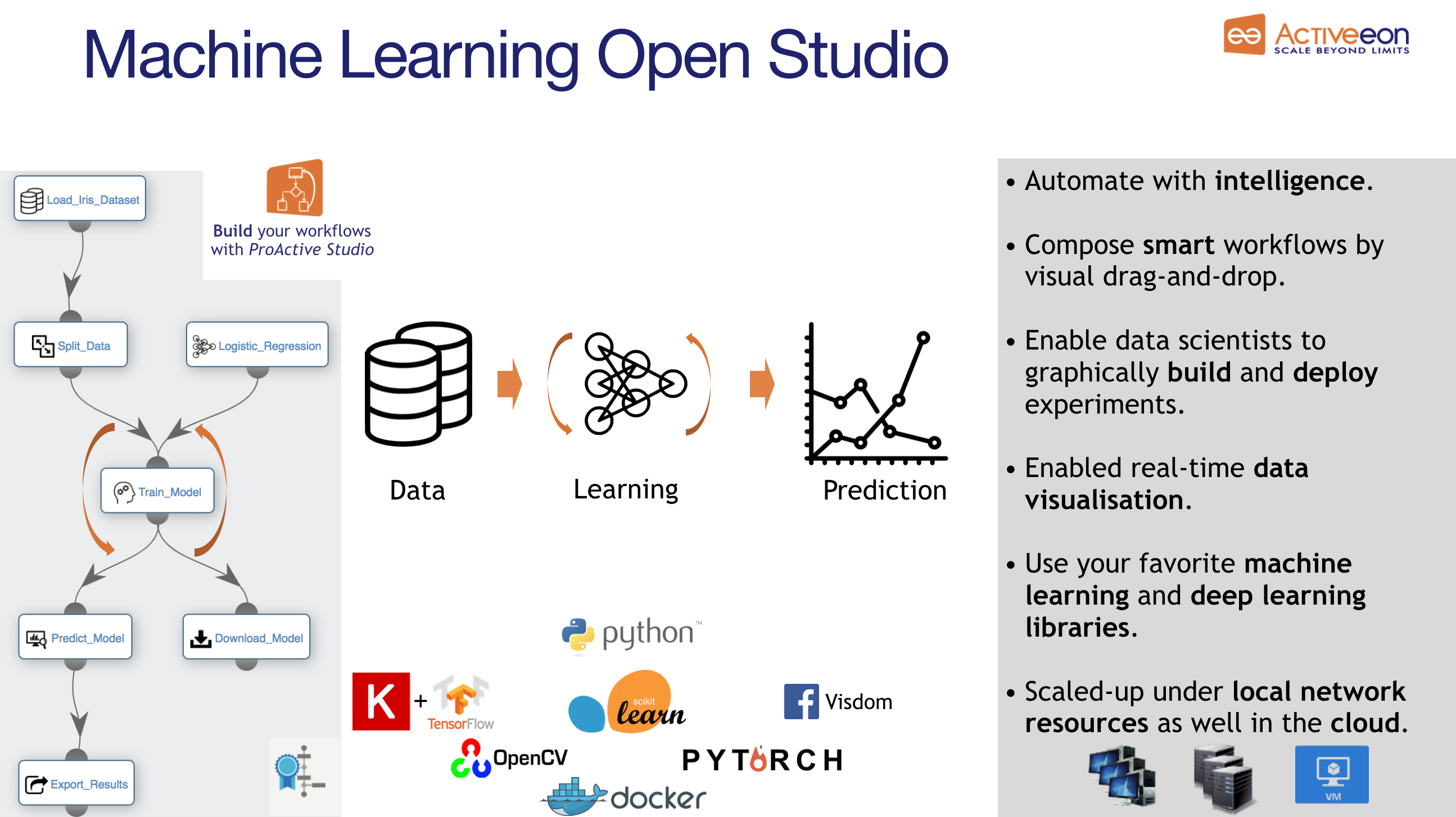

1.1. What is Machine Learning Open Studio (MLOS)?

Machine Learning Open Studio (MLOS) is an interactive graphical interface that enables developers and data scientists to quickly and easily build, train, and deploy machine learning models at any scale. It provides a rich set of generic machine learning tasks that can be connected together to build basic or complex machine learning workflows for use cases such as fraud detection, text analysis, online offer recommendations, prediction of equipment failures, and facial expression analysis.

Machine Learning Open Studio is open source. It makes it easy to parallelize tasks and to run them on resources that match given constraints (multi-CPU, GPU, data locality, libraries).

The Machine Learning Open Studio can be tried for free online here.

1.2. Glossary

The following terms are used throughout the documentation:

- ProActive Workflows & Scheduling

  The full distribution of ProActive for Workflows & Scheduling. It contains the ProActive Scheduler server, the REST and Web interfaces, and the command line tools. It is the commercial product name.

- ProActive Scheduler

  Can refer to any of the following:

  - A complete set of ProActive components.

  - An archive that contains a released version of ProActive components, for example activeeon_enterprise-pca_server-OS-ARCH-VERSION.zip.

  - A set of server-side ProActive components installed and running on a Server Host.

- Resource Manager

  ProActive component that manages ProActive Nodes running on Compute Hosts.

- Scheduler

  ProActive component that accepts Jobs from users, orders the constituent Tasks according to priority and resource availability, and eventually executes them on the resources (ProActive Nodes) provided by the Resource Manager.

| Please note the difference between Scheduler and ProActive Scheduler. |

- REST API

  ProActive component that provides a RESTful API for the Resource Manager, the Scheduler and the Catalog.

- Resource Manager Web Interface

  ProActive component that provides a web interface to the Resource Manager.

- Scheduler Web Interface

  ProActive component that provides a web interface to the Scheduler.

- Workflow Studio

  ProActive component that provides a web interface for designing Workflows.

- Job Planner Portal

  ProActive component that provides a web interface for planning Workflows and creating Calendar Definitions.

- Job Planner

  A ProActive component providing advanced scheduling options for Workflows.

- Bucket

  ProActive notion used with the Catalog to refer to a specific collection of ProActive Objects and in particular ProActive Workflows.

- Server Host

  The machine on which ProActive Scheduler is installed.

- SCHEDULER_ADDRESS

  The IP address of the Server Host.

- ProActive Node

  One ProActive Node can execute one Task at a time. This concept is often tied to the number of cores available on a Compute Host. We assume a Task consumes one core (more is possible), so on a 4-core machine you might want to run 4 ProActive Nodes. One (by default) or more ProActive Nodes can be executed in a Java process on the Compute Hosts and will communicate with the ProActive Scheduler to execute Tasks.

- Compute Host

  Any machine that is meant to provide computational resources to be managed by the ProActive Scheduler. One or more ProActive Nodes need to be running on the machine for it to be managed by the ProActive Scheduler.

- PROACTIVE_HOME

  The path to the extracted archive of a ProActive Scheduler release, either on the Server Host or on a Compute Host.

- Workflow

  User-defined representation of a distributed computation. Consists of the definitions of one or more Tasks and their dependencies.

- Generic Information

  Additional information attached to Workflows.

- Job

  An instance of a Workflow submitted to the ProActive Scheduler. Sometimes also used as a synonym for Workflow.

- Job Icon

  An icon representing the Job and displayed in portals. The Job Icon is defined by the Generic Information workflow.icon.

- Task

  A unit of computation handled by ProActive Scheduler. Both Workflows and Jobs are made of Tasks.

- Task Icon

  An icon representing the Task and displayed in the Studio portal. The Task Icon is defined by the Task Generic Information task.icon.

- ProActive Agent

  A daemon installed on a Compute Host that starts and stops ProActive Nodes according to a schedule, restarts ProActive Nodes in case of failure, and enforces resource limits for the Tasks.

2. Get Started

To submit your first Machine Learning (ML) workflow to ProActive Scheduler, install it in your environment (default credentials: admin/admin) or just use our demo platform try.activeeon.com.

ProActive Scheduler provides comprehensive interfaces that allow you to:

- Create workflows using ProActive Workflow Studio
- Submit workflows, monitor their execution and retrieve the task results using ProActive Scheduler Portal
- Add resources and monitor them using ProActive Resource Manager Portal
- Version and share various objects using ProActive Catalog Portal
- Provide an end-user workflow submission interface using Workflow Automation Portal
- Generate metrics of multiple job executions using Job Analytics Portal
- Plan workflow executions over time using Job Planner Portal
- Add services using Cloud Automation Portal
- Perform event-based scheduling using Cloud Watch Portal
- Control manual workflow validation steps using Notification Portal

We also provide a REST API and command line interfaces for advanced users.

3. Create a First Predictive Solution

Suppose you need to predict house prices based on the following information (features) provided by the estate agency:

- CRIM: per capita crime rate by town
- ZN: proportion of residential land zoned for lots over 25,000 sq. ft.
- INDUS: proportion of non-retail business acres per town
- CHAS: Charles River dummy variable
- NOX: nitric oxides concentration
- RM: average number of rooms per dwelling
- AGE: proportion of owner-occupied units built prior to 1940
- DIS: weighted distances to five Boston employment centres
- RAD: index of accessibility to radial highways
- TAX: full-value property-tax rate per $10,000
- PTRATIO: pupil-teacher ratio by town
- B: 1000(Bk - 0.63)^2 where Bk is the proportion of blacks by town
- LSTAT: % lower status of the population
- MDEV: median value of owner-occupied homes in $1000s

Predicting house prices is a complex problem, but we can simplify it a bit for this step-by-step example. We’ll show you how you can easily create a predictive analytics solution using MLOS.

3.1. Manage the Canvas

To use MLOS, you need to set the Machine Learning bucket as the main catalog in the ProActive Workflow Studio. This bucket contains a set of generic tasks that enable you to upload and prepare data, train a model, and test it.

- Open the ProActive Workflow Studio home page.
- Create a new workflow.
- Fill in the Workflow General Parameters.
- Click the Catalog menu, then Set Bucket as Main Catalog Menu, and select the machine-learning bucket. This can also be achieved by appending /templates/machine-learning to the URL of the ProActive Workflow Studio.
- Click the Catalog menu, then Add Bucket as Extra Catalog Menu, and select the data-visualization bucket.
- Organize your canvas.

| Set Bucket as Main Catalog Menu allows the user to change the bucket used to get workflows from the Catalog in the studio. By selecting a bucket, the user can change the content of the main Catalog menu (named as the current bucket) to get workflows from another bucket as templates. |

3.2. Upload Data

To upload data into the Workflow, you need a dataset stored in a CSV file.

- Once your dataset has been converted to CSV format, upload it to a cloud storage service, for example Amazon S3. For this tutorial, we will use the Boston house prices dataset available at this link: https://s3.eu-west-2.amazonaws.com/activeeon-public/datasets/boston-houses-prices.csv
- Drag and drop the Import_Data task from the machine-learning bucket into the ProActive Workflow Studio.
- Click on the task, then click General Parameters on the left to change the default parameters of this task.
- Set the FILE_URL variable to the S3 link of your dataset.
- Set the other parameters according to your dataset format.

This task loads the data into the workflow so that we can use it for model training and testing.

If you want to skip these steps, you can directly use the Load_Boston_Dataset Task by a simple drag and drop.

3.3. Prepare Data

This step consists of preparing the data for the training and testing of the predictive model. In this example, we will simply split our dataset into two separate datasets: one for training and one for testing.

To do this, we use the Split_Data Task in the machine-learning bucket.

- Drag and drop the Split_Data Task into the canvas, and connect it to the Import_Data or Load_Boston_Dataset Task.
- By default, the ratio is 0.7, which means that 70% of the dataset will be used for training the model and 30% for testing it.
- Click the Split_Data Task and set the TRAIN_SIZE variable to 0.6.
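To make the effect of the ratio concrete, here is a minimal sketch of a ratio-based split in plain Python. It is not the Split_Data implementation; the `split_rows` helper, the shuffling and the fixed seed are assumptions chosen for illustration.

```python
import random


def split_rows(rows, train_size=0.7, seed=0):
    """Shuffle the rows, then split them into (train, test) by ratio.

    Hypothetical helper: it only illustrates what a TRAIN_SIZE ratio means.
    """
    if not 0.0 < train_size < 1.0:
        raise ValueError("train_size must be strictly between 0 and 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_size))
    return shuffled[:cut], shuffled[cut:]


rows = list(range(100))  # stand-in for 100 dataset rows
train, test = split_rows(rows, train_size=0.6)
print(len(train), len(test))  # 60 40
```

With a ratio of 0.6, 60% of the rows go to training and the remaining 40% to testing.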

3.4. Train a Predictive Model

Using MLOS, you can easily create different ML models in a single experiment and compare their results. This type of experimentation helps you find the best solution for your problem.

You can also enrich the machine-learning bucket by adding new ML algorithms and publish or customize an existing task according to your requirements as the tasks are open source.

To change the code of a task, click on it and then click Task Implementation. You can also add new variables to a specific task.

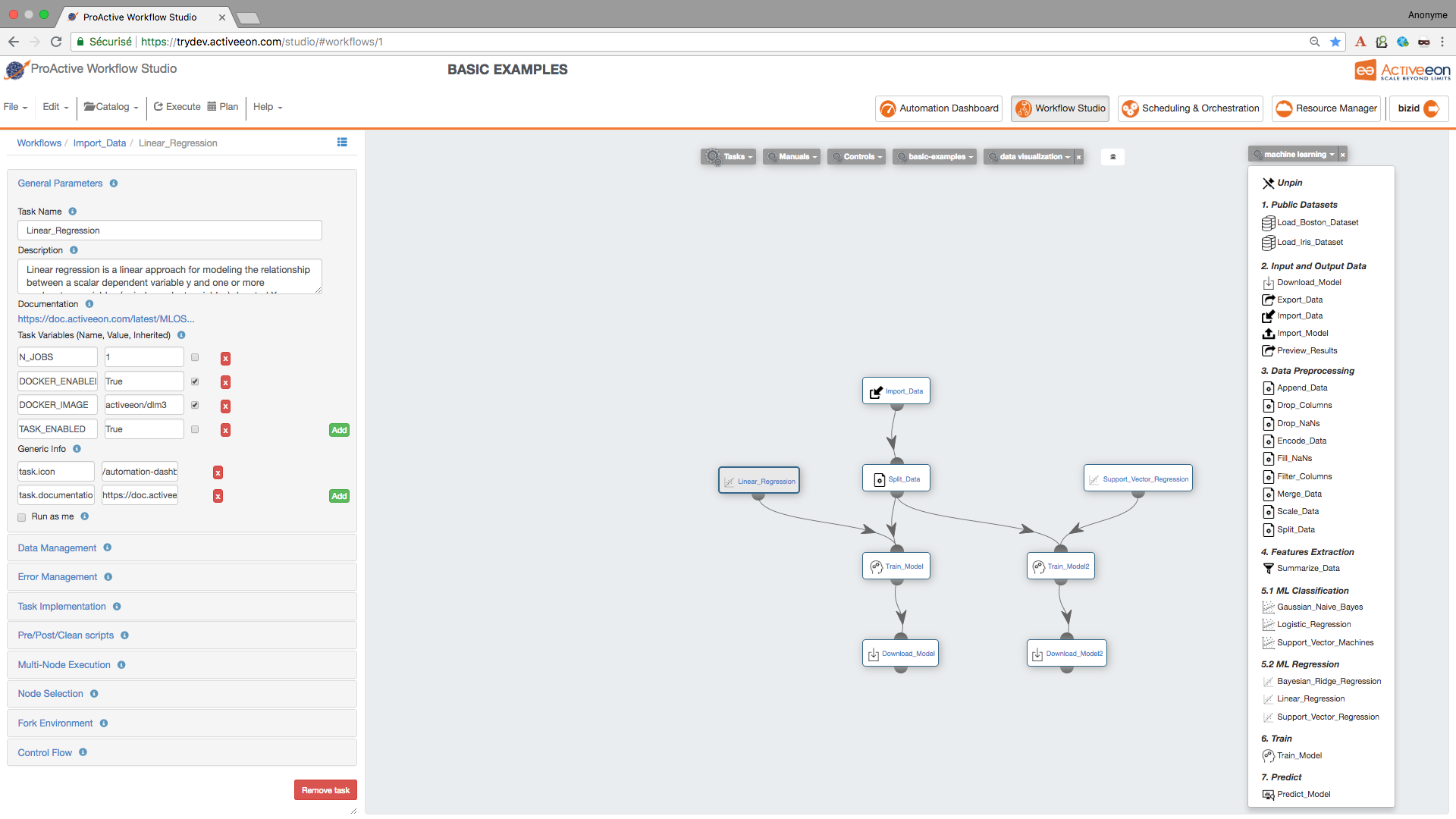

In this step, we will create two different types of models and then compare their scores to decide which algorithm is most suitable for our problem. The Boston dataset used for this example requires predicting the price of houses, which is a continuous label. We therefore need to solve a regression problem.

To solve this problem, we have to choose a regression algorithm to train the predictive model. The regression algorithms available in MLOS are listed in the ML Regression section of the machine-learning bucket.

For this example, we will use the Linear_Regression Task and the Support_Vector_Regression Task.

- Find the Linear_Regression Task and the Support_Vector_Regression Task and drag them into the canvas.
- Find the Train_Model Task, drag it twice into the canvas, and set its LABEL_COLUMN variable to LABEL.
- Connect the Split_Data Task to the two Train_Model Tasks in order to give them access to the training data. Then connect the Linear_Regression Task to the first Train_Model Task and the Support_Vector_Regression Task to the second Train_Model Task.
- To be able to download the model learned by each algorithm, drag two Download_Model Tasks into the canvas and connect one to each Train_Model Task.

3.5. Test the Predictive Model

To evaluate the two learned predictive models, we will use the testing data that was separated out by the Split_Data Task to score our trained models. We can then compare the results of the two models to see which generated better results.

- Find the Predict_Model Task, drag and drop it twice into the canvas, and set its LABEL_COLUMN variable to LABEL.
- Connect the first Predict_Model Task to the Train_Model Task that is connected to the Support_Vector_Regression Task.
- Connect the second Predict_Model Task to the Train_Model Task that is connected to the Linear_Regression Task.
- Connect the second Predict_Model Task to the Split_Data Task.
- Find the Preview_Results Task in the ML bucket and drag and drop it twice into the canvas.
- Connect each Preview_Results Task to a Predict_Model Task.
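Once both sets of predictions are available, comparing the two models comes down to computing a score for each on the same test labels. The sketch below assumes mean squared error and R² as the metrics; this section does not state which scores MLOS reports, and the numbers are made up for illustration.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between labels and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 minus residual over total variance."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Made-up values for illustration only; not results from the Boston dataset.
y_test = [24.0, 21.6, 34.7, 33.4]
predictions = {
    "model_a": [25.0, 22.0, 33.0, 32.0],  # hypothetical predictions
    "model_b": [28.0, 18.0, 30.0, 36.0],  # hypothetical predictions
}

scores = {
    name: (mean_squared_error(y_test, pred), r2_score(y_test, pred))
    for name, pred in predictions.items()
}
best = min(scores, key=lambda name: scores[name][0])  # lowest error wins
print(best)  # model_a
```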

| If you have a pickled file (.pkl) containing a predictive model that you trained on another platform and you need to test it in MLOS, you can load it using the Import_Model Task. |

3.6. Run the Experiment and Preview the Results

Now that the workflow is complete, execute it as follows:

- Click the Execute button on the menu to run the workflow.
- Click the Scheduling & Orchestration button to track the workflow execution progress.
- Click the Visualization tab and track the progress of your workflow execution (a green check mark appears on each Task when its execution is finished).
- Visualize the output logs by clicking on the Output tab and checking the Streaming check box.
- Click the Tasks tab, select a Preview_Results task and click on the Preview tab. Then click either Open in browser to preview the results in your browser or Save as file to download the results locally.

4. AutoML

The auto-ml-optimization bucket contains the Multi_Tuners_AutoML workflow that can be easily used to find the operating parameters for any system whose performance can be measured as a function of adjustable parameters.

It is an estimator that minimizes the posterior expected value of a loss function.

This bucket also comes with a set of example workflows that demonstrate how to optimize mathematical functions, MLOS workflows, and machine/deep learning algorithms from scripts using AutoML tuners.

4.1. AutoML Variants

All Multi_Tuners_AutoML variants propose six algorithms for hyperparameters' optimization. The choice of the sampling/search strategy depends strongly on the tackled problem. Three variants of AutoML are proposed. Each variant comes with specific pipelines (parallel or sequential) and visualization tools (Visdom or Tensorboard) as described in the subsections below (Multi_Tuners_AutoML_V1, Multi_Tuners_AutoML_V2, Multi_Tuners_AutoML_V3).

Common variables:

| Variable name | Description | Type |
|---|---|---|
|  | Specifies the tuner algorithm that will be used for hyperparameter optimization. | List(Bayes, Grid, Random, QuasiRandom, CMAES, MOCMAES) default=Bayes |
|  | Specifies the path of the workflow that we need to optimize. | String [default=auto-ml-optimization/Himmelblau_Function] |
|  | Specifies the representation of the search space, which has to be defined using dictionaries. | JSON format (see search space representation below) |
|  | Specifies the maximum number of iterations. | Int (default=2) |
|  | Specifies the number of samples per iteration. | Int (default=2) |
|  | If True, the workflow will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries should be installed on the different nodes that will be used. | Boolean (default=True) |

How to define the search space:

This subsection describes common building blocks to define a search space:

- uniform: Uniform continuous distribution.
- quantized_uniform: Uniform discrete distribution.
- log: Logarithmic uniform continuous distribution.
- quantized_log: Logarithmic uniform discrete distribution.
- choice: Uniform choice distribution between non-numeric samples.
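The following sketch illustrates what each building block means by drawing one sample per hyperparameter. The tuple-based notation, the `sample` helper and the hyperparameter names are hypothetical and only serve the illustration; they are not the JSON search space format expected by Multi_Tuners_AutoML.

```python
import math
import random


def sample(spec, rng):
    """Draw one value from a (hypothetical) search space specification."""
    kind = spec[0]
    if kind == "uniform":              # continuous value in [low, high]
        _, low, high = spec
        return rng.uniform(low, high)
    if kind == "quantized_uniform":    # low, low + step, ..., up to high
        _, low, high, step = spec
        n_steps = (high - low) // step
        return low + step * rng.randint(0, n_steps)
    if kind == "log":                  # uniform in log space
        _, low, high = spec
        return math.exp(rng.uniform(math.log(low), math.log(high)))
    if kind == "quantized_log":        # log-uniform, rounded to a step
        _, low, high, step = spec
        value = math.exp(rng.uniform(math.log(low), math.log(high)))
        return min(high, max(low, round(value / step) * step))
    if kind == "choice":               # one of several categorical options
        return rng.choice(spec[1])
    raise ValueError(f"unknown distribution: {kind}")


# Hypothetical hyperparameters, one per building block.
space = {
    "x": ("uniform", -5, 5),
    "batch_size": ("quantized_uniform", 16, 128, 16),
    "learning_rate": ("log", 1e-4, 1e-1),
    "n_estimators": ("quantized_log", 1, 1000, 1),
    "optimizer": ("choice", ["adam", "sgd", "rmsprop"]),
}

rng = random.Random(42)
trial = {name: sample(spec, rng) for name, spec in space.items()}
print(trial)
```

Logarithmic distributions are typically useful for parameters such as learning rates, where values spanning several orders of magnitude should be explored evenly.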

Which tuner algorithm to choose?

The choice of the tuner depends on the following aspects:

- Time required to evaluate the model.
- Number of hyperparameters to optimize.
- Type of variable.
- The size of the search space.

In the following, we briefly describe the different tuners proposed by the Multi_Tuners_AutoML workflow:

- Grid sampling applies when all variables are discrete and the number of possibilities is low. A grid search is a naive approach that simply tries all possibilities, which makes the search extremely long even for medium-sized problems.
- Random sampling is an alternative to grid search when the number of discrete parameters to optimize and the time required for each evaluation are high. Random search picks points randomly from the configuration space.
- QuasiRandom sampling ensures a much more uniform exploration of the search space than traditional pseudo-random sampling. Thus, quasi-random sampling is preferable when not all variables are discrete, the number of dimensions is high, and the time required to evaluate a solution is high.
- Bayes search models the search space using Gaussian process regression, which provides an estimate of the loss function, and of the uncertainty on that estimate, at every point of the search space. Modeling the search space suffers from the curse of dimensionality, which makes this method more suitable when the number of dimensions is low.
- CMAES search is one of the most powerful black-box optimization algorithms. However, it requires a significant number of model evaluations (in the order of 10 to 50 times the number of dimensions) to converge to an optimal solution. This search method is more suitable when the time required for a model evaluation is relatively low.
- MOCMAES search is a multi-objective algorithm that optimizes multiple tradeoffs simultaneously. To do that, MOCMAES employs a number of CMAES algorithms.
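To contrast the two simplest strategies described above, here is a minimal sketch of grid search and random search on a toy objective. The `objective` function and both helpers are assumptions made for illustration; they do not reproduce the tuners used by Multi_Tuners_AutoML.

```python
import itertools
import random


def objective(x, y):
    """Toy loss with its minimum at (1, -2); stands in for a workflow result."""
    return (x - 1) ** 2 + (y + 2) ** 2


def grid_search(values_x, values_y):
    """Evaluate every combination of the discrete values and keep the best."""
    return min(itertools.product(values_x, values_y),
               key=lambda point: objective(*point))


def random_search(n_trials, low, high, seed=0):
    """Evaluate n_trials points drawn uniformly at random and keep the best."""
    rng = random.Random(seed)
    candidates = [(rng.uniform(low, high), rng.uniform(low, high))
                  for _ in range(n_trials)]
    return min(candidates, key=lambda point: objective(*point))


grid_best = grid_search(range(-3, 4), range(-3, 4))  # 7 x 7 = 49 evaluations
random_best = random_search(49, -3.0, 3.0)           # same evaluation budget
print(grid_best)  # (1, -2)
print(random_best, objective(*random_best))
```

The grid cost grows multiplicatively with each added parameter (here 7 values per parameter), whereas the random search budget is chosen independently of the number of dimensions.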

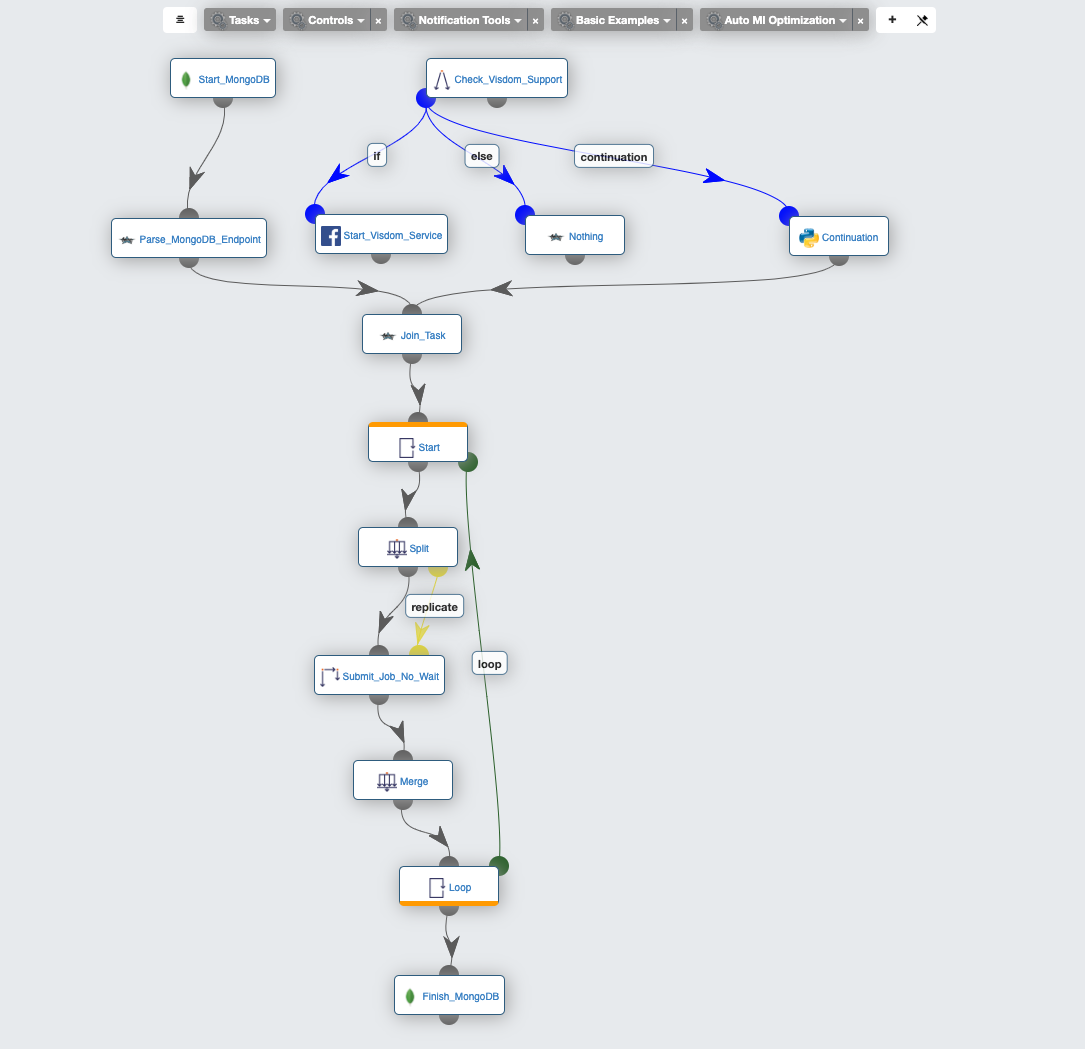

Multi_Tuners_AutoML_V1

Workflow Overview: first generates the chosen hyperparameter values for each trial. Then, it executes the different trials in parallel. Finally, it plots the results using Visdom.

Specific variables:

| Variable name | Description | Type |
|---|---|---|
|  | If True, the results will be plotted in real time in the Visdom dashboard. | Boolean (default=True) |

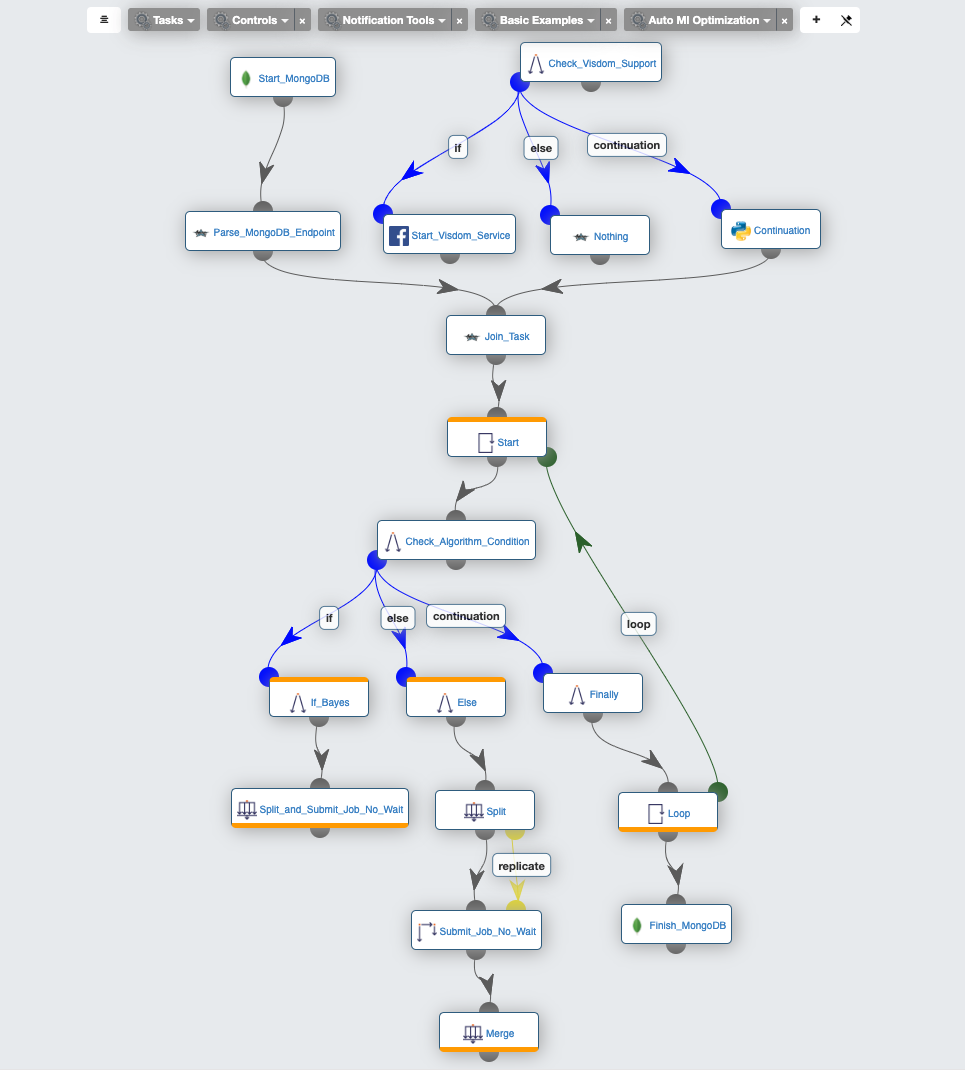

Multi_Tuners_AutoML_V2

Workflow Overview: considers two execution pipelines (parallel and sequential).

For all the tuning algorithms except the Bayesian one, the parallel pipeline is executed in the same way as in Multi_Tuners_AutoML_V1. For the Bayesian algorithm, the sequential pipeline is executed. This pipeline generates the hyperparameter values and executes the associated trials sequentially.

The results can be visualized using Visdom.

Specific variables:

| Variable name | Description | Type |
|---|---|---|
|  | If True, the results will be plotted in real time in the Visdom dashboard. | Boolean (default=True) |

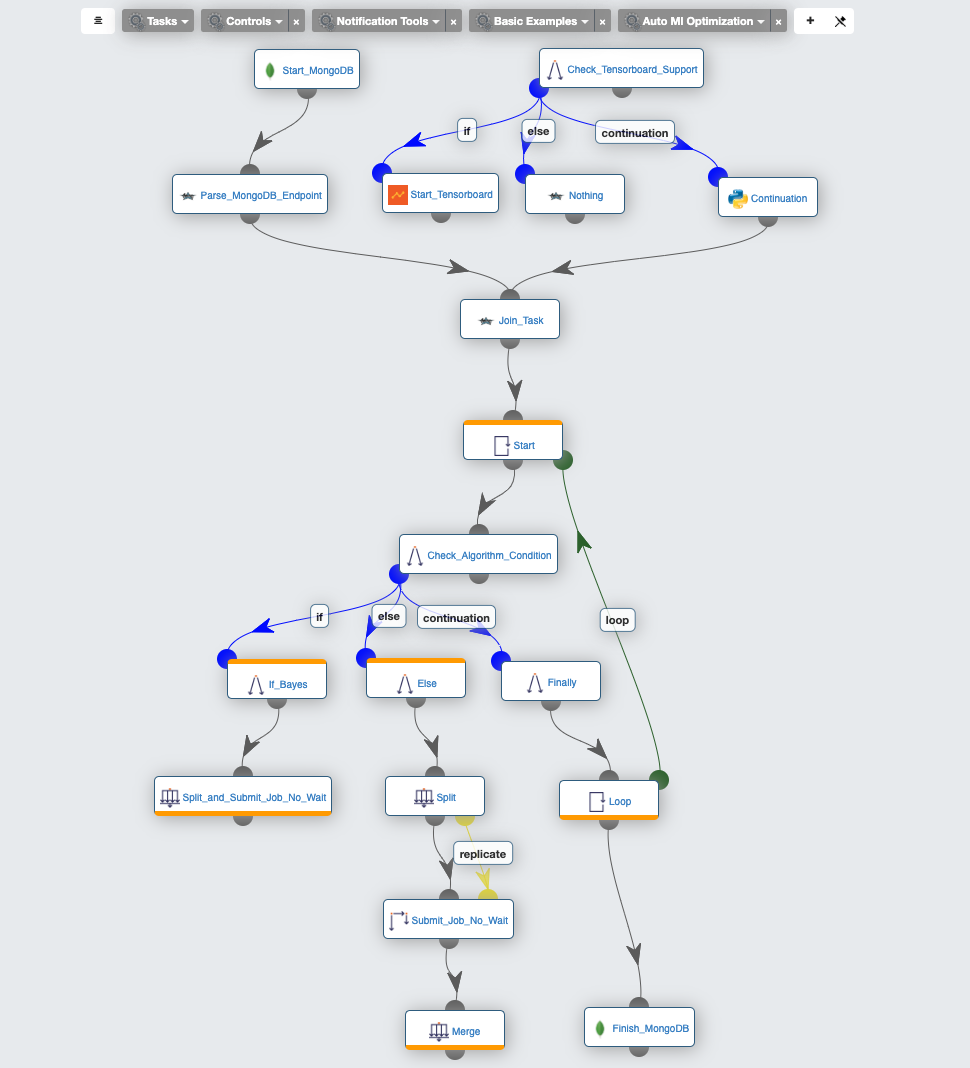

Multi_Tuners_AutoML_V3

Workflow Overview: similar to Multi_Tuners_AutoML_V2. However, this version uses Tensorboard to visualize the results.

Specific variables:

| Variable name | Description | Type |
|---|---|---|
|  | The path of the shared folder in which the Tensorboard logs will be saved. | String (default=/shared/$INSTANCE_NAME) |
|  | The path of the folder in which the Tensorboard logs will be saved in the Docker container. | String (default=/graphs/$INSTANCE_NAME) |
|  | The instance name of the Tensorboard server that is/will be launched. | String (default=tensorboard-server) |
|  | If True, the results will be plotted in real time in the Tensorboard dashboard. | Boolean (default=True) |

4.2. Objective ML Examples

The following workflows represent some machine learning and deep learning algorithms that can be optimized:

Diabetics Detection Using Logistic Regression: trains a logistic regression model using MLOS machine learning generic tasks in order to detect diabetics.

CIFAR10 CNN: trains a simple deep CNN on the CIFAR10 image dataset using the Keras library.

CIFAR100 CNN: trains a simple deep CNN on the CIFAR100 image dataset using the Keras library.

Image Object Detection: trains a YOLO model on the COCO dataset using MLOS deep learning generic tasks.

Multiple SVM Models: trains multiple types of SVM models that can be optimized using AutoML.

4.3. Objective Function Examples

The following workflows represent some mathematical functions that can be optimized by the AutoML tuners.

Himmelblau_Function: a multi-modal function used to test the performance of optimization algorithms.
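For reference, Himmelblau's function is commonly defined as f(x, y) = (x² + y − 11)² + (x + y² − 7)². The sketch below uses this standard textbook definition; it is assumed, not confirmed by this guide, that the Himmelblau_Function workflow evaluates the same expression.

```python
def himmelblau(x, y):
    """Standard Himmelblau function; it has four global minima where f = 0."""
    return (x ** 2 + y - 11) ** 2 + (x + y ** 2 - 7) ** 2


print(himmelblau(3.0, 2.0))  # 0.0, one of the four global minima
print(himmelblau(0.0, 0.0))  # 170.0
```

Because the function has several equally good minima, different tuners (or different random seeds) can legitimately converge to different points.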

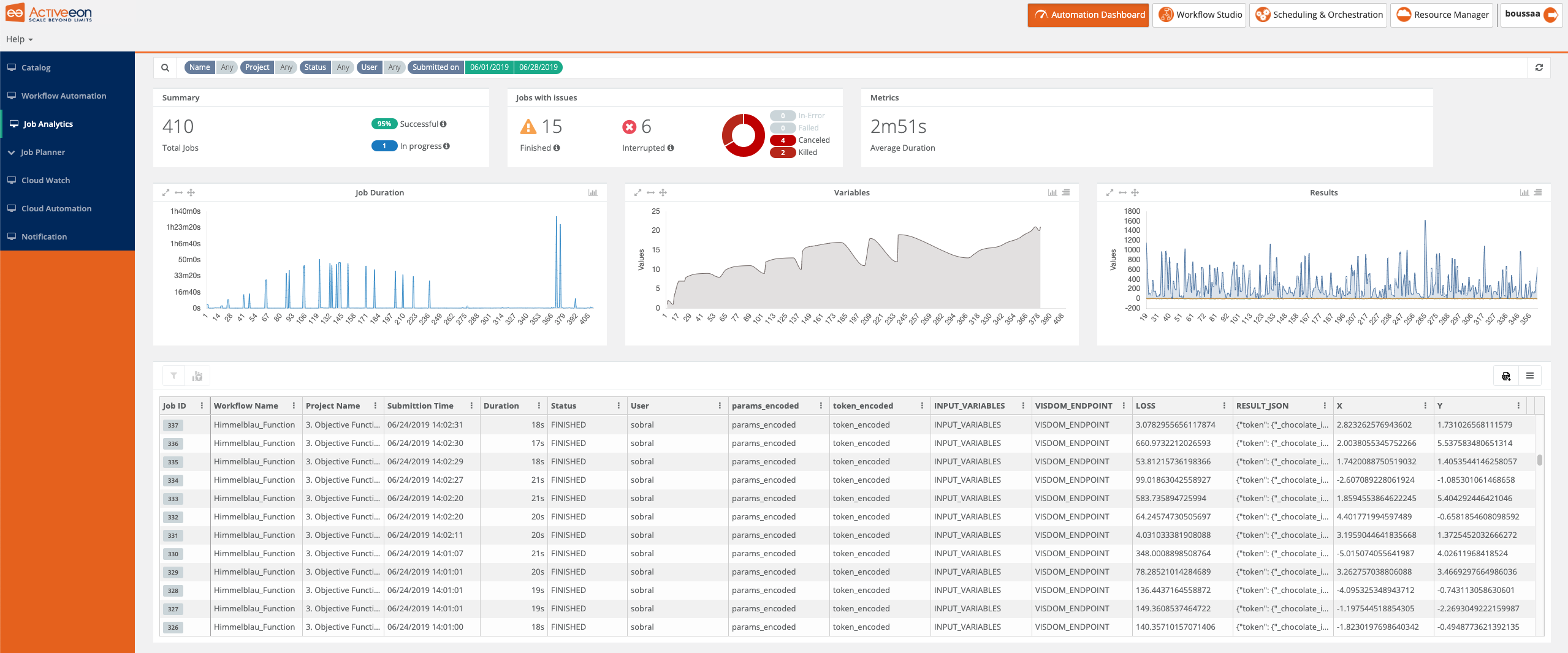

5. Job Analytics

The ProActive Job Analytics is a dashboard that provides an overview of executed workflows along with their input variables and results.

It offers several functionalities, including:

- Advanced search by name, user, date, state, etc.
- Execution metrics summary about durations, encountered issues, etc.
- Charts to track variables and results evolution and correlation.
- Export data in multiple formats for further use in analytics tools.

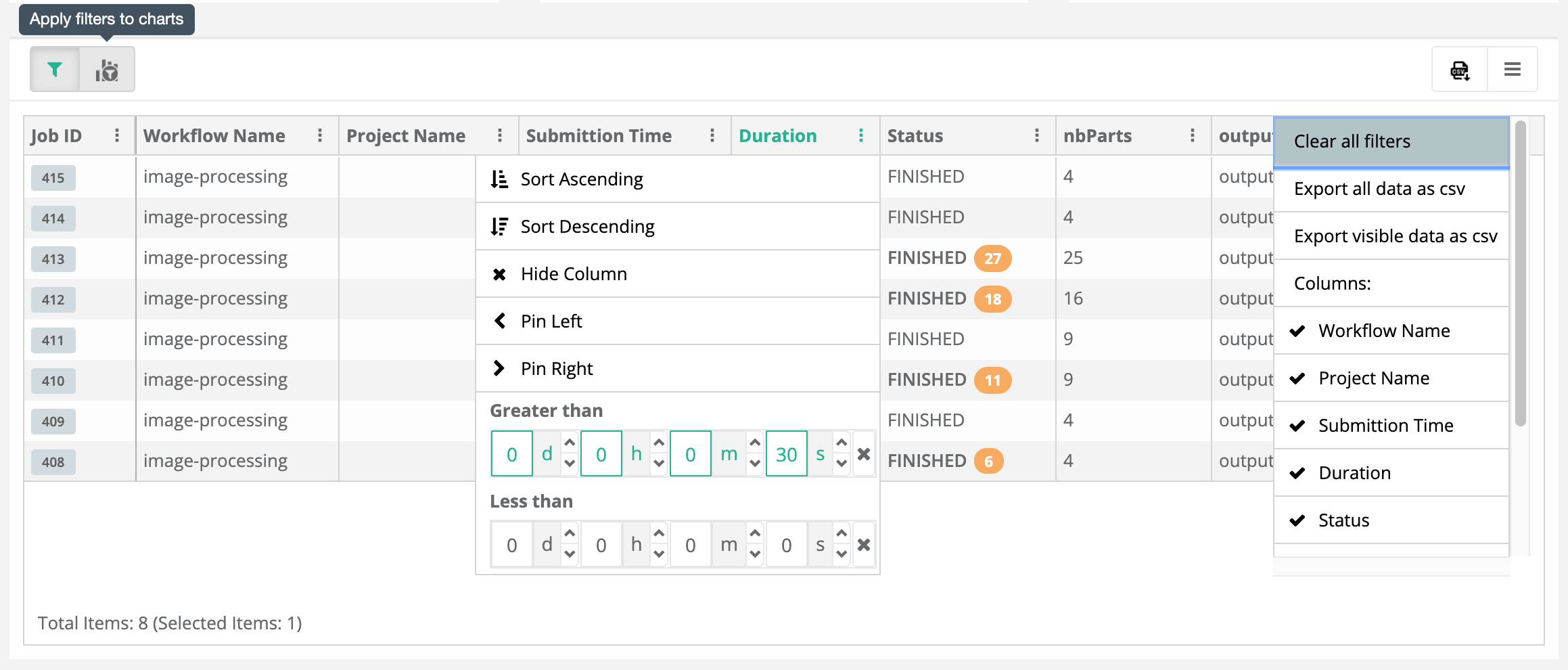

The screenshot below shows an overview of the Job Analytics Portal.

Job Analytics is very useful to compare metrics and charts of workflows that have common variables and results. For example, a ML algorithm might take different variable values and produce multiple results. It would be interesting to analyze the correlation and evolution of algorithm results regarding the input variation (See also a similar example of AutoML). The following sections will show you some key features of the dashboard and how to use them for a better understanding of your job executions.

5.1. Job Search

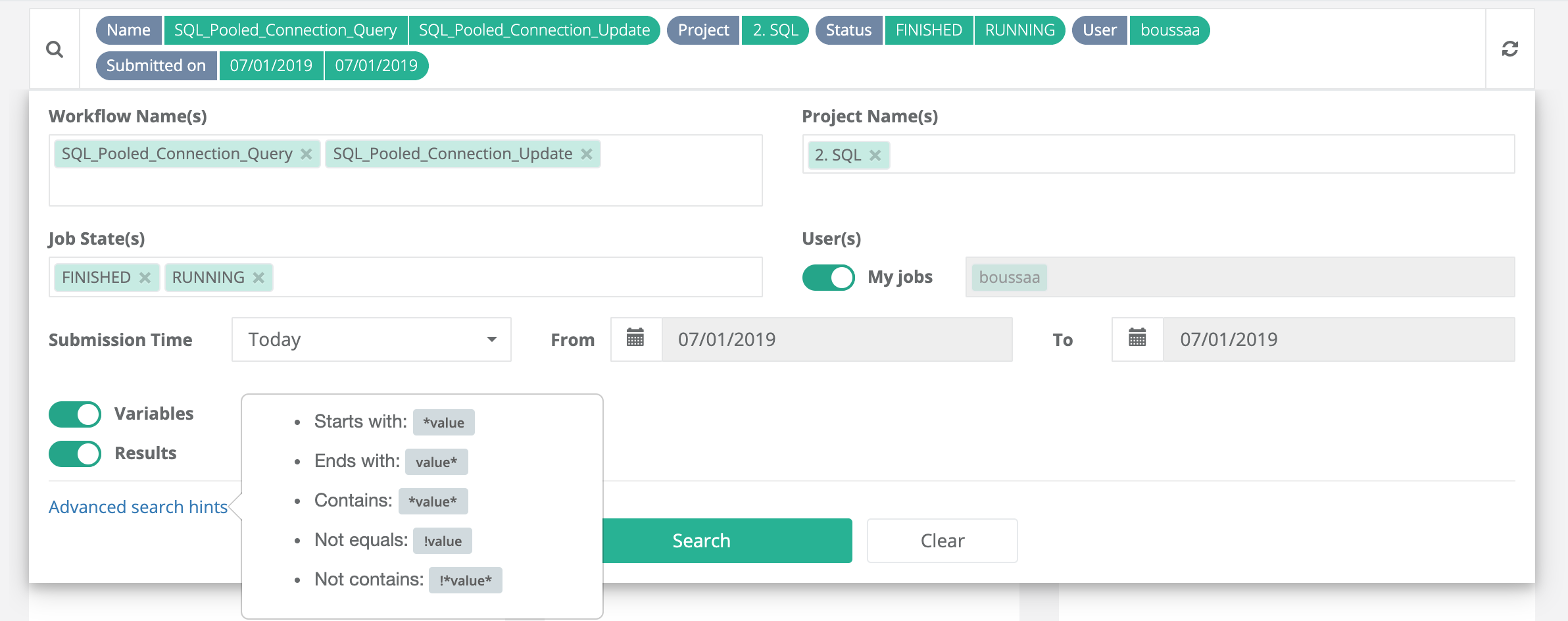

Job Analytics Portal includes a search window that allows users to search for jobs based on specific criteria (see screenshot below). The job search panel lets users select multi-value filters for the following job parameters:

- Workflow Name(s): Jobs can be filtered by workflow name. One or more workflow names can be selected or typed with the help of a built-in auto-complete feature that searches for workflows or buckets in the ProActive Catalog.
- Project Name(s): You can also filter by one or more project names. You just have to specify the project names for the jobs you would like to analyze.
- Job State(s): You can specify the state of the jobs you are looking for. The possible job states are: Pending, Running, Stalled, Paused, In_Error, Finished, Canceled, Failed, and Killed. For more information about job states, check the documentation here. Multiple values are accepted as well.
- User(s): This filter either selects only the jobs of the connected/current user or takes a list of users that have executed the jobs. By default, the toggle filter is activated to select only the current user's jobs.
- Submission Time: From the dropdown list, users can select a submission time frame (e.g., yesterday, last week, this month, etc.), or choose custom dates.
- Variables and results: You can choose whether or not to display the workflow variables and results. When deactivated, the charts related to variables and results evolution/correlation will not be displayed in the dashboard.

More advanced search options (highlighted in the advanced search hints) can be used to provide filter values with wildcards. For example, names that start with a specific string value are selected using value*. Other supported expressions are: *value for Ends with, *value* for Contains, !value for Not equals, and !*value* for Not contains.
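The semantics of these filter expressions can be summarized with a small matcher. This is a sketch only: the `matches` function is hypothetical, it assumes case-sensitive comparison, and it also accepts negated prefix/suffix forms such as !value*, which the list above does not mention.

```python
def matches(expression, value):
    """Return True if value satisfies a wildcard filter expression."""
    negate = expression.startswith("!")
    pattern = expression[1:] if negate else expression
    leading = pattern.startswith("*")
    trailing = pattern.endswith("*") and len(pattern) > 1
    core = pattern.strip("*")
    if leading and trailing:      # *value*  -> contains
        result = core in value
    elif trailing:                # value*   -> starts with
        result = value.startswith(core)
    elif leading:                 # *value   -> ends with
        result = value.endswith(core)
    else:                         # value    -> equals
        result = value == core
    return not result if negate else result


print(matches("Train*", "Train_Model"))    # True  (starts with)
print(matches("*Model", "Train_Model"))    # True  (ends with)
print(matches("*ain_Mo*", "Train_Model"))  # True  (contains)
print(matches("!Train_Model", "Train_Model"))  # False (not equals)
print(matches("!*Split*", "Train_Model"))  # True  (not contains)
```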

Now you can hit the search button to request jobs from the scheduler database according to the provided filter values. The search bar at the top shows a summary of the active search filters.

5.2. Execution Metrics

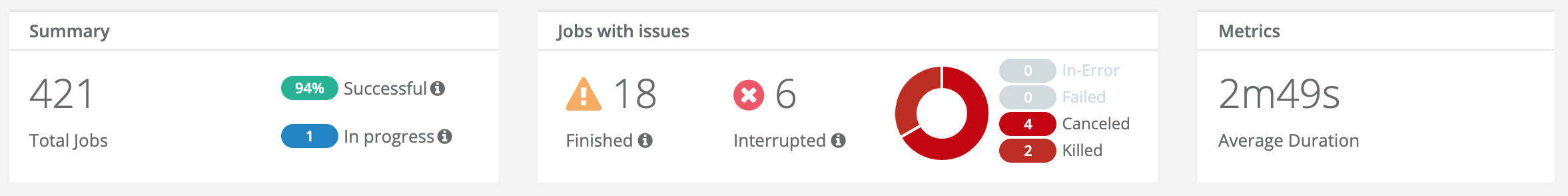

As shown in the screenshot below, Job Analytics Portal provides a summary of the most important job execution metrics. For instance, the dashboard shows:

- A first panel that displays the total number of jobs that correspond to the search query. It also shows the ratio of successful jobs over the total number, and the number of jobs that are in progress and not yet finished. Please note that the number of in-progress jobs corresponds to the moment when the search query is executed and is not automatically refreshed.
- A second summary panel that displays the number of jobs with issues. We distinguish two types of issues: jobs that are finished but have encountered issues during their execution, and interrupted jobs that did not finish their execution and were stopped due to diverse causes, such as insufficient resources, manual interruption, etc. Interrupted jobs include four states: In-Error, Failed, Canceled, and Killed.
- The last metric gives an overview of the average duration of the selected jobs.
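The metrics above can be sketched as simple aggregations over a list of job records. The record fields, the state spellings, the set of states counted as in progress, and the choice to treat every Finished job as successful are all assumptions made for this illustration; the portal computes its own values from the scheduler database.

```python
# Hypothetical job records; field names are assumptions for illustration.
jobs = [
    {"id": 1, "state": "Finished", "duration_s": 42.0},
    {"id": 2, "state": "Finished", "duration_s": 58.0},
    {"id": 3, "state": "Failed", "duration_s": 5.0},
    {"id": 4, "state": "Running", "duration_s": None},
    {"id": 5, "state": "Killed", "duration_s": 15.0},
]

# Assumed grouping of states; only the interrupted group is listed in the text.
IN_PROGRESS = {"Pending", "Running", "Stalled", "Paused"}
INTERRUPTED = {"In_Error", "Failed", "Canceled", "Killed"}

total = len(jobs)
successful = sum(1 for job in jobs if job["state"] == "Finished")
in_progress = sum(1 for job in jobs if job["state"] in IN_PROGRESS)
interrupted = sum(1 for job in jobs if job["state"] in INTERRUPTED)

# Average duration over the jobs that have a recorded duration.
durations = [job["duration_s"] for job in jobs if job["duration_s"] is not None]
average_duration = sum(durations) / len(durations)

print(total, successful / total, in_progress, interrupted, average_duration)
# 5 0.4 1 2 30.0
```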

5.3. Job Charts

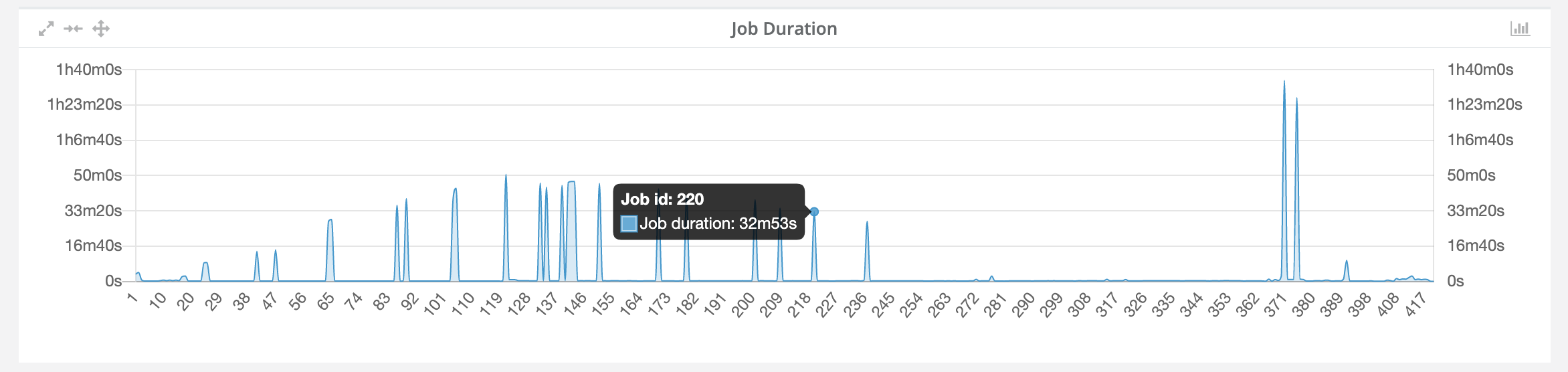

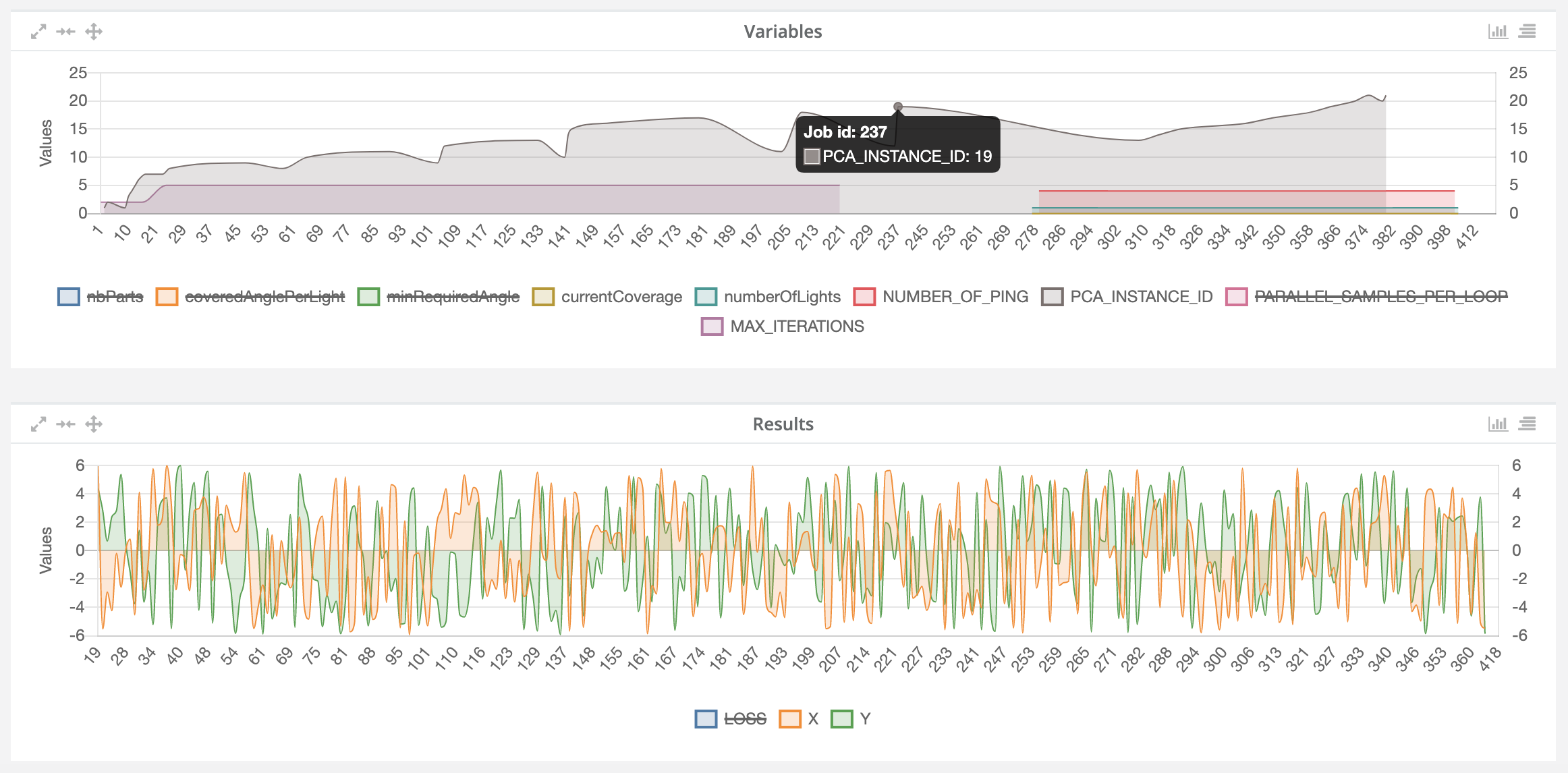

Job Analytics includes three types of charts:

- Job duration chart: This chart shows durations per job. The x-axis shows the job ID and the y-axis shows the job duration. Hovering over the lines also displays the same information as a tooltip (see screenshot below). The duration chart helps users identify any abnormal performance behaviour among several workflow executions.
- Job variables chart: This chart is intended to show all variable values of the selected jobs. It represents the evolution of all numeric-only variables of the selected jobs. Specific input variables can be hidden or shown by clicking on the variable name in the legend, as shown in the figure below.
- Job results chart: This chart is intended to show all result values of the selected jobs. It represents the evolution of all numeric-only results of the selected jobs. Specific results can also be hidden or shown by clicking on the result name in the legend, as shown in the figure below.

All charts provide some advanced features such as "maximize" and "enlarge" to better visualize the results, and "move" to customize the dashboard layout (see top left side of charts). All of them provide the hovering feature described previously and two display types: line and bar charts. A toggle button located at the top right of the chart switches from one to the other. Showing and hiding variables and results works the same way.

5.4. Job Execution Table

The last element of the Job Analytics dashboard is a summary table that contains all job executions returned by the search query. It includes the job ID, status, duration, submission time, variables, results, etc. The jobs table provides many features:

- Filtering: users can specify filter values for every column. For instance, the picture below applies a filter on the duration to keep only jobs that last more than 30s. For string values, we can apply string-related filters such as Contains. For dates, a calendar is displayed to help users select the right date. Please note that the types of variables and results are not automatically detected. Therefore, users can choose either the Contains filter or the Greater than and Less than filters.
- Sort, hide, pin left and right columns: allows users to easily handle and display data according to their needs.
- Export the job data to CSV format: enables users to exploit and process job data using other analytics tools such as R, Matlab, BI tools, ML APIs, etc.
- Clear and apply filters: When filters are applied, the displayed data is updated. Therefore, we provide a button (see apply filters to charts on the top left of the table screenshot) that synchronizes the charts with the filtered data in the table. Finally, it is possible to clear all filters. This automatically deactivates the synchronization.
- Link to scheduler jobs: data in the job ID column is linked to the job executions in the scheduler. For example, if users want to access the logs of a failing job, they can click on the corresponding job ID to be redirected to the job location in the Scheduling Portal.

We note also that clicking on the issue types and charts described in the previous sections filters the table to show the corresponding jobs.

| It is important to notice that the dashboard layout and search preferences are saved in the browser cache so that users can have access to their last dashboard and search settings. |

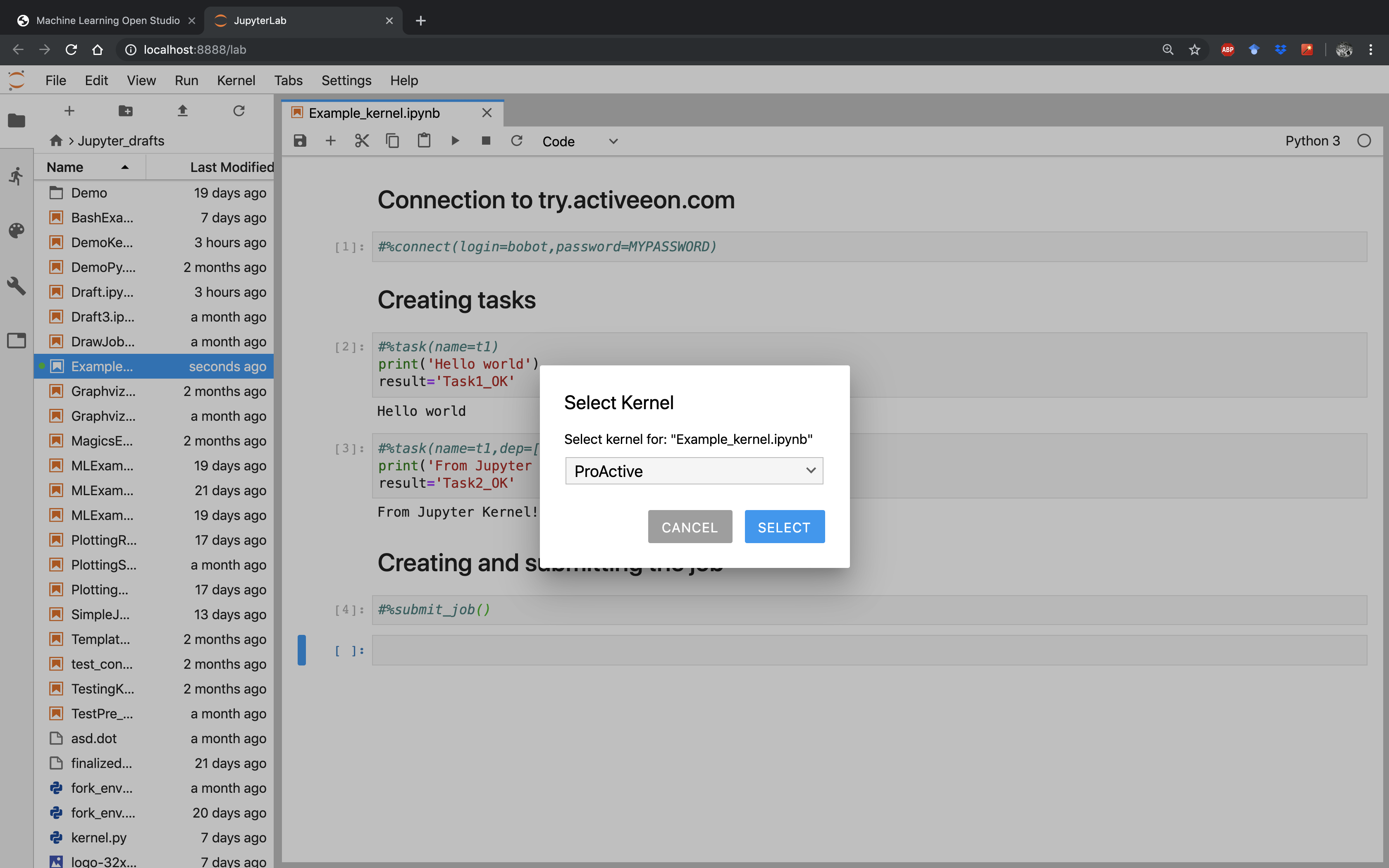

6. ProActive Jupyter Kernel

The ActiveEon Jupyter Kernel adds a kernel backend to Jupyter. This kernel interfaces directly with the ProActive scheduler and constructs tasks and workflows to execute them on the fly.

With this interface, users can run their code locally and test it using a native python kernel, and by a simple switch to ProActive kernel, run it on remote public or private infrastructures without having to modify the code. See the example below:

6.1. Installation

6.1.1. Requirements

Python 2 or 3

6.1.2. Using PyPI

- Open a terminal.

- Install the ProActive Jupyter kernel with the following commands:

$ pip install proactive proactive-jupyter-kernel --upgrade

$ python -m proactive-jupyter-kernel.install

6.1.3. Using source code

- Open a terminal.

- Clone the repository on your local machine:

$ git clone git@github.com:ow2-proactive/proactive-jupyter-kernel.git

- Install the ProActive Jupyter kernel with the following commands:

$ pip install proactive-jupyter-kernel/

$ python -m proactive-jupyter-kernel.install

6.2. Platform

You can use any Jupyter platform. We recommend JupyterLab. To launch it from your terminal after having installed it:

$ jupyter lab

or in daemon mode:

$ nohup jupyter lab &>/dev/null &

When opened, click on the ProActive icon to open a notebook based on the ProActive kernel.

6.3. Help

As a quick start, we recommend running the #%help() pragma using the following script:

#%help()

This script gives a brief description of all the pragmas that the ProActive Kernel provides.

To get a more detailed description of a specific pragma, run the following script:

#%help(pragma=PRAGMA_NAME)

6.4. Connection

6.4.1. Using connect()

If you are trying ProActive for the first time, sign up on the try platform.

Once you receive your login and password, connect to the trial platform using the #%connect() pragma:

#%connect(login=YOUR_LOGIN, password=YOUR_PASSWORD)

To connect to another ProActive server host, use the same pragma this way:

#%connect(host=YOUR_HOST, [port=YOUR_PORT], login=YOUR_LOGIN, password=YOUR_PASSWORD)

Notice that the port parameter is optional. The default connection port is 8080.

6.4.2. Using a configuration file

For automatic sign-in, create a file named proactive_config.ini in your notebook working directory.

Fill in your configuration file according to the following format:

[proactive_server]

host=YOUR_HOST

port=YOUR_PORT

[user]

login=YOUR_LOGIN

password=YOUR_PASSWORD

Save your changes and restart the ProActive kernel.

You can also force the current kernel to connect using any .ini config file through the #%connect() pragma:

#%connect(path=PATH_TO/YOUR_CONFIG_FILE.ini)

(For more information about this format please check configParser)
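Since the file follows the INI format handled by Python's standard `configparser` module, its content can be checked locally before restarting the kernel. The sketch below is only a way to validate such a file; the `load_settings` helper is hypothetical and is not how the ProActive kernel itself reads the configuration. The fallback port of 8080 mirrors the default connection port mentioned above.

```python
import configparser

# Example content in the format described above; values are placeholders.
EXAMPLE = """
[proactive_server]
host=YOUR_HOST
port=8080

[user]
login=YOUR_LOGIN
password=YOUR_PASSWORD
"""


def load_settings(text):
    """Parse the INI text and return the connection settings as a dict."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    server = parser["proactive_server"]
    user = parser["user"]
    return {
        "host": server["host"],
        "port": server.getint("port", fallback=8080),  # port is optional
        "login": user["login"],
        "password": user["password"],
    }


settings = load_settings(EXAMPLE)
print(settings["host"], settings["port"])  # YOUR_HOST 8080
```

To check a real file, replace `parser.read_string(text)` with `parser.read(path)`. A missing section or key raises `KeyError`, which makes typos in section names easy to spot.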

6.5. Usage

6.5.1. Creating a Python task

To create a new task, use the pragma #%task() followed by the task implementation script written in a notebook code block.

To use this pragma, at least a task name has to be provided. Example:

#%task(name=myTask)

print('Hello world')

General usage:

#%task(name=TASK_NAME, [language=SCRIPT_LANGUAGE], [dep=[TASK_NAME1,TASK_NAME2,...]], [generic_info=[(KEY1,VAL1), (KEY2,VALUE2),...]], [export=[VAR_NAME1,VAR_NAME2,...]], [import=[VAR_NAME1,VAR_NAME2,...]], [path=IMPLEMENTATION_FILE_PATH])

Users can also provide more information about the task using the pragma’s options. In the following, we give more details about the possible options:

Language

The language parameter is needed when the task script is not written in native Python. If not provided, Python will be

selected as the default language.

The supported programming languages are:

-

Linux_Bash

-

Windows_Cmd

-

DockerCompose

-

Scalaw

-

Groovy

-

Javascript

-

Jython

-

Python

-

Ruby

-

Perl

-

PowerShell

-

R

Here is an example that shows a task implementation written in Linux_Bash:

#%task(name=myTask, language=Linux_Bash)

echo 'Hello, World!'

Dependencies

One of the most important notions in workflows is the dependencies between tasks. To specify this information, use the

dep parameter. Its value should be a list of all tasks on which the new task depends. Example:

#%task(name=myTask,dep=[parentTask1,parentTask2])

print('Hello world')

Generic information

To set the values of advanced ProActive variables called Generic Information, provide the generic_info parameter. Its value should be a list of (key,value) tuples giving the name and the value of each Generic Information. Example:

#%task(name=myTask, generic_info=[(var1,value1),(var2,value2)])

print('Hello world')

Export/import variables

The export and import parameters propagate variables between the different tasks of a workflow.

If the variables var1 and var2 of myTask1 are needed in myTask2, both pragmas have to specify this information as follows:

-

myTask1 should include an export parameter with a list of these variable names,

-

myTask2 should include an import parameter with a list including the same names.
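As a rough mental model of this export/import rule, the sketch below runs each task body in its own namespace and passes only the exported variables to the next task. The run_task helper is hypothetical and only mimics the visible behaviour in plain Python; it does not reflect how the ProActive kernel actually transfers variables between distributed tasks.

```python
def run_task(source, imported=None, export=()):
    """Run a task body in its own namespace and return the exported variables."""
    # Only the imported variables are visible to the task body.
    namespace = dict(imported or {})
    exec(source, namespace)
    return {name: namespace[name] for name in export}


task1_source = 'var1 = "Hello"\nvar2 = "ActiveEon!"'
task2_source = 'message = var1 + " from " + var2'

# myTask1 exports var1 and var2; myTask2 imports them.
exported = run_task(task1_source, export=["var1", "var2"])
result = run_task(task2_source, imported=exported, export=["message"])
print(result["message"])  # Hello from ActiveEon!
```

Running the second body without the imported variables raises a NameError, which is the situation the import parameter is meant to avoid.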

Example:

myTask1 implementation block would be:

#%task(name=myTask1, export=[var1,var2])

var1 = "Hello"

var2 = "ActiveEon!"

and the myTask2 implementation block would be:

#%task(name=myTask2, dep=[myTask1], import=[var1,var2])

print(var1 + " from " + var2)

Implementation file

It is also possible to use an external implementation file to define the task implementation. To do so, the option path

should be used.

Example:

#%task(name=myTask,path=PATH_TO/IMPLEMENTATION_FILE.py)

6.5.2. Importing libraries

The main difference between the ProActive kernel and 'native language' kernels lies in the way memory is accessed during block execution. In a typical native language kernel, the whole script (all the notebook blocks) is executed locally in the same shared memory space, whereas the ProActive kernel executes each created task in an independent process. To ease the transition from native language kernels to the ProActive kernel, we included the #%import() pragma. This pragma lets the user add libraries that are common to all created tasks implemented in the same native script language, and therefore to their distributed processes.

The import pragma is used as follows:

#%import([language=SCRIPT_LANGUAGE])

Example:

#%import(language=Python)

import os

import pandas

| If the language is not specified, Python is used as the default language. |
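To make the "independent process" point concrete, the sketch below runs two small task bodies in separate Python interpreters and prepends a shared import header to each, which is roughly the effect that #%import() is described as having. The run_in_own_process helper and the task bodies are assumptions made for this example, and local subprocesses only stand in for ProActive's distributed task execution.

```python
import subprocess
import sys

# Libraries that every task needs, kept in one shared header.
common_imports = "import os\nimport math\n"

task_bodies = {
    "task_a": "print(math.sqrt(16))",
    "task_b": "print(os.path.basename('/tmp/data.csv'))",
}


def run_in_own_process(body, header=""):
    """Run one task body in a fresh interpreter; return (returncode, stdout)."""
    completed = subprocess.run(
        [sys.executable, "-c", header + body],
        capture_output=True,
        text=True,
    )
    return completed.returncode, completed.stdout.strip()


# With the shared header, each independent process has the imports it needs.
outputs = {
    name: run_in_own_process(body, common_imports)
    for name, body in task_bodies.items()
}

# Without the header, the same body fails: nothing is shared between processes.
missing = run_in_own_process(task_bodies["task_a"])
print(outputs, missing)
```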

6.5.3. Adding a fork environment

To configure a fork environment for a task, use the #%fork_env() pragma. To do so, you have to provide the name of the

corresponding task and the fork environment implementation.

Example:

#%fork_env(name=TASK_NAME)

containerName = 'activeeon/dlm3'

dockerRunCommand = 'docker run '

dockerParameters = '--rm '

paHomeHost = variables.get("PA_SCHEDULER_HOME")

paHomeContainer = variables.get("PA_SCHEDULER_HOME")

proActiveHomeVolume = '-v '+paHomeHost +':'+paHomeContainer+' '

workspaceHost = localspace

workspaceContainer = localspace

workspaceVolume = '-v '+localspace +':'+localspace+' '

containerWorkingDirectory = '-w '+workspaceContainer+' '

preJavaHomeCmd = dockerRunCommand + dockerParameters + proActiveHomeVolume + workspaceVolume + containerWorkingDirectory + containerName

Or, you can provide the task name and the path of a .py file containing the fork environment code:

#%fork_env(name=TASK_NAME, path=PATH_TO/FORK_ENV_FILE.py)

6.5.4. Adding a selection script

To add a selection script to a task, use the #%selection_script() pragma. To do so, you have to provide the name of

the corresponding task and the selection code implementation.

Example:

#%selection_script(name=TASK_NAME)

selected = True

Or, you can provide the task name and the path of a .py file containing the selection code:

#%selection_script(name=TASK_NAME, path=PATH_TO/SELECTION_CODE_FILE.py)

6.5.5. Adding job fork environment and/or selection script

If the selection scripts and/or the fork environments are the same for all job tasks, we can add them just once using

the job_selection_script and/or the job_fork_env pragmas.

Usage:

For a job selection script, use:

#%job_selection_script([language=SCRIPT_LANGUAGE], [path=./SELECTION_CODE_FILE.py], [force=on/off])

For a job fork environment, use:

#%job_fork_env([language=SCRIPT_LANGUAGE], [path=./FORK_ENV_FILE.py], [force=on/off])

The force parameter defines whether the pragma overwrites the task selection scripts or fork environments that are already set.
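One way to picture the force parameter is the small model below. It assumes that, with force off, a task that already has its own script keeps it and only tasks without one receive the job-level script; the text above does not spell out this second point, so treat it as an assumption. The apply_job_script function and the task dictionary are hypothetical and are not part of the kernel.

```python
def apply_job_script(task_scripts, job_script, force=False):
    """Return a new task -> script mapping after applying a job-level script.

    Assumption: with force off, tasks that already have a script keep it.
    """
    result = {}
    for task, script in task_scripts.items():
        if force or script is None:
            result[task] = job_script
        else:
            result[task] = script
    return result


# myTask1 has no selection script yet; myTask2 already defines one.
tasks = {"myTask1": None, "myTask2": "selected = False"}
job_script = "selected = True"

kept = apply_job_script(tasks, job_script, force=False)
overwritten = apply_job_script(tasks, job_script, force=True)
print(kept)
print(overwritten)
```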

6.5.6. Adding pre and/or post scripts

Sometimes, specific scripts have to be executed before and/or after a particular task. For this purpose, the kernel provides the pre_script and post_script pragmas.

To add a pre-script to a task, use:

#%pre_script(name=TASK_NAME, language=SCRIPT_LANGUAGE, [path=./PRE_SCRIPT_FILE.py])

To add a post-script to a task, use:

#%post_script(name=TASK_NAME, language=SCRIPT_LANGUAGE, [path=./POST_SCRIPT_FILE.py])

6.5.7. Create a job

To create a job, use the #%job() pragma:

#%job(name=JOB_NAME)

If the job has already been created, calling this pragma simply renames it with the newly provided name.

| It is not necessary to create and assign a name explicitly to the job. If not done by the user, this step is implicitly performed when the job is submitted (check section Submit your job to the scheduler for more information). |

6.5.8. Visualize job

To visualize the created workflow, use the #%draw_job() pragma to plot the workflow graph that represents the job

into a separate window:

#%draw_job()

Two optional parameters can be used to configure the way the kernel plots the workflow graph.

Inline plotting:

If this parameter is set to off, the workflow graph is plotted in an external Matplotlib window. The default value is on.

#%draw_job(inline=off)

Save the workflow graph locally:

To save the workflow graph to a .png file, set this option to on. The default value is off.

#%draw_job(save=on)

Note that the job’s name can take one of the following possible values:

-

The value of the name parameter, if provided

-

The job’s name, if created

-

The notebook’s name, if the kernel can retrieve it

-

Unnamed_job, otherwise.
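The precedence in the list above can be sketched as a simple fallback chain. The resolve_job_name function is a hypothetical helper written for this illustration, assuming the list is ordered from highest to lowest priority; it is not the kernel's own code.

```python
def resolve_job_name(param_name=None, job_name=None, notebook_name=None):
    """Pick the first available name, following the order of the list above."""
    for candidate in (param_name, job_name, notebook_name):
        if candidate:
            return candidate
    return "Unnamed_job"


print(resolve_job_name(param_name="my_plot", job_name="my_job"))
print(resolve_job_name(job_name="my_job", notebook_name="analysis"))
print(resolve_job_name(notebook_name="analysis"))
print(resolve_job_name())
```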

General usage:

#%draw_job([name=JOB_NAME], [inline=off], [save=on])

6.5.9. Export the workflow graph in dot format

To export the created workflow in the GraphViz .dot format, use the #%write_dot() pragma:

#%write_dot(name=FILE_NAME)

6.5.10. Submit your job to the scheduler

To submit the job to the ProActive Scheduler, use the #%submit_job() pragma:

#%submit_job()

If the job has not been created, or is not up to date, #%submit_job() creates a new job with the same name as the old one.

To provide a new name, use the same pragma and provide a name as parameter:

#%submit_job([name=JOB_NAME])

If the job’s name is not set, the ProActive kernel uses the current notebook name, if possible, or gives a random one.

6.5.11. List all submitted jobs

To get the IDs and names of all submitted jobs, use the #%list_submitted_jobs() pragma this way:

#%list_submitted_jobs()

6.5.12. Print results

To get the job result(s), use the #%get_result() pragma and provide the job name:

#%get_result(name=JOB_NAME)

Or the job ID:

#%get_result(id=JOB_ID)

The returned values of your final tasks will be automatically printed.

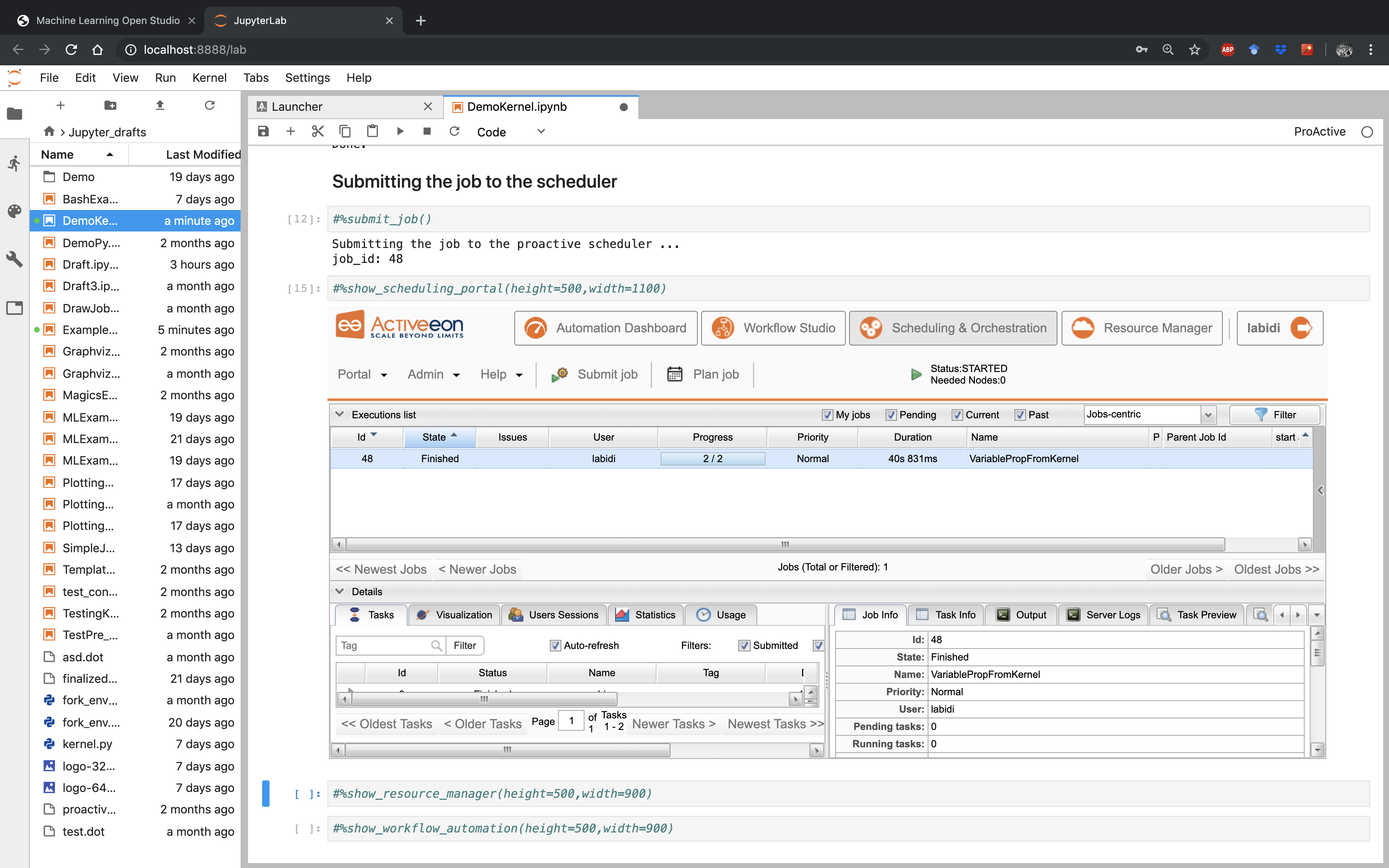

6.6. Display and use ActiveEon Portals directly in Jupyter

Finally, to gain control over more parameters and features, the user should use the ActiveEon Studio portals. The main ones are the Resource Manager, the Scheduling Portal and the Workflow Automation.

The example below shows how users can directly monitor the execution of their submitted job in the scheduling portal:

To show the resource manager portal related to the host you are connected to, just run:

#%show_resource_manager([height=HEIGHT_VALUE, width=WIDTH_VALUE])

For the related scheduling portal:

#%show_scheduling_portal([height=HEIGHT_VALUE, width=WIDTH_VALUE])

And, for the related workflow automation:

#%show_workflow_automation([height=HEIGHT_VALUE, width=WIDTH_VALUE])

The height and width parameters allow the user to adjust the size of the window inside the notebook.

7. Customize the ML Bucket

7.1. Create or Update a ML Task

The Machine Learning Bucket contains various open source tasks that can be used by a simple drag and drop.

It is possible to enrich the ML Bucket by adding your own tasks (see section 4.3).

It is also possible to customize the code of the generic ML tasks. In this case, you need to drag and drop the targeted task to modify its code in the Task Implementation section.

| It is also possible to add and/or delete variables of each task, set your own fork environments, etc. More details are available in the ProActive User Guide. |

7.2. Set the Fork Environment

A fork execution environment is a new Java Virtual Machine (JVM) which is started exclusively to execute a task. Starting a new JVM means that the task inside it will run in a new environment. This environment can be set up by the creator of the task. A new JVM is set up with a new classpath, new system properties and more customization.

We used a Docker fork environment for all the ML tasks, with activeeon/dlm3 as the Docker container for all of them. If your task needs to install new ML libraries which are not available in this container, use your own Docker container or an appropriate environment with the needed libraries.

| The use of docker containers is recommended as that way other tasks will not be affected by change. Docker containers provide isolation so that the host machine’s software stays the same. More details available on ProActive User Guide |
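To show what a Docker fork environment amounts to, the sketch below assembles the same kind of command prefix as the fork environment example in section 6.5.3. The build_docker_prefix function and the sample paths (/opt/proactive, /tmp/workspace) are hypothetical; in a real fork environment script, the scheduler home and the workspace come from variables.get("PA_SCHEDULER_HOME") and localspace.

```python
def build_docker_prefix(image, scheduler_home, workspace):
    """Assemble the docker command that is prefixed to the task's Java command."""
    parts = [
        "docker run",
        "--rm",                                  # remove the container when the task ends
        "-v {0}:{0}".format(scheduler_home),     # mount the ProActive home at the same path
        "-v {0}:{0}".format(workspace),          # mount the task workspace at the same path
        "-w {0}".format(workspace),              # run inside the workspace
        image,
    ]
    return " ".join(parts)


# Sample values for illustration only.
prefix = build_docker_prefix("activeeon/dlm3", "/opt/proactive", "/tmp/workspace")
print(prefix)
# docker run --rm -v /opt/proactive:/opt/proactive -v /tmp/workspace:/tmp/workspace -w /tmp/workspace activeeon/dlm3
```

To run a task with additional libraries, the image argument would be replaced by the name of your own image.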

7.3. Publish a ML Task

The Catalog menu lets a user publish newly created and/or updated tasks to the Machine Learning Bucket. To do so, click on the Catalog menu, then on Publish current Workflow to the Catalog.

Choose the machine-learning bucket to store your newly added workflow.

If a task with the same name already exists in the 'machine-learning' bucket, it will be updated.

We recommend submitting tasks with a commit message to make it easier to differentiate between the submitted versions.

| More details available on ProActive User Guide |

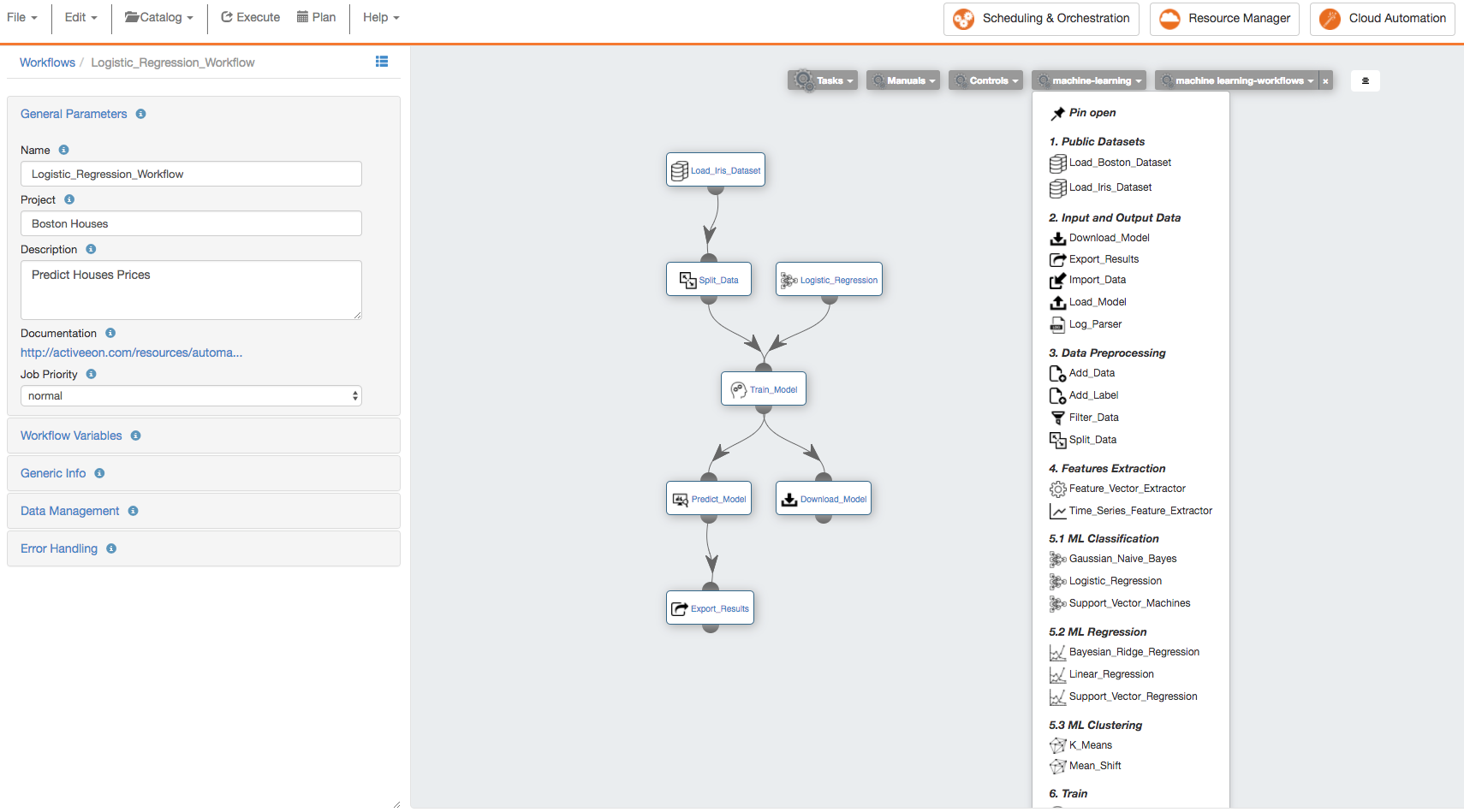

7.4. Create a ML Workflow

The quickstart tutorial on try.activeeon.com shows you how to build a simple workflow using ProActive Studio.

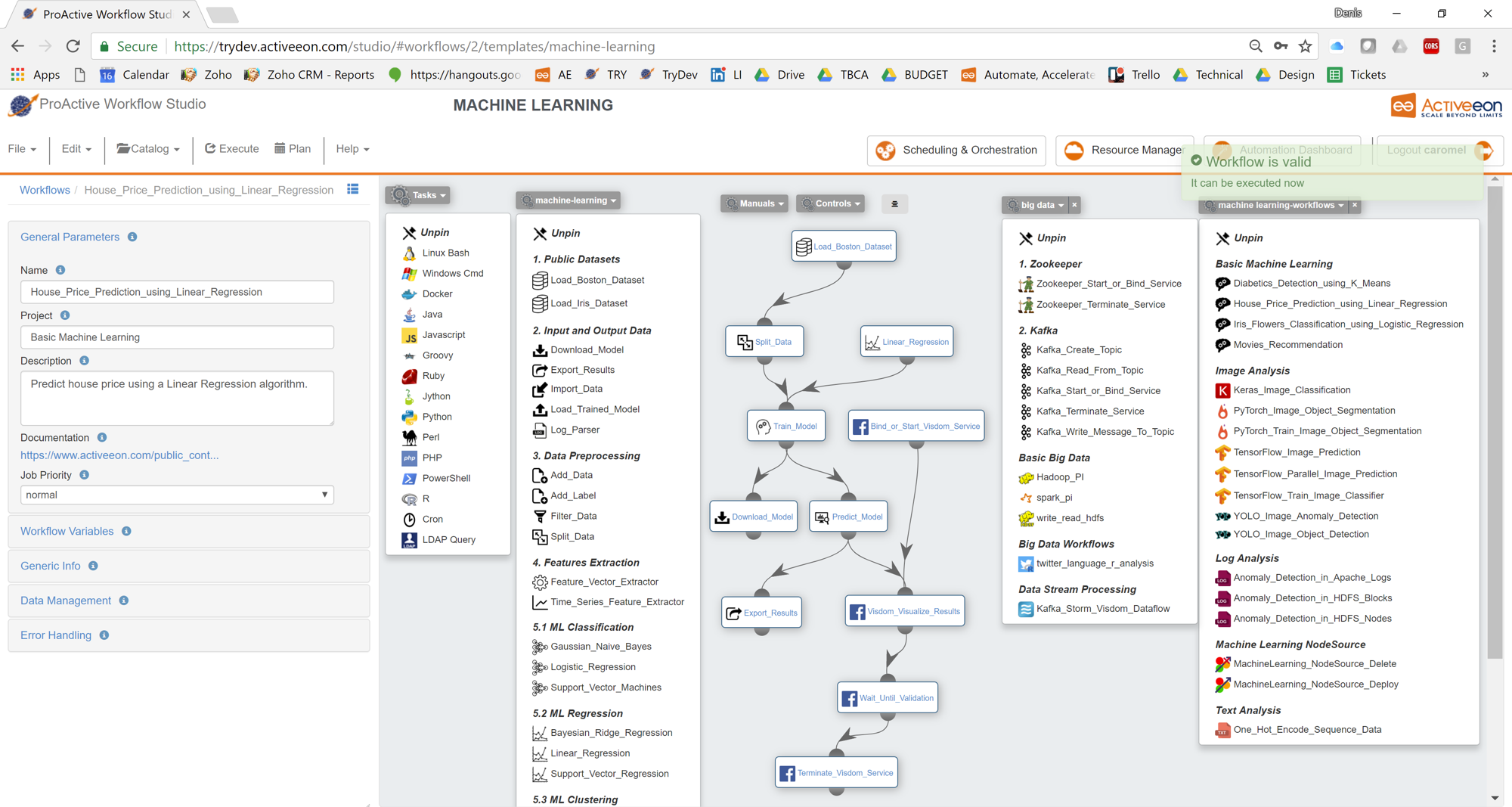

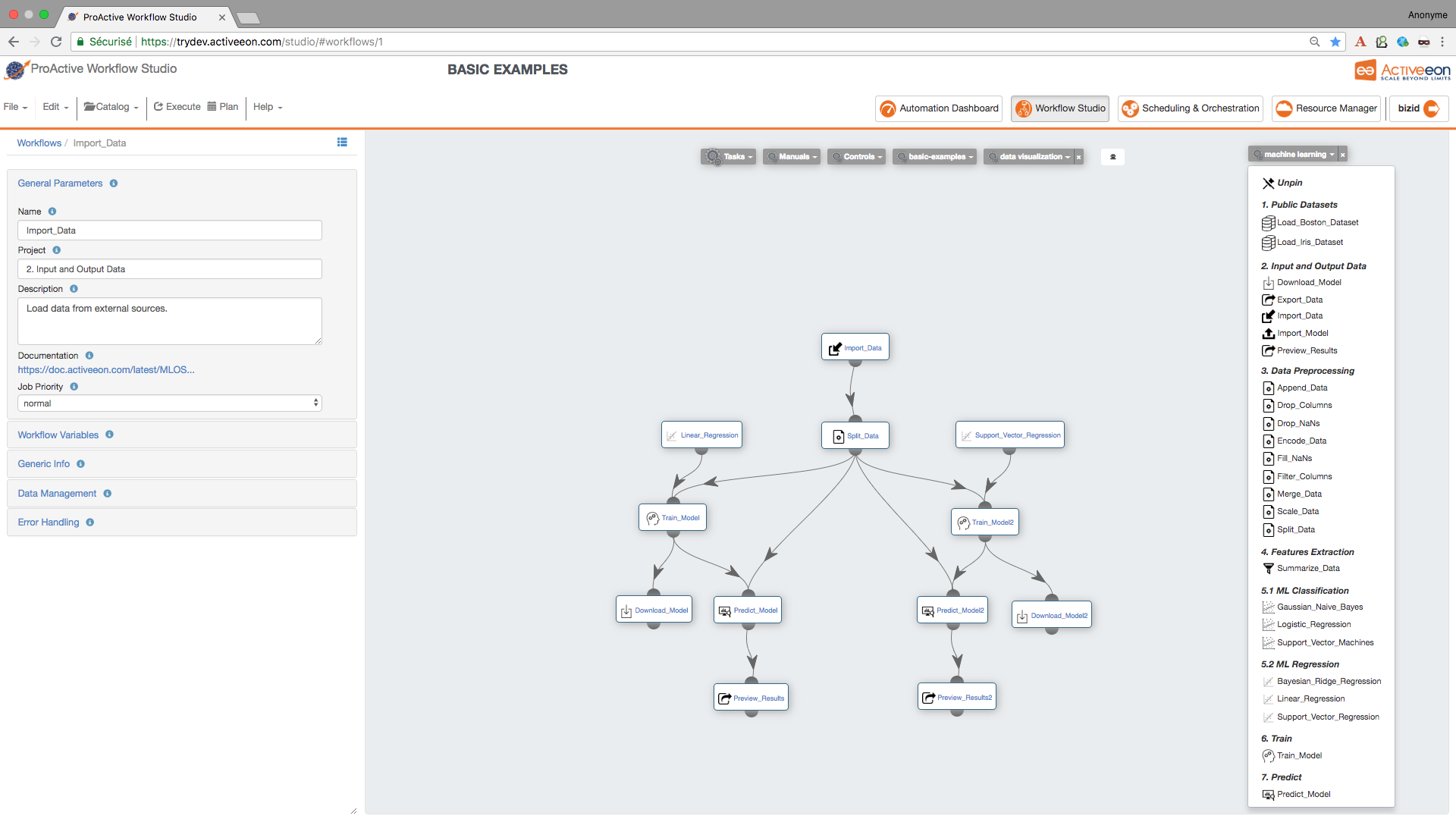

We show below an example of a workflow created with the Studio:

On the left are the General Parameters of the workflow, with the following information:

-

Name: the name of the workflow.

-

Project: the name of the project to which the workflow belongs.

-

Description: the textual description of the workflow.

-

Documentation: if the workflow has a Generic Information named "Documentation", then its URL value is displayed as a link.

-

Job Priority: the priority assigned to the workflow. It is set to NORMAL by default, but can be increased or decreased once the job is submitted.

| The workflow shown above is available in the 'machine-learning-workflows' bucket. |

8. ML Workflows Examples

The MLOS provides a fast, easy and practical way to execute different workflows using the ML bucket. We present useful ML workflows for different applications in the following sub-sections.

To test these workflows, you need to add the machine-learning-workflows bucket as main catalog in the ProActive Studio.

-

Open the ProActive Workflow Studio home page.

-

Create a new workflow.

-

Click on the Catalog menu, then Add Bucket as Extra Catalog Menu, and select the machine-learning-workflows bucket.

-

Open this added extra catalog menu and drag and drop the workflow example of your choice.

-

Execute the chosen workflow, track its progress and preview its results.

More details about these workflows are available in ActiveEon’s Machine Learning Documentation.

8.1. Basic ML

The following workflows present some ML basic examples. These workflows are built using generic ML and data visualization tasks available on the ML and Data Visualization buckets.

Diabetics_Detection_using_K_means: trains and tests a clustering model using Mean_shift algorithm.

House_Price_Prediction_using_Linear_Regression: trains and tests a regression model using the Linear Regression algorithm.

Vehicle_Type_Using_Model_Explainability: predicts the vehicle type based on silhouette measurements, and applies ELI5 and Kernel Explainer to understand the model’s global behavior or specific predictions.

Iris_Flowers_Classification_using_Logistic_Regression: trains and tests a predictive model using the Logistic Regression algorithm.

Movies_Recommendation: creates a movie recommendation engine using a collaborative filtering algorithm.

Parallel_Classification_Model_Training: trains three different classification models.

Parallel_Regression_Model_Training: trains three different regression models.

8.2. Basic AutoML

The following workflows present some ML basic examples using AutoML generic tasks available in the machine-learning bucket.

Breast_Cancer_Detection_Using_AutoSklearn_Classifier: tests several ML pipelines and selects the best model for breast cancer detection.

California_Housing_Prediction_Using_TPOT_Regressor: tests several ML pipelines and selects the best model for California housing prediction.

8.3. Log Analysis

The following workflows are designed to detect anomalies in log files. They are constructed using generic tasks which are available on the machine-learning and data-visualization buckets.

Anomaly_Detection_in_Apache_Logs: detects intrusions in Apache logs using a predictive model trained with the Support Vector Machines algorithm.

Anomaly_detection_in_HDFS_Blocks: trains and tests an anomaly detection model for detecting anomalies in HDFS Blocks.

Anomaly_detection_in_HDFS_Nodes: trains and tests an anomaly detection model for detecting anomalies in HDFS Nodes.

Unsupervised_Anomaly_Detection: detects anomalies using an Unsupervised One-Class SVM.

8.4. Data Analytics

The following workflows are designed for feature engineering and data fusion.

Data_Fusion_And_Encoding: fuses different data structures.

Data_Anomaly_Detection: detects anomalies on energy consumption by customers.

Desjardins_DataCup_Feature_Engineering: shows a data processing pipeline used for Desjardins Data Cup (https://datacup.ca/). The workflow exports the generated CSV file to the Amazon AWS S3.

Desjardins_DataCup_Model_Training: shows a training step used for Desjardins Data Cup (https://datacup.ca/). The input data is the file generated by the feature engineering workflow.

Diabetics_Results_Visualization_Using_Tableau: visualizes Diabetics Results Using Tableau.

9. Deep Learning Workflows Examples

MLOS provides a fast, easy and practical way to execute deep learning workflows. In the following sub-sections, we present useful deep learning workflows for text and image classification and generation.

You can test these workflows by following these steps:

-

Open the ProActive Workflow Studio home page.

-

Create a new workflow.

-

Click on the Catalog menu, then Add Bucket as Extra Catalog Menu, and select the deep-learning-workflows bucket.

-

Open this added extra catalog menu and drag and drop the workflow example of your choice.

-

Execute the chosen workflow, track its progress and preview its results.

9.1. Azure Cognitive Services

The following workflows present useful examples composed of pre-built Azure cognitive services available in the azure-cognitive-services bucket.

Emotion_Detection_in_Bing_News: is a mashup that searches for images of a person using Azure Bing Image Search then performs an emotion detection using Azure Emotion API.

Sentiment_Analysis_in_Bing_News: is a mashup that searches for news related to a given search term using Azure Bing News API then performs a sentiment analysis using Azure Text Analytics API.

9.2. Microsoft Cognitive Toolkit

The following workflows present useful examples for predictive models training and test using Microsoft Cognitive Toolkit (CNTK).

CNTK_ConvNet: trains a Convolutional Neural Network (CNN) on CIFAR-10 dataset.

CNTK_SimpleNet: trains a 2-layer fully connected deep neural network with 50 hidden dimensions per layer.

GAN_Generate_Fake_MNIST_Images: generates fake MNIST images using a Generative Adversarial Network (GAN).

DCGAN_Generate_Fake_MNIST_Images: generates fake MNIST images using a Deep Convolutional Generative Adversarial Network (DCGAN).

9.3. Mixed Workflows

The following workflow presents an example of a workflow built using pre-built Azure cognitive services tasks available on the azure-cognitive-services bucket and custom AI tasks available on the deep-learning bucket.

Custom_Sentiment_Analysis: is a mashup that searches for news related to a given search term using Azure Bing News API then performs a sentiment analysis using a custom deep learning based pretrained model.

9.4. Custom AI Workflows - Pytorch library

The following workflows present examples of workflows built using custom AI tasks available on the deep-learning bucket. Such tasks enable you to train and test your own AI models by a simple drag and drop of custom AI task.

IMDB_Sentiment_Analysis: trains a model for opinions identification and categorization expressed in a piece of text, especially in order to determine the opinion of IMDB users regarding specific movies [positive or negative]. NOTE: Instead of training a model from scratch, you can use a pre-trained sentiment analysis model which is available on this link.

Image_Classification: uses a pre-trained deep neural network to classify ants and bees images. The pre-trained model is available on this link.

Language_Detection: involves building an RNN model from a lot of text data of respective languages and then identifying the test data (text) among the trained models.

Fake_Celebrity_Faces_Generation: generates a wide diversity of fake faces using a GAN model that was trained on thousands of real celebrity photos. The pre-trained GAN model is available on this link.

Image_Segmentation: predicts a segmentation model using the SegNet network on the Oxford-IIIT Pet Dataset. The pre-trained image segmentation model is available on this link.

Image_Object_Detection: detects objects using a pre-trained YOLO v3 model on COCO dataset proposed by Microsoft Research. The pre-trained model is available on this link.

| It is recommended to use a GPU-enabled node to run the deep learning tasks. |

10. References

10.1. ML Bucket

The machine-learning bucket contains diverse generic ML tasks that enable you to easily compose workflows for predictive models learning and testing. This bucket can be easily customized according to your needs.

This bucket offers different options: you can customize it by adding new tasks or updating the existing ones.

| All ML tasks were implemented using Scikit-learn library. |

10.1.1. Public Datasets

Load_Boston_Dataset

Task Overview: Load and return the Boston House-Prices dataset.

| Features | Targets | Dimensionality | Samples Total |
|---|---|---|---|
| Real, positive | Real 5. - 50. | 13 | 506 |

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | Specifies the Docker image that will be used to execute the task. | String [default="activeeon/dlm3"] |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |
| | Specifies how many rows of the dataframe will be previewed in the browser to check each task's results. | Int (default=-1) (-1 means preview all the rows) |

How to use this task:

-

Boston House-Prices is a regression dataset, so it should only be used with a regression algorithm, such as Linear Regression or Support Vector Regression.

-

After this task, you can use the Split_Data task to divide the dataset into training and testing sets.

| More information about this dataset can be found here. |

Load_Iris_Dataset

Task Overview: Load and return the iris dataset.

| Features | Classes | Dimensionality | Samples per class | Samples total |
|---|---|---|---|---|
| Real, positive | 3 | 4 | 50 | 150 |

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | Specifies the Docker image that will be used to execute the task. | String [default="activeeon/dlm3"] |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |
| | Specifies how many rows of the dataframe will be previewed in the browser to check each task's results. | Int (default=-1) (-1 means preview all the rows) |

How to use this task:

-

Iris is a classification dataset, so it should only be used with a classification algorithm, such as Support Vector Machines or Logistic Regression.

-

After this task, you can use the Split_Data task to divide the dataset into training and testing sets.

| More information about this dataset can be found here. |

10.1.2. Input and Output Data

Download_Model

Task Overview: Download a trained model to your computer.

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | Specifies the Docker image that will be used to execute the task. | String [default="activeeon/dlm3"] |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |

How to use this task: It should be used after the Train_Model tasks.

Export_Data

Task Overview: Export the results of the predictions generated by a classification, clustering or regression algorithm.

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | Specifies the Docker image that will be used to execute the task. | String [default="activeeon/dlm3"] |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |
| | Converts the prediction results to HTML, HYPER and CSV file. | String [CSV, JSON, HTML, TABLEAU] |
| | Specifies how many rows of the dataframe will be previewed in the browser to check each task's results. | Int (default=-1) (-1 means preview all the rows) |

How to use this task: It should be used after Predict_Model tasks.

Import_Data

Task Overview: Load data from external sources.

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | Specifies the Docker image that will be used to execute the task. | String [default="activeeon/dlm3"] |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |
| | Specifies how many rows of the dataframe will be previewed in the browser to check each task's results. | Int (default=-1) (-1 means preview all the rows) |
| | Enter the URL of the CSV file. | String |
| | Delimiter to use. | String |

| Your CSV file should be in a table format. See the example below. |

Import_Model

Task Overview: Load a trained model, and use it to make predictions for new coming data.

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | Specifies the Docker image that will be used to execute the task. | String [default="activeeon/dlm3"] |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |
| | Type the URL to load your trained model. default: https://s3.eu-west-2.amazonaws.com/activeeon-public/models/pima-indians-diabetes.model | String |

How to use this task: It should be used before Predict_Model to make predictions.

Preview_Results

Task Overview: Preview the HTML results of the predictions generated by a classification, clustering or regression algorithm.

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | Specifies the Docker image that will be used to execute the task. | String [default="activeeon/dlm3"] |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |
| | Specifies how many rows of the dataframe will be previewed in the browser to check each task's results. | Int (default=-1) (-1 means preview all the rows) |
| | Converts the prediction results to HTML or CSV file. | String [CSV, JSON or HTML] |

How to use this task: It should be used after Predict_Model tasks.

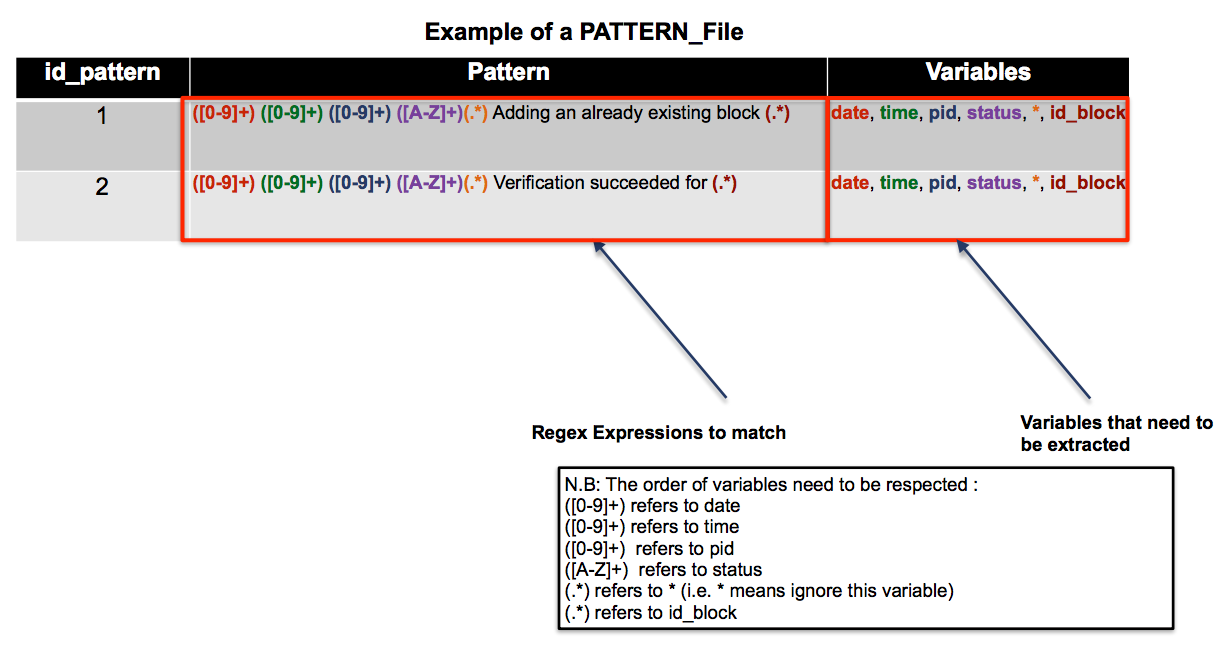

Log_Parser

Task Overview: Convert an unstructured raw log file into a structured one by matching a group of event patterns.

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |
| | Put the URL of the raw log file that you need to parse. | String |
| | Put the URL of the CSV file that contains the RegEx expression of each possible pattern and the corresponding variables. The CSV file must contain three columns (see the example below): A. id_pattern (Integer): the column containing the identifier of each pattern. B. Pattern (RegEx expression): the regex expression of each pattern. C. Variables (String): the name of each variable included in the pattern. N.B.: Use the symbol ‘*’ for variables that you need to neglect (e.g. in the example below the 5th variable is neglected). N.B.: All variables specified in each RegEx expression have to be mentioned in the column « Variables » in the right order (use ',' to separate the variable names). | String |
| | Indicate the extension of the file where the resulting structured logs will be saved. | String [CSV or HTML] |

How to use this task: Could be connected with Query_Data task and Feature_Vector_Extractor tasks.
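The pattern file described above can be pictured with the following sketch, which applies one (id_pattern, Pattern, Variables) row to a raw log line with Python's re module and skips variables marked with '*'. The parse_line function, the sample pattern and the sample log line are all invented for this illustration; the Log_Parser task's actual implementation may differ.

```python
import re


def parse_line(line, patterns):
    """Match a raw log line against (id_pattern, regex, variables) rows.

    Returns a dict for the first matching pattern, or None if nothing matches.
    """
    for pattern_id, regex, variables in patterns:
        match = re.match(regex, line)
        if match is None:
            continue
        names = [name.strip() for name in variables.split(",")]
        record = {"id_pattern": pattern_id}
        # Variable names are listed in the same order as the regex groups.
        for name, value in zip(names, match.groups()):
            if name != "*":  # '*' marks a variable to neglect
                record[name] = value
        return record
    return None


# One hypothetical row of the pattern file; the third variable is neglected.
patterns = [
    (1, r"(\S+) (\S+) user=(\w+) action=(\w+)", "date,time,*,action"),
]
line = "2019-01-01 10:00:00 user=alice action=login"

parsed = parse_line(line, patterns)
print(parsed)
```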

10.1.3. Data Preprocessing

Append_Data

Task Overview: Append rows of other to the end of this frame, returning a new object. Columns not in this frame are added as new columns.

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | Specifies the Docker image that will be used to execute the task. | String [default="activeeon/dlm3"] |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |

Drop_Columns

Task Overview: Drop the columns specified in COLUMNS_NAME variable.

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | Specifies the Docker image that will be used to execute the task. | String [default="activeeon/dlm3"] |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |
| | The list of columns that need to be dropped. Column names should be separated by a comma. | String |

| More details about the source code of this task can be found here. |

Drop_NaNs

Task Overview: First replace inf values with NaNs, then drop objects on a given axis where alternately any or all of the data are missing.

| More details about the source code of this task can be found here. |
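The two steps of Drop_NaNs can be sketched in plain Python as follows. This is only an illustration of the idea on a list of rows: the drop_nans function and its how argument are assumptions made for this example, and the task itself works on dataframes.

```python
import math


def _is_missing(value):
    """True for None and for float NaN."""
    return value is None or (isinstance(value, float) and math.isnan(value))


def drop_nans(rows, how="any"):
    """Replace inf values with NaN, then drop rows with missing data.

    how="any" drops a row if any value is missing; how="all" drops it
    only if every value is missing.
    """
    cleaned = []
    for row in rows:
        # Step 1: turn +inf and -inf into NaN.
        row = [
            float("nan") if isinstance(v, float) and math.isinf(v) else v
            for v in row
        ]
        # Step 2: decide whether the row is dropped.
        missing = [_is_missing(v) for v in row]
        drop = any(missing) if how == "any" else all(missing)
        if not drop:
            cleaned.append(row)
    return cleaned


rows = [
    [1.0, 2.0],
    [float("inf"), 3.0],
    [float("nan"), float("nan")],
]
strict = drop_nans(rows, how="any")
lenient = drop_nans(rows, how="all")
print(strict)
print(lenient)
```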

Encode_Data

Task Overview: Encode the values of the columns specified in COLUMNS_NAME variable with integer values between 0 and "the number of unique variables"-1.

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | Specifies the Docker image that will be used to execute the task. | String [default="activeeon/dlm3"] |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |
| | The list of columns that need to be encoded. Column names should be separated by a comma. | String |

| More details about the source code of this task can be found here. |
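As an illustration of this kind of encoding, the sketch below maps the distinct values of each selected column to integers from 0 to the number of unique values minus 1. The encode_columns function and the sample rows are hypothetical, and assigning codes in sorted order is an assumption; the task's own implementation is not reproduced here.

```python
def encode_columns(rows, columns_name):
    """Encode the listed columns of a list of dict rows with integer codes.

    columns_name is a comma-separated string, like the COLUMNS_NAME variable.
    Returns (encoded_rows, mappings).
    """
    columns = [name.strip() for name in columns_name.split(",")]
    mappings = {}
    for column in columns:
        # Assumption: codes follow the sorted order of the unique values.
        unique_values = sorted({row[column] for row in rows})
        mappings[column] = {value: code for code, value in enumerate(unique_values)}
    encoded = [
        {
            key: mappings[key][value] if key in mappings else value
            for key, value in row.items()
        }
        for row in rows
    ]
    return encoded, mappings


rows = [
    {"color": "red", "size": 3},
    {"color": "blue", "size": 5},
    {"color": "red", "size": 7},
]
encoded, mappings = encode_columns(rows, "color")
print(mappings)
print(encoded)
```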

Fill_NaNs

Task Overview: Fill NA/NaN values using the specified method.

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | Specifies the Docker image that will be used to execute the task. | String [default="activeeon/dlm3"] |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |
| | Refers to the value to use to fill holes (e.g. 0). | Integer |

| More details about the source code of this task can be found here. |

Filter_Columns

Task Overview: Subset the columns of a dataframe according to the list of columns specified in the COLUMNS_NAME variable.

Task Variables:

| Variable name | Description | Type |
|---|---|---|
| | If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. | Boolean (default=True) |
| | Specifies the Docker image that will be used to execute the task. | String [default="activeeon/dlm3"] |
| | If False, the task will be ignored and will not be executed. | Boolean (default=True) |
| | The list of columns to restrict to. Column names should be separated by a comma. | String |

| More details about the source code of this task can be found here. |

Merge_Data

Task Overview: Merge DataFrame objects by performing a database-style join operation based on a specific reference column specified in the REF_COLUMN variable.

Task Variables:

Variable name |

Description |

Type |

|

If True, the tasks will be executed on a Docker container. activeeon/dlm3 image is used by default. If False, the required libraries should be installed in the different nodes that will be used. |

Boolean (default=True) |

|

Specifies the docker image that will be used to execute the task. |

String [default="activeeon/dlm3"] |

|

If False, the task will be ignored and will not be executed. |

Boolean (default=True) |

|

The list of columns to restrict to. Column names should be separated by a comma. |

String |

| More details about the source code of this task can be found here. |
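A database-style join on a reference column can be sketched with `pandas.merge`. The example assumes an inner join, which is the pandas default; the join type the task actually uses is not stated here. The two dataframes and the Python variable `ref_column` are hypothetical.

```python
import pandas as pd

# Two hypothetical dataframes sharing the reference column "id".
left = pd.DataFrame({"id": [1, 2, 3], "x": [10, 20, 30]})
right = pd.DataFrame({"id": [2, 3, 4], "y": ["b", "c", "d"]})

# Example value for the REF_COLUMN variable.
ref_column = "id"

# Inner join (pandas default): keep only rows whose key exists in both inputs.
merged = pd.merge(left, right, on=ref_column)
print(merged)
```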

Scale_Data

Task Overview: Scale a dataset based on a robust scaler or standard scaler.

Task Variables:

Variable name |

Description |

Type |

|

If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. |

Boolean (default=True) |

|

Specifies the docker image that will be used to execute the task. |

String [default="activeeon/dlm3"] |

|

If False, the task will be ignored and will not be executed. |

Boolean (default=True) |

|

The scaler that will be used to scale the data. |

List [RobustScaler, StandardScaler] (default=RobustScaler) |

|

Specifies how many rows of the dataframe will be previewed in the browser to check each task results. |

Int (default=-1) (-1 means preview all the rows) |

|

The list of columns that will be scaled. Column names should be separated by a comma. |

String |

| More details about the source code of this task can be found here. |
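To illustrate the difference between the two scalers, the sketch below reimplements their usual definitions directly in pandas: standard scaling subtracts the mean and divides by the population standard deviation, while robust scaling subtracts the median and divides by the interquartile range. The helper functions and sample data are hypothetical, and the task itself may rely on a library implementation instead.

```python
import pandas as pd

# Hypothetical data with one outlier in column "x".
df = pd.DataFrame({"x": [1.0, 2.0, 3.0, 4.0, 100.0], "label": list("abcde")})

# Example comma-separated list of columns to scale.
columns = [c.strip() for c in "x".split(",")]

def standard_scale(s):
    # Zero mean, unit variance (population standard deviation, ddof=0).
    return (s - s.mean()) / s.std(ddof=0)

def robust_scale(s):
    # Centre on the median and divide by the interquartile range,
    # which makes the result less sensitive to outliers.
    iqr = s.quantile(0.75) - s.quantile(0.25)
    return (s - s.median()) / iqr

standard = df.copy()
standard[columns] = standard[columns].apply(standard_scale)

robust = df.copy()
robust[columns] = robust[columns].apply(robust_scale)

print(standard)
print(robust)
```

With robust scaling, the four ordinary values stay within a small range and only the outlier is far from zero, which is why it is often preferred for data with extreme values.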

Split_Data

Task Overview: Separate data into train and test subsets.

Task Variables:

Variable name |

Description |

Type |

|

If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. |

Boolean (default=True) |

|

Specifies the docker image that will be used to execute the task. |

String [default="activeeon/dlm3"] |

|

If False, the task will be ignored and will not be executed. |

Boolean (default=True) |

|

This parameter must be a float in the range (0.0, 1.0), excluding 0.0 and 1.0. Default: 0.7. |

Float |

How to use this task: It should be used before Train and Predict tasks.

| More details about the source code of this task can be found here. |
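A train/test split driven by a ratio in (0.0, 1.0) can be sketched as follows. The name `train_size`, the assumption that the ratio denotes the training share, and the fixed random seed are all illustrative choices, not details confirmed by the task's documentation.

```python
import pandas as pd

# Hypothetical dataset with 10 rows.
df = pd.DataFrame({"x": range(10), "y": [0, 1] * 5})

# Hypothetical name for the split ratio; assumed to be the training share.
train_size = 0.7
if not 0.0 < train_size < 1.0:
    raise ValueError("the ratio must be strictly between 0.0 and 1.0")

# Randomly sample the training rows, then use the remaining rows for testing.
train = df.sample(frac=train_size, random_state=0)
test = df.drop(train.index)

print(len(train), len(test))
```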

Rename_Columns

Task Overview: Rename the columns of a data frame.

Task Variables:

Variable name |

Description |

Type |

|

If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. |

Boolean (default=True) |

|

Specifies the docker image that will be used to execute the task. |

String [default="activeeon/dlm3"] |

|

If False, the task will be ignored and will not be executed. |

Boolean (default=True) |

|

The list of columns that will be renamed. Column names should be separated by a comma. |

String |
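Renaming columns in pandas is typically done with a mapping from old to new names. The sketch below assumes such a mapping; how the task receives the new names is not described here, so `mapping` and the sample data are purely hypothetical.

```python
import pandas as pd

# Hypothetical input data.
df = pd.DataFrame({"c1": [1], "c2": [2]})

# Hypothetical mapping from current column names to new ones.
mapping = {"c1": "customer_id"}

# Columns absent from the mapping keep their original names.
renamed = df.rename(columns=mapping)
print(list(renamed.columns))
```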

Query_Data

Task Overview: Query the columns of your data with a boolean expression.

Task Variables:

Variable name |

Description |

Type |

|

If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. |

Boolean (default=True) |

|

Specifies the docker image that will be used to execute the task. |

String [default="activeeon/dlm3"] |

|

If False, the task will be ignored and will not be executed. |

Boolean (default=True) |

|

The query string to evaluate. |

String |

|

Refers to the extension of the file where the resulting filtered data will be saved. |

String [CSV or HTML] |

| More details about the source code of this task can be found here. |
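Querying columns with a boolean expression resembles what `pandas.DataFrame.query` offers. The sketch below assumes the query string follows that syntax; the sample data and the Python variable names are hypothetical.

```python
import pandas as pd

# Hypothetical input data.
df = pd.DataFrame({
    "id": [1, 2, 3, 4],
    "age": [25, 35, 45, 31],
    "city": ["Paris", "Paris", "Lyon", "Paris"],
})

# Example query string: a boolean expression over column names.
query = "age > 30 and city == 'Paris'"

# Keep only the rows for which the expression is true.
filtered = df.query(query)

# The filtered data can then be serialized, for example as CSV text.
csv_text = filtered.to_csv(index=False)
print(csv_text)
```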

10.1.4. Feature Extraction

Summarize_Data

Task Overview: Calculate the histogram of a dataframe based on a reference column that must be specified in the REF_COLUMN variable.

Task Variables:

Variable name |

Description |

Type |

|

If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. |

Boolean (default=True) |

|

Specifies the docker image that will be used to execute the task. |

String [default="activeeon/dlm3"] |

|

If False, the task will be ignored and will not be executed. |

Boolean (default=True) |

|

The model that will be used to summarize data. |

List [KMeans, PolynomialFeatures] (default=KMeans) |

|

The column that will be used to group by the different histogram measures. |

String |

| More details about the source code of this task can be found here. |

Tsfresh_Features_Extraction

Task Overview: Calculate a comprehensive number of time series features based on the library TSFRESH.

Task Variables:

Variable name |

Description |

Type |

|

If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. |

Boolean (default=True) |

|

Specifies the docker image that will be used to execute the task. |

String [default="activeeon/dlm3"] |

|

If False, the task will be ignored and will not be executed. |

Boolean (default=True) |

|

The column that contains the values of the time series |

String |

|

The column that will be used to group by the different features. |

String |

|

False if you do not need to extract all the features that the TSFRESH library can extract. |

Boolean [default = False] |

|

Specifies how many rows of the dataframe will be previewed in the browser to check each task results. |

Int (default=-1) (-1 means preview all the rows) |

| More details about the source code of this task can be found here. |

Feature_Vector_Extractor

Task Overview: Encode structured data into numerical feature vectors to which ML models can be applied.

Task Variables:

Variable name |

Description |

Type |

|

If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. |

Boolean (default=True) |

|

Specifies the docker image that will be used to execute the task. |

String [default="activeeon/dlm3"] |

|

If False, the task will be ignored and will not be executed. |

Boolean (default=True) |

|

The ID of the entity that you need to represent (to group by). |

String |

|

The extension of the file where the resulting features will be saved. |

String [CSV or HTML] |

|

The index of the column containing the log patterns (specific to feature extraction from logs). |

String |

|

True if you need to count the number of occurrences of each pattern per session. |

Boolean [True or False] |

|

The variables to consider when extracting features according to their content. N.B.: separate the variables with a comma ','. |

String |

|

Refers to the variables to consider when counting their distinct content. |

String N.B: separate the different variables with a comma ',' |

|

True if you need to extract state and count features per session. |

Boolean [True or False] |

How to use this task: Could be connected with Train_Model if you need to train a model using unsupervised ML techniques.

10.1.5. AutoML

TPOT_Classifier

Task Overview: TPOT_Classifier performs an intelligent search over ML pipelines that can contain supervised classification models, preprocessors, feature selection techniques, and any other estimator or transformer that follows the scikit-learn API.

Task Variables:

Variable name |

Description |

Type |

|

If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. |

Boolean (default=True) |

|

Specifies the docker image that will be used to execute the task. |

String [default="activeeon/dlm3"] |

|

If True, the code of this task will be executed. |

Boolean (default=True) |

|

Number of iterations to run the pipeline optimization process. |

Integer (default=3) |

|

Function used to evaluate the quality of a given pipeline for the classification problem. |

List (default=accuracy) |

|

Cross-validation strategy used when evaluating pipelines. |

Integer (default=5) |

|

How much information TPOT communicates while it’s running. Possible inputs: 0, 1, 2, 3. |

Integer (default=1) |

How to use this task: It should be connected with Train_Model.

| More information about this task can be found here. |

AutoSklearn_Classifier

Task Overview: AutoSklearn_Classifier leverages recent advances in Bayesian optimization, meta-learning and ensemble construction to perform an intelligent search over ML classification algorithms.

Task Variables:

Variable name |

Description |

Type |

|

If True, the task will be executed in a Docker container. The activeeon/dlm3 image is used by default. If False, the required libraries must be installed on the nodes that will be used. |

Boolean (default=True) |

|

Specifies the docker image that will be used to execute the task. |

String [default="activeeon/dlm3"] |

|

If True, the code of this task will be executed. |

Boolean (default=True) |

|

Time limit in seconds for the search of appropriate models. |

Integer (default=30) |

|

Time limit for a single call to the ML model. Model fitting will be stopped if the ML algorithm runs over the time limit. |

Integer (default=27) |

|

If True, the defined resampling strategy will be applied. |

Boolean (default=True) |

|

Strategy to handle overfitting. |

String [default='cv'] |

|

Number of folds for cross-validation. |

Integer (default=5) |

How to use this task: It should be connected with Train_Model.

|

TPOT_Regressor