All documentation links

ProActive Workflows & Scheduling (PWS)

-

PWS User Guide

(Workflows, Workload automation, Jobs, Tasks, Catalog, Resource Management, Big Data/ETL, …) PWS Modules

Job Planner

(Time-based Scheduling)Cloud Watch

(Event-based Scheduling)Cloud Automation

(PaaS On-Demand, Service deployment and management)

PWS Admin Guide

(Installation, Infrastructure & Nodes setup, Agents,…)

Machine Learning Open Studio (MLOS)

MLOS User Guide

(a complete Data Science and Machine Learning Platform)

1. Overview

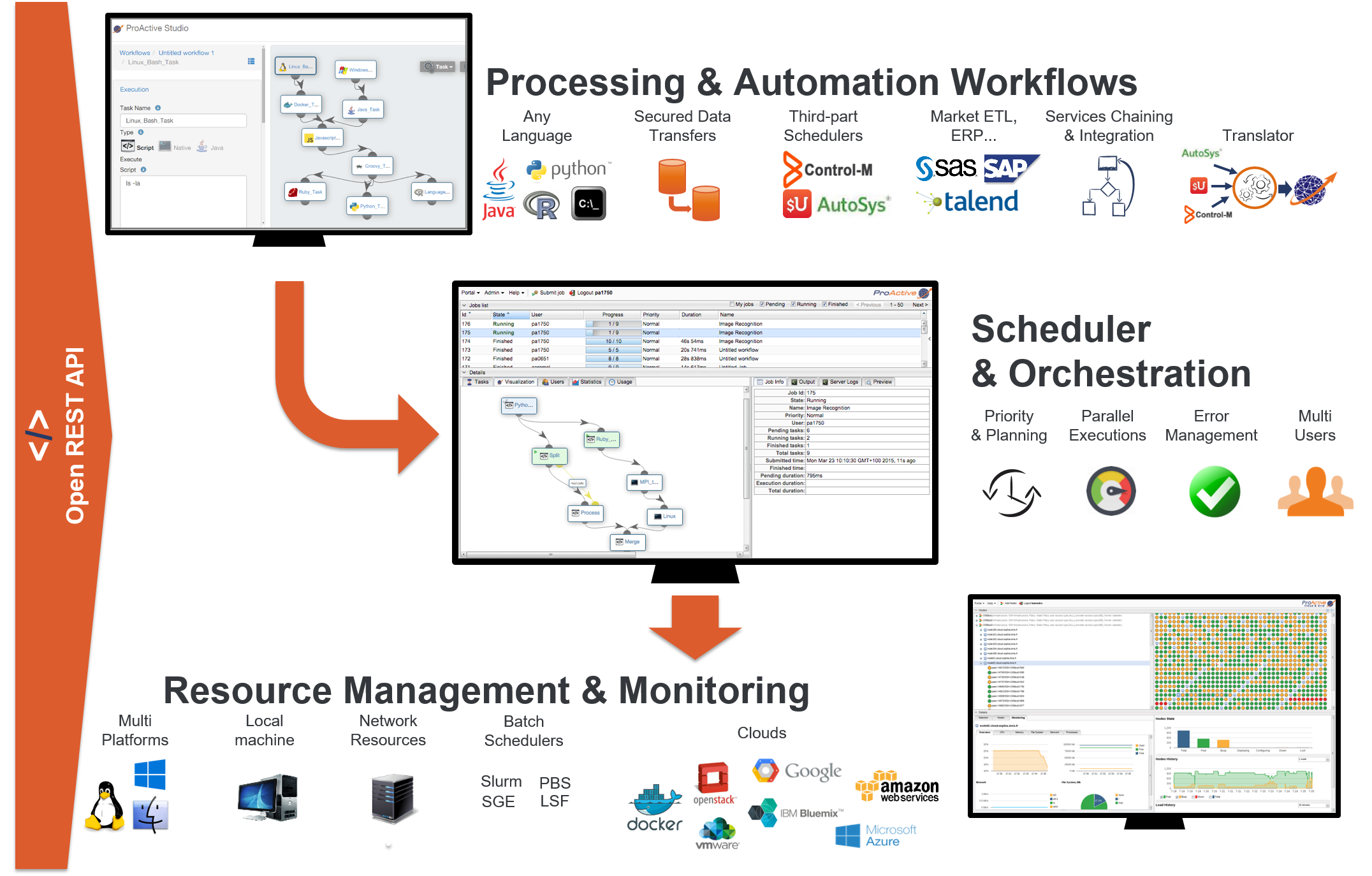

ProActive Scheduler is a comprehensive Open Source job scheduler and Orchestrator, also featuring Workflows and Resource Management. The user specifies the computation in terms of a series of computation steps along with their execution and data dependencies. The Scheduler executes this computation on a cluster of computation resources, each step on the best-fit resource and in parallel wherever its possible.

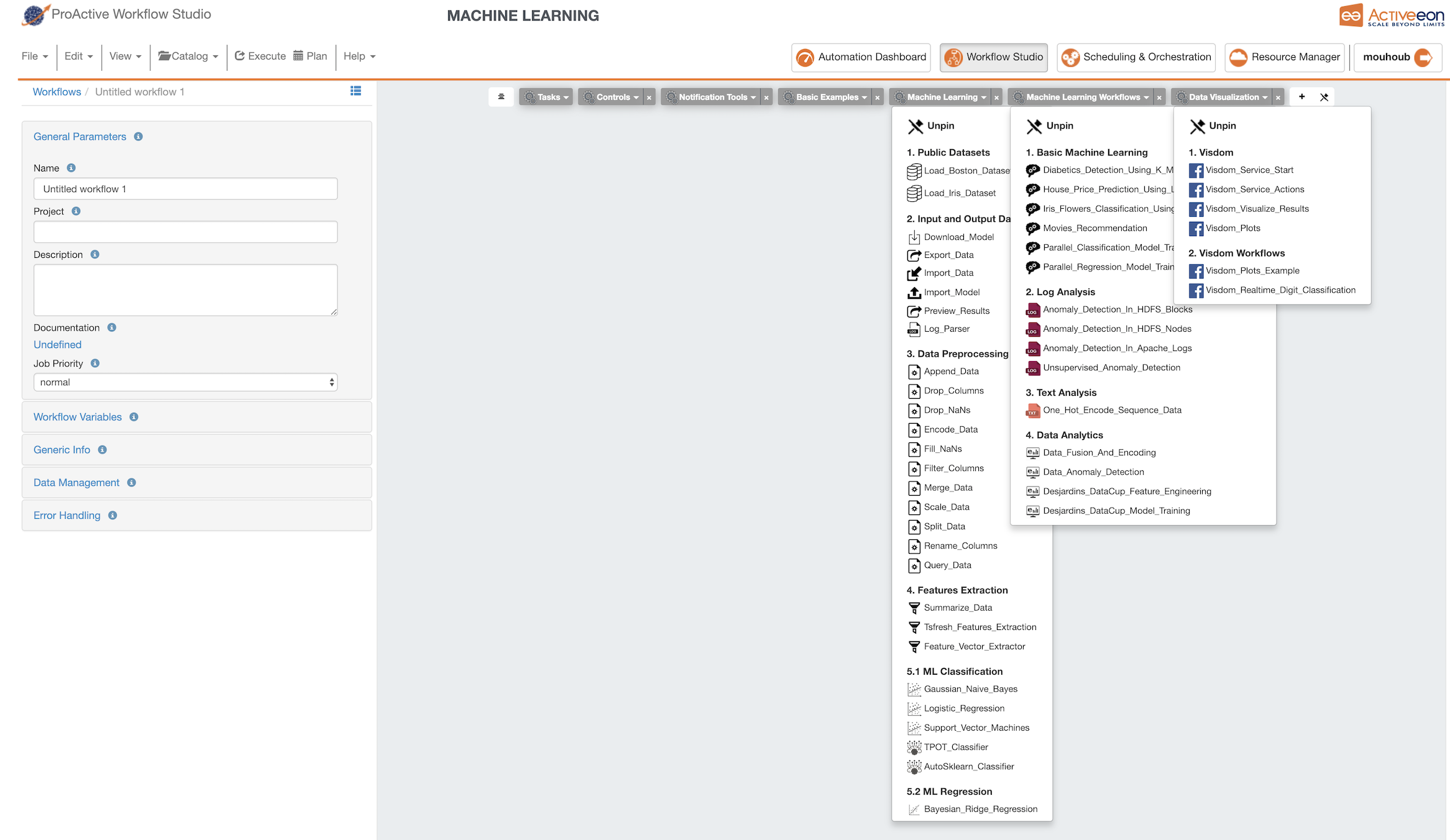

On the top left there is the Studio interface which allows you to build Workflows. It can be interactively configured to address specific domains, for instance Finance, Big Data, IoT, Artificial Intelligence (AI). See for instance the Documentation of Machine Learning Open Studio here, and try it online here. In the middle there is the Scheduler which enables an enterprise to orchestrate and automate Multi-users, Multi-application Jobs. Finally, at the bottom right is the Resource manager interface which manages and automates resource provisioning on any Public Cloud, on any virtualization software, on any container system, and on any Physical Machine of any OS. All the components you see come with fully Open and modern REST APIs.

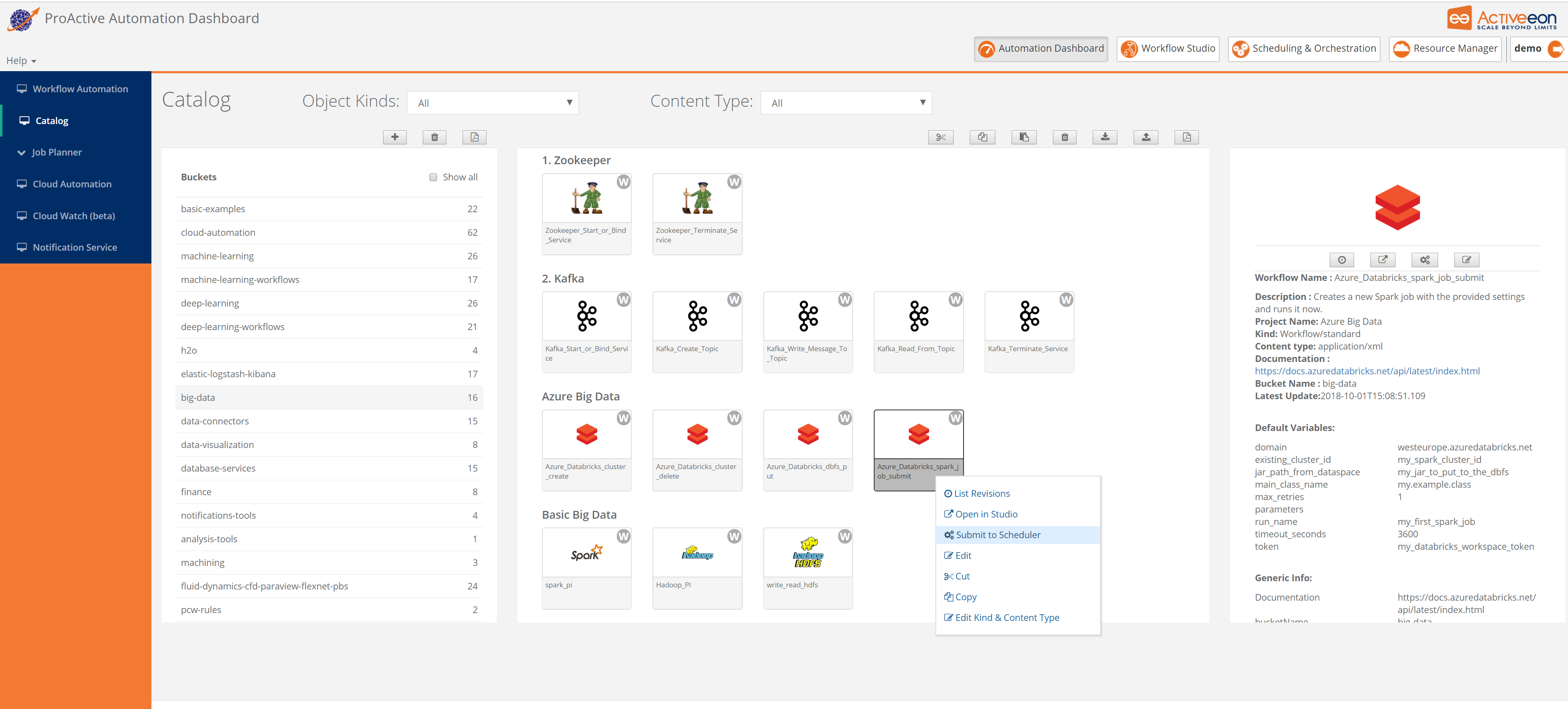

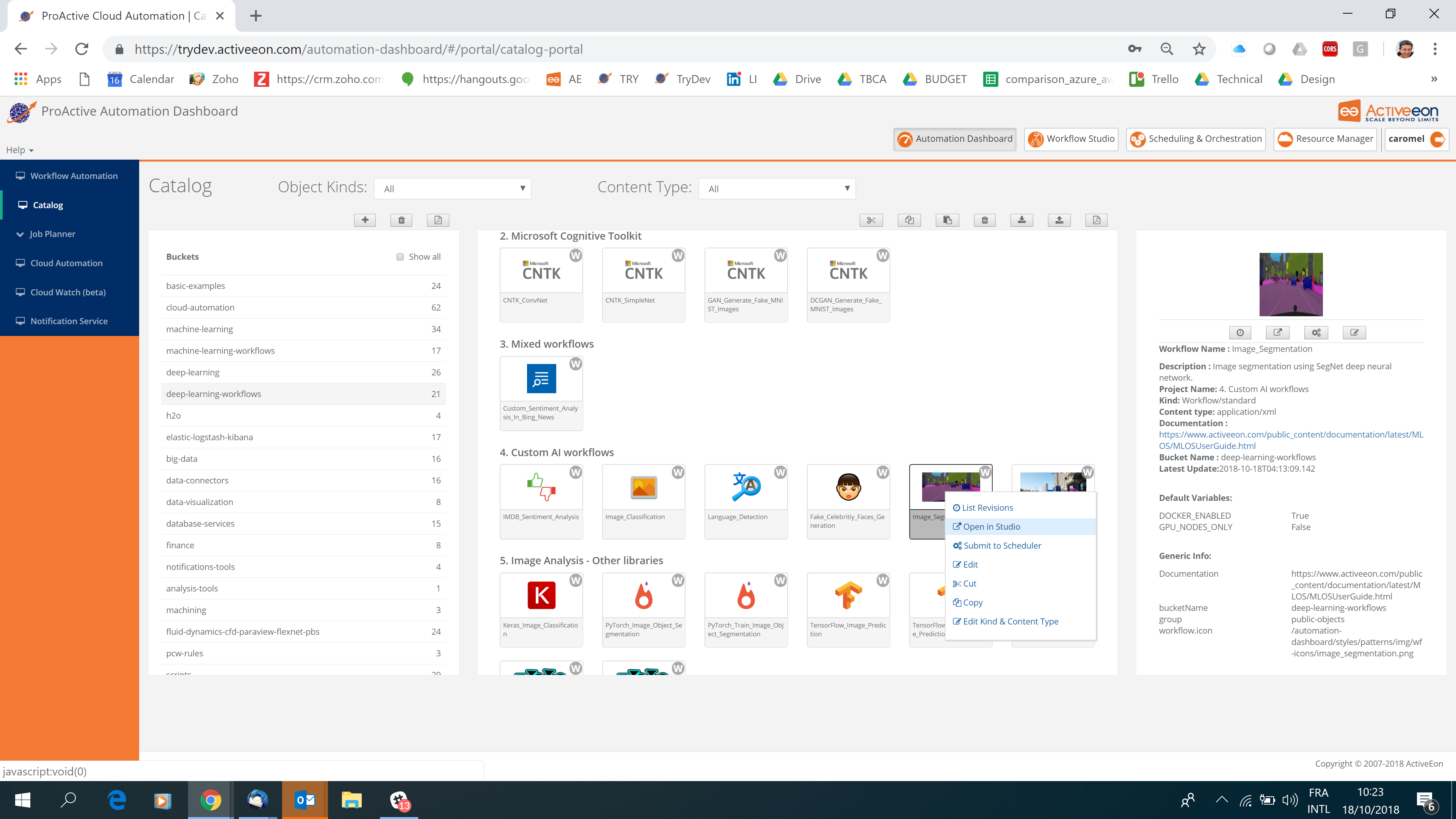

The screenshot above shows the Catalog Portal, where one can store Workflows, Calendars, Scripts, etc. Powerful versioning together with full access control (RBAC) is supported, and users can share easily Workflows and templates between teams, and various environments (Dev, Test, Staging and Prod).

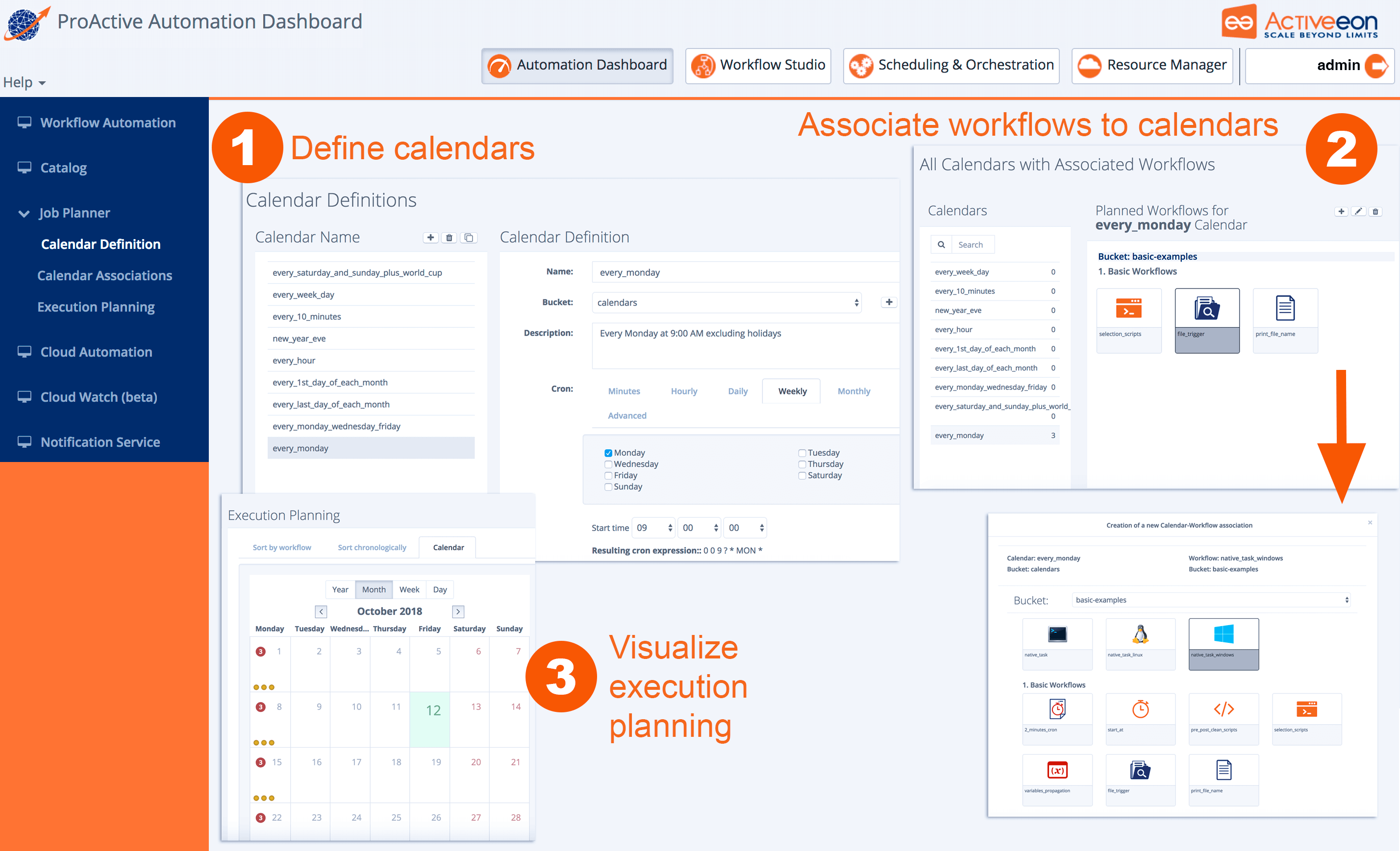

The screenshot above shows the Job Planner Portal, allowing to automate and schedule recurring Jobs. From left to right, you can define and use Calendar Definitions , associate Workflows to calendars, visualize the execution planning for the future, as well as actual executions of the past.

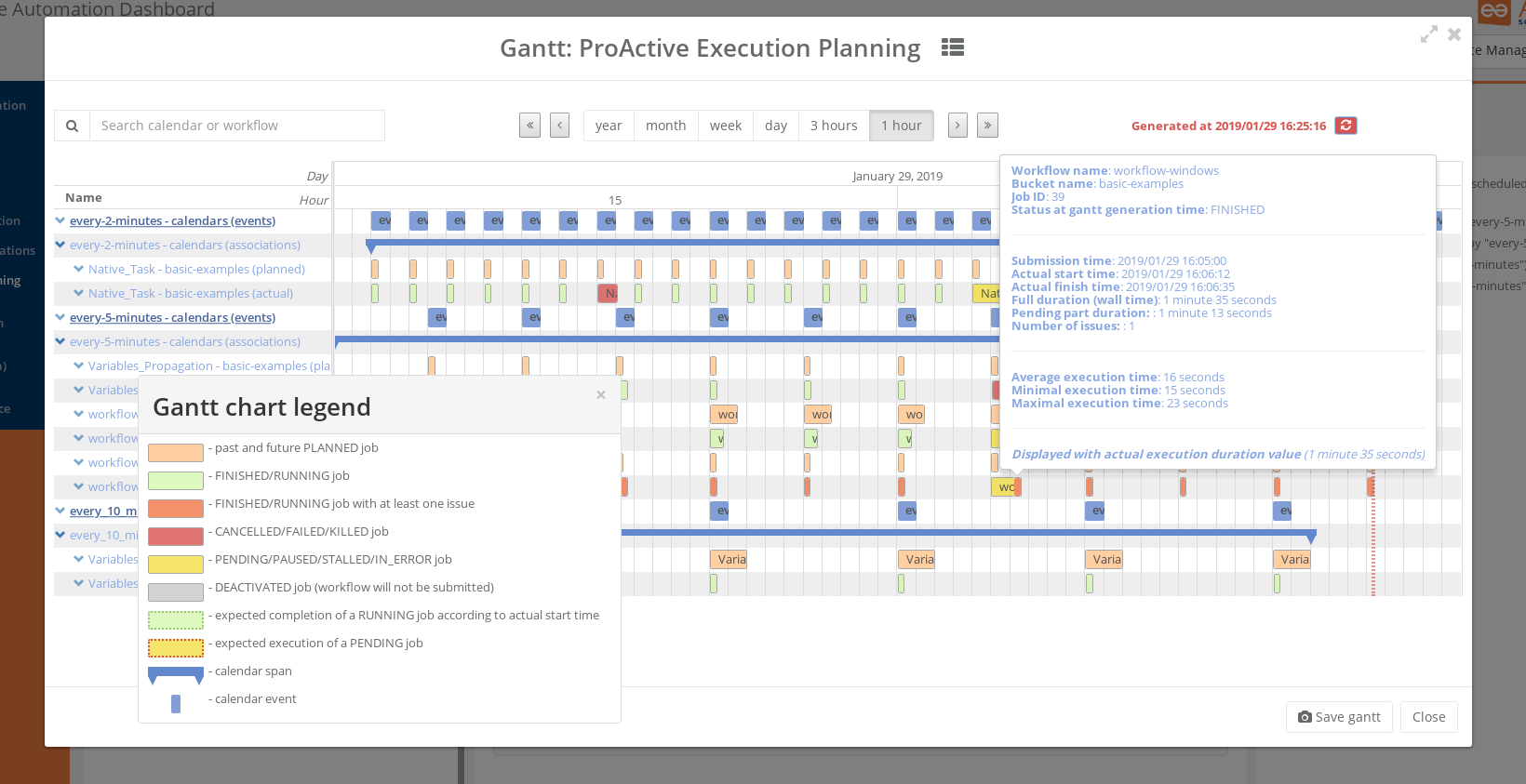

The Gantt screenshot above shows the Gantt View of Job Planner, featuring past job history, current Job being executed, and future Jobs that will be submitted, all in a comprehensive interactive view. You easily see the potential differences between Planned Submission Time and Actual Start Time of the Jobs, get estimations of the Finished Time, visualize the Job that stayed PENDING for some time (in Yellow) and the Jobs that had issues and got KILLED, CANCELLED, or FAILED (in red).

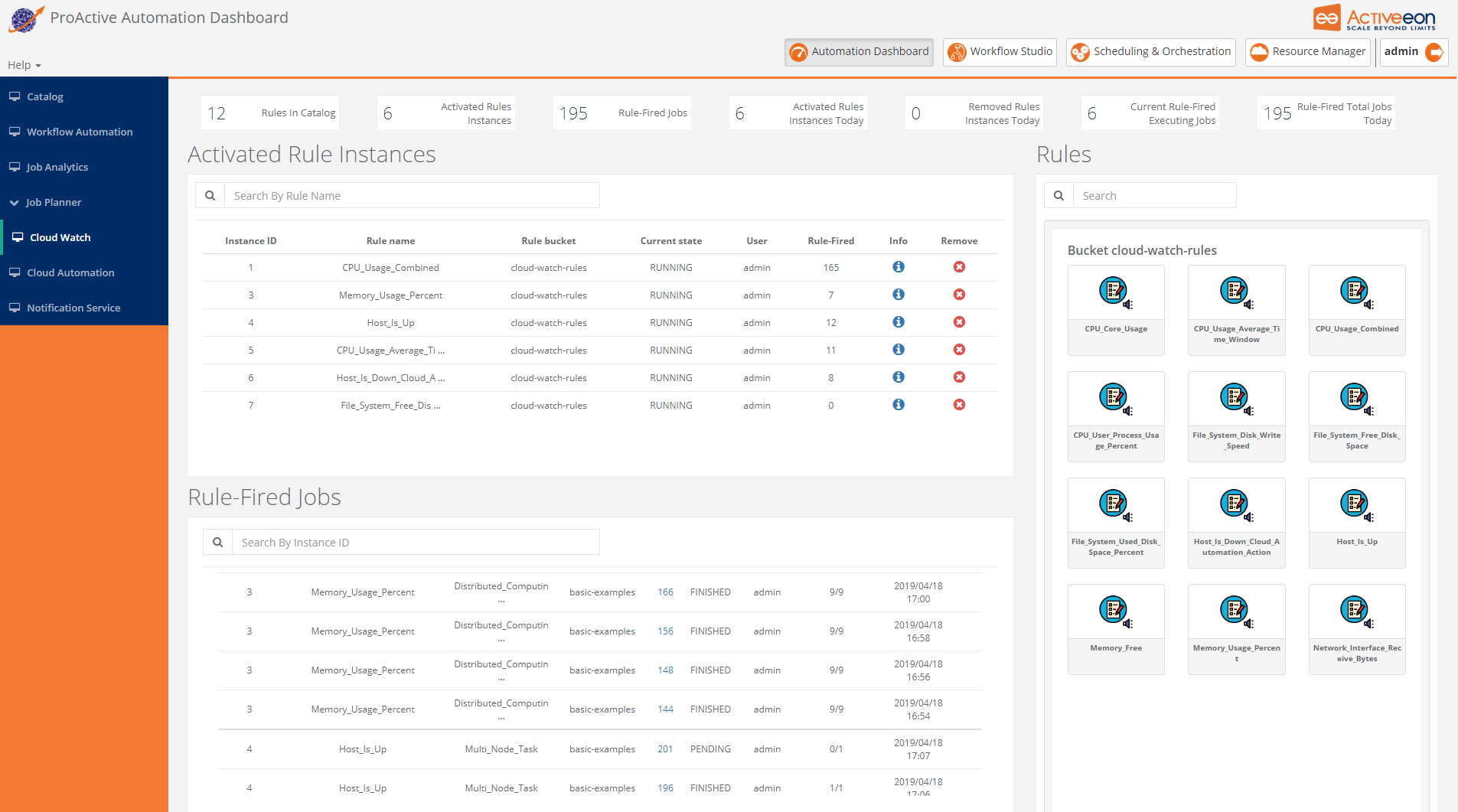

The screenshot above shows the Cloud Watch portal, where a user can manage and benefit from Cloud Watch - smart monitoring system. This ProActive component detects complex events and then triggers user-specified actions according to predefined set of rules.

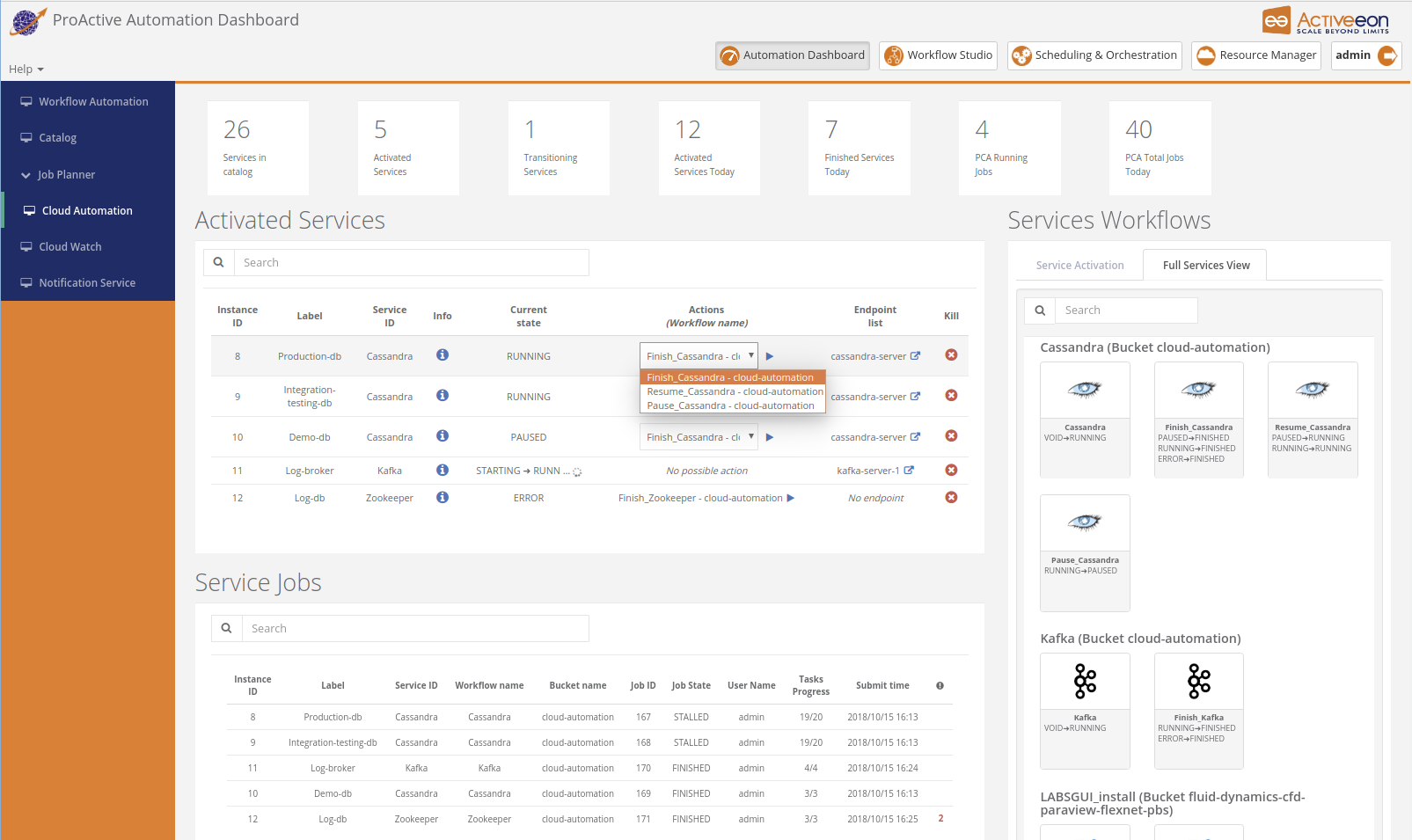

The screenshot above shows the Cloud Automation portal which is actually a PaaS automation tool. It allows you to easily manage any On-Demand Services with full Life-Cycle Management (create, deploy, suspend, resume and terminate).

The screenshots above taken from the Resource Manager portal shows that you can configure ProActive Scheduler to dynamically scale up and down the infrastructure being used (e.g. the number of VMs you buy on Clouds) according to the actual workload to execute.

1.1. Glossary

The following terms are used throughout the documentation:

- ProActive Workflows & Scheduling

-

The full distribution of ProActive for Workflows & Scheduling, it contains the ProActive Scheduler server, the REST & Web interfaces, the command line tools. It is the commercial product name.

- ProActive Scheduler

-

Can refer to any of the following:

-

A complete set of ProActive components.

-

An archive that contains a released version of ProActive components, for example

activeeon_enterprise-pca_server-OS-ARCH-VERSION.zip. -

A set of server-side ProActive components installed and running on a Server Host.

-

- Resource Manager

-

ProActive component that manages ProActive Nodes running on Compute Hosts.

- Scheduler

-

ProActive component that accepts Jobs from users, orders the constituent Tasks according to priority and resource availability, and eventually executes them on the resources (ProActive Nodes) provided by the Resource Manager.

| Please note the difference between Scheduler and ProActive Scheduler. |

- REST API

-

ProActive component that provides RESTful API for the Resource Manager, the Scheduler and the Catalog.

- Resource Manager Web Interface

-

ProActive component that provides a web interface to the Resource Manager. Also called Resource Manager Portal.

- Scheduler Web Interface

-

ProActive component that provides a web interface to the Scheduler. Also called Scheduler Portal.

- Workflow Studio

-

ProActive component that provides a web interface for designing Workflows.

- Job Planner

-

A ProActive component providing advanced scheduling options for Workflows.

- Job Planner Portal

-

A ProActive portal to manage the Job Planner service.

- Bucket

-

ProActive notion used with the Catalog to refer to a specific collection of ProActive Objects and in particular ProActive Workflows.

- Server Host

-

The machine on which ProActive Scheduler is installed.

SCHEDULER_ADDRESS-

The IP address of the Server Host.

- ProActive Node

-

One ProActive Node can execute one Task at a time. This concept is often tied to the number of cores available on a Compute Host. We assume a task consumes one core (more is possible, see multi-nodes tasks, so on a 4 cores machines you might want to run 4 ProActive Nodes. One (by default) or more ProActive Nodes can be executed in a Java process on the Compute Hosts and will communicate with the ProActive Scheduler to execute tasks. We distinguish two types of ProActive Nodes:

-

Server ProActive Nodes: Nodes that are running in the same host as ProActive server;

-

Remote ProActive Nodes: Nodes that are running on machines other than ProActive Server.

-

- Compute Host

-

Any machine which is meant to provide computational resources to be managed by the ProActive Scheduler. One or more ProActive Nodes need to be running on the machine for it to be managed by the ProActive Scheduler.

|

Examples of Compute Hosts:

|

- Node Source

-

A set of ProActive Nodes deployed using the same deployment mechanism and sharing the same access policy.

- Node Source Infrastructure

-

The configuration attached to a Node Source which defines the deployment mechanism used to deploy ProActive Nodes.

- Node Source Policy

-

The configuration attached to a Node Source which defines the ProActive Nodes acquisition and access policies.

- Scheduling Policy

-

The policy used by the ProActive Scheduler to determine how Jobs and Tasks are scheduled.

PROACTIVE_HOME-

The path to the extracted archive of ProActive Scheduler release, either on the Server Host or on a Compute Host.

- Workflow

-

User-defined representation of a distributed computation. Consists of the definitions of one or more Tasks and their dependencies.

- Workflow Revision

-

ProActive concept that reflects the changes made on a Workflow during it development. Generally speaking, the term Workflow is used to refer to the latest version of a Workflow Revision.

- Generic Information

-

Are additional information which are attached to Workflows or Tasks. See generic information.

- Calendar Definition

-

Is a json object attached by adding it to the Generic Information of a Workflow.

- Job

-

An instance of a Workflow submitted to the ProActive Scheduler. Sometimes also used as a synonym for Workflow.

- Job Id

-

An integer identifier which uniquely represents a Job inside the ProActive Scheduler.

- Job Icon

-

An icon representing the Job and displayed in portals. The Job Icon is defined by the Generic Information workflow.icon.

- Task

-

A unit of computation handled by ProActive Scheduler. Both Workflows and Jobs are made of Tasks. A Task must define a ProActive Task Executable and can also define additional task scripts

- Task Id

-

An integer identifier which uniquely represents a Task inside a Job ProActive Scheduler. Task ids are only unique inside a given Job.

- Task Executable

-

The main executable definition of a ProActive Task. A Task Executable can either be a Script Task, a Java Task or a Native Task.

- Script Task

-

A Task Executable defined as a script execution.

- Java Task

-

A Task Executable defined as a Java class execution.

- Native Task

-

A Task Executable defined as a native command execution.

- Additional Task Scripts

-

A collection of scripts part of a ProActive Task definition which can be used in complement to the main Task Executable. Additional Task scripts can either be Selection Script, Fork Environment Script, Pre Script, Post Script, Control Flow Script or Cleaning Script

- Selection Script

-

A script part of a ProActive Task definition and used to select a specific ProActive Node to execute a ProActive Task.

- Fork Environment Script

-

A script part of a ProActive Task definition and run on the ProActive Node selected to execute the Task. Fork Environment script is used to configure the forked Java Virtual Machine process which executes the task.

- Pre Script

-

A script part of a ProActive Task definition and run inside the forked Java Virtual Machine, before the Task Executable.

- Post Script

-

A script part of a ProActive Task definition and run inside the forked Java Virtual Machine, after the Task Executable.

- Control Flow Script

-

A script part of a ProActive Task definition and run inside the forked Java Virtual Machine, after the Task Executable, to determine control flow actions.

- Control Flow Action

-

A dynamic workflow action performed after the execution of a ProActive Task. Possible control flow actions are Branch, Loop or Replicate.

- Branch

-

A dynamic workflow action performed after the execution of a ProActive Task similar to an IF/THEN/ELSE structure.

- Loop

-

A dynamic workflow action performed after the execution of a ProActive Task similar to a FOR structure.

- Replicate

-

A dynamic workflow action performed after the execution of a ProActive Task similar to a PARALLEL FOR structure.

- Cleaning Script

-

A script part of a ProActive Task definition and run after the Task Executable and before releasing the ProActive Node to the Resource Manager.

- Script Bindings

-

Named objects which can be used inside a Script Task or inside Additional Task Scripts and which are automatically defined by the ProActive Scheduler. The type of each script binding depends on the script language used.

- Task Icon

-

An icon representing the Task and displayed in the Studio portal. The Task Icon is defined by the Task Generic Information task.icon.

- ProActive Agent

-

A daemon installed on a Compute Host that starts and stops ProActive Nodes according to a schedule, restarts ProActive Nodes in case of failure and enforces resource limits for the Tasks.

2. Get started

To submit your first computation to ProActive Scheduler, install it in your environment (default credentials: admin/admin) or just use our demo platform try.activeeon.com.

ProActive Scheduler provides comprehensive interfaces that allow to:

-

Create workflows using ProActive Workflow Studio

-

Submit workflows, monitor their execution and retrieve the tasks results using ProActive Scheduler Portal

-

Add resources and monitor them using ProActive Resource Manager Portal

-

Version and share various objects using ProActive Catalog Portal

-

Provide an end-user workflow submission interface using Workflow Automation Portal

-

Generate metrics of multiple job executions using Job Analytics Portal

-

Plan workflow executions over time using Job Planner Portal

-

Add services using Cloud Automation Portal

-

Perform event based scheduling using Cloud Watch Portal

-

Control manual workflows validation steps using Notification Portal

We also provide a REST API. and command line interfaces for advanced users.

3. Create and run your computation

3.1. Jobs, workflows and tasks

In order to use Scheduler for executing various computations, one needs to write the execution definition also known as the Workflow definition. A workflow definition is an XML file that adheres to XML schema for ProActive Workflows.

It specifies a number of XML tags for specifying execution steps, their sequence and dependencies. Each execution step corresponds to a task which is the smallest unit of execution that can be performed on a computation resources (ProActive Node). There are several types of tasks which caters different use cases.

3.1.1. Task Types

ProActive Scheduler currently supports three main types of tasks:

-

Native Task, a command with eventual parameters to be executed

-

Script Task, a script written in Groovy, Ruby, Python and other languages supported by the JSR-223

-

Java Task, a task written in Java extending the Scheduler API

For instance, a Script Task can be used to execute an inline script definition or a script file as an execution step whereas a Native Task can be used to execute a native executable file.

| We recommend to use script tasks that are more flexible rather than Java tasks. You can easily integrate with any Java code from a Groovy script task. |

3.1.2. Additional Scripts

In addition to the main definition of a Task, scripts can also be used to provide additional actions. The following actions are supported:

-

one or more selection scripts to control the Task resource (ProActive Node) selection.

-

a fork environment script script to control the Task execution environment (a separate Java Virtual Machine or Docker container).

-

a pre script executed immediately before the main task definition (and inside the forked environment).

-

a post script executed immediately after the main task definition if and only if the task did not trigger any error (also run inside the forked environment).

-

a control flow script executed immediately after the post script (if present) or main task definition to control flow behavior such as branch, loop or replicate (also run inside the forked environment).

-

finally, a clean script executed after the task is finished, whether the task succeeded or not, and directly on the ProActive Node which hosted the task.

3.1.3. Task Dependencies

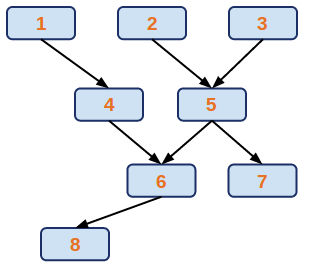

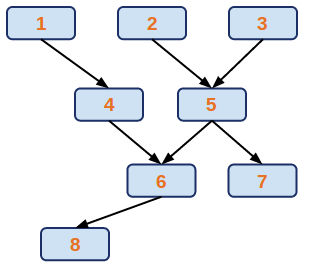

A workflow in ProActive Workflows & Scheduling can be seen as an oriented graph of Tasks:

In this tasks graph, we see that task 4 is preceded by task 1, this means that the ProActive Scheduler will wait for the end of task 1 execution before launching task 4. In a more concrete way, task 1 could be the calculation of a part of the problem to solve, and task 4 takes the result provided by task 1 and compute another step of the calculation. We introduce here the concept of passing data between tasks. This relation is called a Dependency, and we say that task 4 depends on task 1.

We see that task 1, 2 and 3 are not linked, so these three tasks can be executed in parallel, because they are independent from each other.

The task graph is defined by the user at the time of workflow creation, but can also be modified dynamically during the job execution by control flow actions such as Replicate.

A finished job contains the results and logs of each task. Upon failure, a task can be restarted automatically or cancelled.

3.2. Native Tasks

A Native Task is a ProActive Workflow task which main execution is defined as a command to run.

Native tasks are the simplest type of Task, where a user provides a command with a list of parameters.

Using native tasks, one can easily reuse existing applications and embed them in a workflow.

Once the executable is wrapped as a task you can easily leverage some of the workflow constructs to run your executable commands in parallel.

You can find an example of such integration in this XML workflow or you can also build one yourself using the Workflow Studio.

Native application by nature can be tied to a given operating system so if you have heterogeneous nodes at your disposal, you might need to select a suitable node to run your native task. This can be achieved using selection script.

| Native Tasks are not the only possibility available to run executables or commands, this can also be achieved using shell language script tasks. |

3.3. Script Tasks

A Script Task is a ProActive Workflow task which main execution is defined as a script.

ProActive Workflows supports tasks in many scripting languages. The currently supported dynamic languages or backends are Groovy, Jython, Python, JRuby, Javascript, Scala, Powershell, R, Perl, Bash, Any Unix Shell, Windows CMD, Docker File, Docker Compose and Kubernetes.

Though Docker File, Docker Compose and Kubernetes are not really scripting language but rather description languages, ProActive tasks can interpret Docker files, Docker Compose or Kubernetes yaml files to start containers or pods.

|

As seen in the list above, Script Tasks can be used to run native operating system commands by providing a script written in bash, ksh, windows cmd, Powershell, etc…

Simply set the language attribute to bash, shell, or cmd and type your command(s) in the workflow.

In this sense, it can replace in most cases a Native Task.

| You can easily embed small scripts in your workflow. The nice thing is that the workflow will be self-contained and will not require to compile your code before executing. However, we recommend that you keep these scripts small to keep the workflow easy to understand. |

You can find an example of a script task in this XML workflow or you can also build one yourself using the Workflow Studio. The quickstart tutorial relies on script tasks and is a nice introduction to writing workflows.

Scripts can also be used to decorate any kind of task (Script, Java or Native) with specific actions, as described in the additional scripts section.

It is thus possible to combine various scripting languages in a single Task. For example a pre script could be written in bash to transfer some files from a remote file system, while the main script will be written in python to process those files.

Finally, a Script Task may or may not return a result materialized by the result script binding.

3.3.1. Script Bindings

A Script Task or additional scripts can use automatically-defined script bindings. These bindings allow to associate the script inside its workflow context, for example, the ability to know the current workflow variables as submitted by a user, the workflow job id as run inside the ProActive Scheduler, etc.

Bindings allow as well to pass information between various scripts inside the same task or across different tasks.

A script binding can be either:

-

Defined automatically by the ProActive Scheduler before the script starts its execution. In this case, we call it also a provided or input script binding.

-

Needed to be defined by the script during its execution to provide meaningful results. In this case, we call it also a result or output script binding.

Below is an example of Script Task definition which uses both types of bindings:

jobId = variables.get("PA_JOB_ID")

result = "The Id of the current job is: " + jobIdAs we can see, the variables binding in provided automatically by the ProActive Scheduler before the script execution and it is used to compute a result binding as task output.

Bindings are stored internally inside the ProActive Scheduler as Java Objects. Accordingly, a conversion may be performed when targetting (or when reading from) other languages.

For example, Generic Information or Workflow Variables are stored as Java objects implementing the Map interface.

When creating the variables or genericInformation bindings prior to executing a Groovy, JRuby, Jython or Python script, the ProActive Scheduler will convert it to various types accepted by the target language.

In those languages, printing the type of the genericInformation binding will show:

Groovy: class com.google.common.collect.RegularImmutableBiMap JRuby: Java::ComGoogleCommonCollect::RegularImmutableBiMap Jython: <type 'com.google.common.collect.RegularImmutableBiMap'> Python: <class 'py4j.java_collections.JavaMap'>

We see here that Groovy, JRuby and Jython did not perform any conversion, whereas Python (CPython) did. This behavior is expected as CPython script execution is run as a standalone python process and a custom type conversion occurs while the formers are run directly inside the task Java Virtual Machine will full Java type compatibility.

Depending on the script type (task script, selection script, etc…), the script may need to define an output binding to return some information to the scheduler.

Below are some examples of output bindings for various kind of scripts:

-

resultandresultMetadatafor a Script Task main execution script. -

selectedfor a selection script. -

loopfor a Loop construct flow script. -

runsfor a Replicate construct flow script.

The complete list of script bindings (both input and output) is available in the Script Bindings Reference section.

Below are descriptions of some specific scripting language support, which can be used in Script Tasks main execution script or in any Task additional scripts.

3.4. Java Tasks

A workflow can execute Java classes thanks to Java Tasks. In terms of XML definition, a Java Task consists of a fully-qualified class name along with parameters:

<task name="Java_Task">

<javaExecutable class="org.ow2.proactive.scheduler.examples.WaitAndPrint">

<parameters>

<parameter name="sleepTime" value="20"/>

<parameter name="number" value="2"/>

</parameters>

</javaExecutable>

</task>The provided class must extend the JavaExecutable abstract class and implement the execute method.

Any parameter must be defined as public attributes. For example, the above WaitAndPrint class contains the following attributes definitions:

/** Sleeping time before displaying. */

public int sleepTime;

/** Parameter number. */

public int number;A parameter conversion is performed automatically by the JavaExecutable super class. If this automatic conversion is not suitable, it is possible to override the init method.

Finally, several utility methods are provided by JavaExecutable and should be used inside execute. A good example is getOut which allows writing some textual output to the workflow task or getLocalSpace which allows access to the task execution directory.

The complete code for the WaitAndPrint class is available below:

public class WaitAndPrint extends JavaExecutable {

/** Sleeping time before displaying. */

public int sleepTime;

/** Parameter number. */

public int number;

/**

* @see JavaExecutable#execute(TaskResult[])

*/

@Override

public Serializable execute(TaskResult... results) throws Throwable {

String message = null;

try {

getErr().println("Task " + number + " : Test STDERR");

getOut().println("Task " + number + " : Test STDOUT");

message = "Task " + number;

int st = 0;

while (st < sleepTime) {

Thread.sleep(1000);

try {

setProgress((st++) * 100 / sleepTime);

} catch (IllegalArgumentException iae) {

setProgress(100);

}

}

} catch (Exception e) {

message = "crashed";

e.printStackTrace(getErr());

}

getOut().println("Terminate task number " + number);

return ("No." + this.number + " hi from " + message + "\t slept for " + sleepTime + " Seconds");

}

}3.5. Run a workflow

To run a workflow, the user submits it to the ProActive Scheduler. It will be possible to choose the values of all Job Variables when submitting the workflow (see section Job Variables). A verification is performed to ensure the well-formedness of the workflow. A verification will also be performed to ensure that all variables are valid according to their model definition (see section Variable Model). Next, a job is created and inserted into the pending queue and waits until free resources become available. Once the required resources are provided by the ProActive Resource Manager, the job is started. Finally, once the job is finished, it goes to the queue of finished jobs where its result can be retrieved.

You can submit a workflow to the Scheduler using the Workflow Studio, the Scheduler Web Interface or command line tools. For advanced users we also expose REST and JAVA APIs.

| During the submission, you will be able to edit Workflow variables, so you can effectively use them to parameterize workflow execution and use workflows as templates. |

3.5.1. Job & Task states

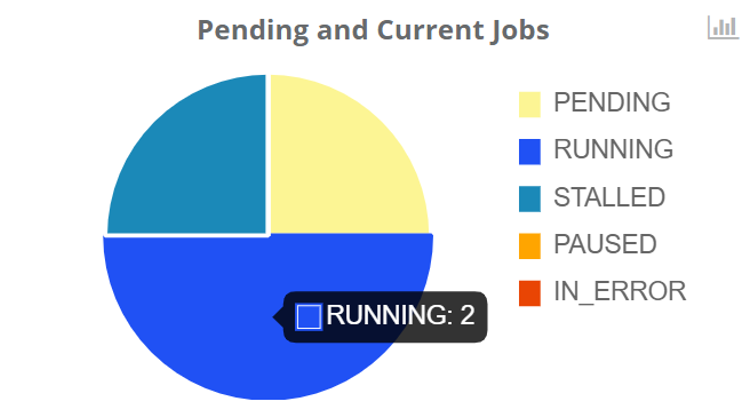

During their execution, jobs go through different states. The screenshot below shows Pending and Current Jobs states summary as seen in Workflow Automation portal.

| State | Name | Description |

|---|---|---|

|

Pending |

The job is waiting to be scheduled. None of its tasks have been Running so far. |

|

Running |

The job is running. At least one of its task has been scheduled. |

|

Stalled |

The job has been launched but no task is currently running. |

|

Paused |

The job is paused waiting for user to resume it. |

|

In-Error |

The job has one or more In-Error tasks that are suspended along with their dependencies. User intervention is required to fix the causing issues and restart the In-Error tasks to resume the job. |

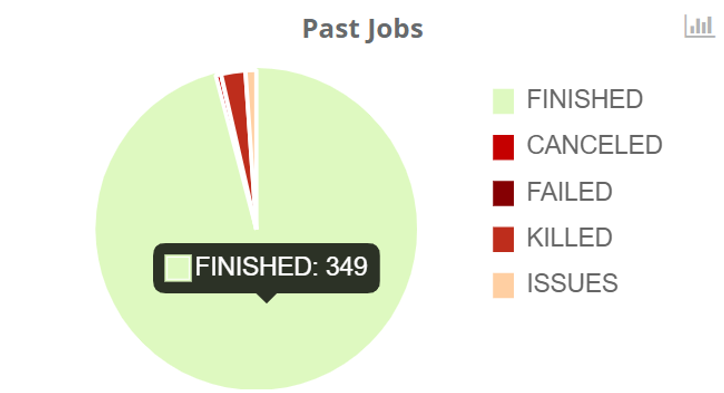

The screenshot below shows Past Jobs states summary as seen in Workflow Automation portal.

| State | Name | Description |

|---|---|---|

|

Finished |

The job is finished. Tasks are finished or faulty. |

|

Cancelled |

The job has been canceled because of an exception. This status is used when an exception is thrown by the user code of a task and when the user has asked to cancel the job on exception. |

|

Failed |

The job has failed. One or more tasks have failed (due to resources failure). There is no more executionOnFailure left for a task. |

|

Killed |

The job has been killed by the user. |

Similarly to jobs, during their execution, tasks go through different states.

| State | Name | Description |

|---|---|---|

|

Aborted |

The task has been aborted by an exception on an other task while the task is running. (job is cancelOnError=true). Can be also in this status if the job is killed while the concerned task was running. |

|

Resource down |

The task is failed (only if maximum number of execution has been reached and the node on which it was started is down). |

|

Faulty |

The task has finished execution with error code (!=0) or exception. |

|

Finished |

The task has finished execution. |

|

In-Error |

The task is suspended after first error, if the user has asked to suspend it. The task is waiting for a manual restart action. |

|

Could not restart |

The task could not be restarted. It means that the task could not be restarted after an error during the previous execution. |

|

Could not start |

The task could not be started. It means that the task could not be started due to dependencies failure. |

|

Paused |

The task is paused. |

|

Pending |

The task is in the scheduler pending queue. |

|

Running |

The task is executing. |

|

Skipped |

The task was not executed: it was the non-selected branch of an IF/ELSE control flow action. |

|

Submitted |

The task has just been submitted by the user. |

|

Faulty… |

The task is waiting for restart after an error (i.e. native code != 0 or exception, and maximum number of execution is not reached). |

|

Failed… |

The task is waiting for restart after a failure (i.e. node down). |

3.5.2. Job Priority

A job is assigned a default priority of NORMAL but the user can increase or decrease the priority once the

job has been submitted. When they are scheduled, jobs with the highest priory are executed first.

The following values are available:

-

IDLE -

LOWEST -

LOW -

NORMAL -

HIGHcan only be set by an administrator -

HIGHESTcan only be set by an administrator

3.6. Retrieve logs

It is possible to retrieve multiple logs from a job, these logs can either be:

-

The standard output/error logs associated with a job.

-

The standard output/error logs associated with a task.

-

The scheduler server logs associated with a job.

-

The scheduler server logs associated with a task.

Unless your account belongs to an administrator group, you can only see the logs of a job that you own.

3.6.1. Retrieve logs from the portal

-

Job standard output/error logs:

To view the standard output or error logs associated with a job, select a job from the job list and then on the Output tab in the bottom right panel.

Click on Streaming Output checkbox to auto-fetch logs for running tasks of the entire Job. The logs panel will be updated as soon as new log lines will be printed by this job.

You cannot select a specific Task in the streaming mode. If you activate streaming while some Tasks are already finished, you will get the logs of those Tasks as well.

Click on Finished Tasks Output button to retrieve logs for already finished tasks. For all the finished Tasks within the Job, or for the selected Task.

The command does work when Job is still in Running state, as well as when Job is Finished.

Logs are limited to 1024 lines. Should your job logs be longer, you can select the Full logs (download) option from the drop down list. -

Task standard output/error logs:

To view the standard output or error logs associated with a task, select a job from the job list and a task from the task list.

Then in the Output tab, choose Selected task from the drop down list.

Once the task is terminated, you will be able to click on the Finished Tasks Output button to see the standard output or error associated with the task.

It is not possible to view the streaming logs of single task, only the job streaming logs are available. -

Job server logs:

Whether a job is running or finished, you can access the associated server logs by selecting a job, opening the Server Logs tab in the bottom-right panel and then clicking on Fetch logs.

Server logs contains debugging information, such as the job definition, output of selection or cleaning scripts, etc. -

Task server logs:

In the Server Logs tab, you can choose Selected task to view the server logs associated with a single task.

3.6.2. Retrieve logs from the command line

The chapter command line tools explains how to use the command line interface. Once connected, you can retrieve the various logs using the following commands. Server logs cannot be accessed from the command line.

-

Job standard output/error logs: joboutput(jobid)

> joboutput(1955) [1955t0@precision;14:10:57] [2016-10-27 14:10:057 precision] HelloWorld [1955t1@precision;14:10:56] [2016-10-27 14:10:056 precision] HelloWorld [1955t2@precision;14:10:56] [2016-10-27 14:10:056 precision] HelloWorld [1955t3@precision;14:11:06] [2016-10-27 14:11:006 precision] HelloWorld [1955t4@precision;14:11:05] [2016-10-27 14:11:005 precision] HelloWorld

-

Task standard output/error logs: taskoutput(jobid, taskname)

> taskoutput(1955,'0_0') [1955t0@precision;14:10:57] [2016-10-27 14:10:057 precision] HelloWorld

-

Streaming job standard output/error logs: livelog(jobid)

> livelog(2002) Displaying live log for job 2002. Press 'q' to stop. > Displaying live log for job 2002. Press 'q' to stop. [2002t2@precision;15:57:13] [ABC, DEF]

3.7. Retrieve results

Once a job or a task is terminated, it is possible to get its result. Unless you belong to the administrator group, you can only get the result of the job that you own. Results can be retrieved using the Scheduler Web Interface or the command line tools.

| When running native application, the task result will be the exit code of the application. Results usually make more sense when using script or Java tasks. |

3.7.1. Retrieve results from the portal

In the scheduler portal, select a job, then select a task from the job’s task list. Click on Preview tab in the bottom-right panel.

In this tab, you will see two buttons:

-

Open in browser: when clicking on this button, the result will be displayed in a new browser tab. By default, the result will be displayed in text format. If your result contains binary data, it is possible to specify a different display mode using <<_assigning_metadata_to_task_result,Result Metadata>.

If the task failed, when clicking on the Open in browser button, the task error will be displayed. -

Save as file: when clicking on this button, the result will be saved on disk in binary format. By default, the file name will be generated automatically using the job and task ids, without an extension. It is possible to customize this behavior and specify in the task a file name, or a file extension using Result Metadata.

The following example gets one png image and add the metadata to help the browser display it and add a name when downloading.

file = new File(fileName)

result = file.getBytes()

resultMetadata.put("file.name", fileName)

resultMetadata.put("content.type", "image/png")

3.7.2. Retrieve results from the command line

The chapter command line tools explains how to use the command line interface. Once connected, you can retrieve the task or job results:

-

Result of a single task: taskresult(jobid, taskname)

> taskresult(2002, 'Merge')

task('Merge') result: true

-

Result of all tasks of a job: jobresult(jobid)

> jobresult(2002)

job('2002') result:

Merge : true

Process*1 : DEF

Process : ABC

Split : {0=abc, 1=def}

4. ProActive Studio

ProActive Workflow Studio is used to create and submit workflows graphically. The Studio allows to simply drag-and-drop various task constructs and draw their dependencies to form complex workflows. It also provides various flow control widgets such as conditional branch, loop, replicate etc to construct workflows with dynamic structures.

The studio usage is illustrated in the following example.

4.1. A simple example

The quickstart tutorial on try.activeeon.com shows how to build a simple workflow using script tasks.

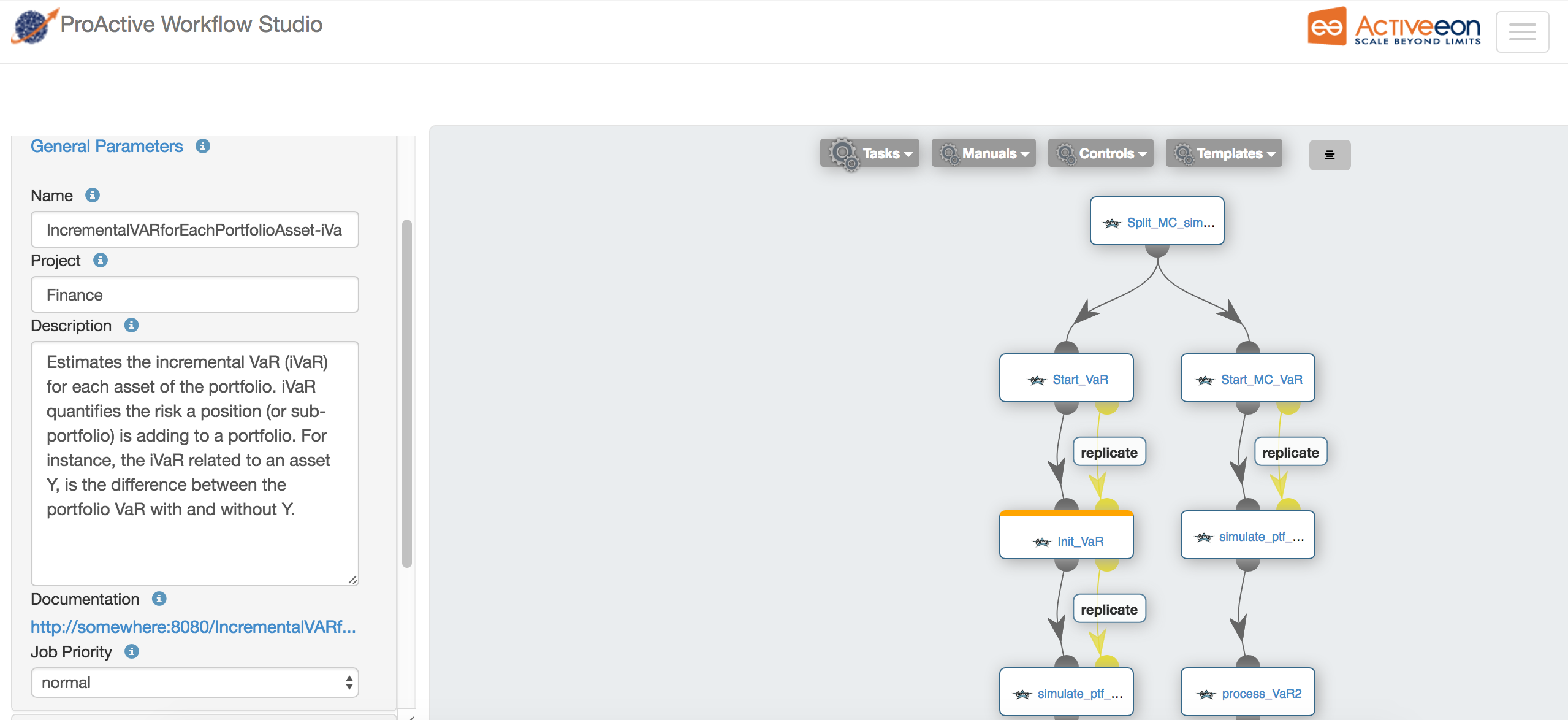

Below is an example of a workflow created with the Studio:

In the left part, are illustrated the General Parameters of the workflow with the following information:

-

Name: the name of the workflow. -

Project: the project name to which belongs the workflow. -

Description: the textual description of the workflow. -

Documentation: if the workflow has a Generic Information named "Documentation", then its URL value is displayed as a link. -

Job Priority: the priority assigned to the workflow. It is by default set toNORMAL, but can be increased or decreased once the job is submitted.

In the right part, we see the graph of dependent tasks composing the workflow. Each task can be selected to show the task specific attributes and defintions.

Finally, above the workflow graph, we see the various palettes which can be used to drag & drop sample task definitions or complex constructs.

The following chapters describe the three main type of tasks which can be defined inside ProActive Workflows.

4.2. Use the ProActive Catalog from the Studio

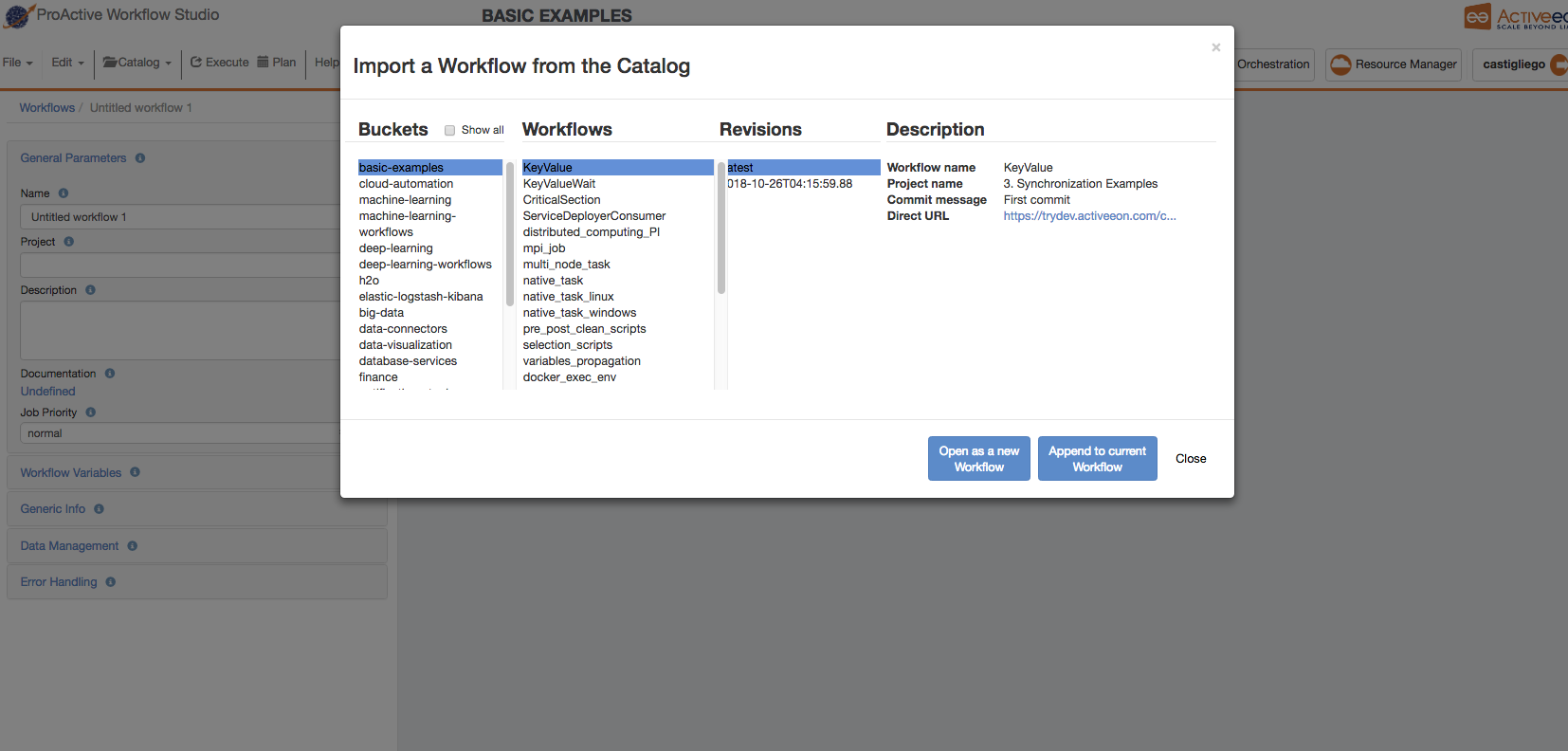

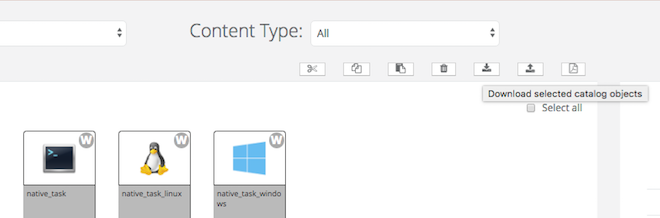

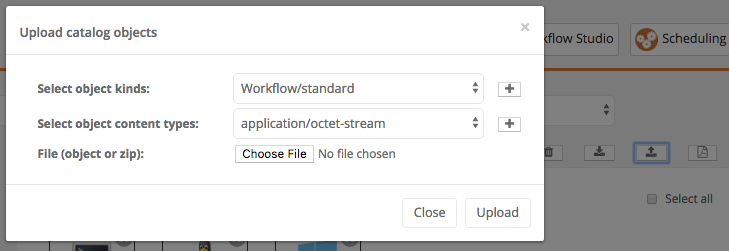

The GUI interaction with the Catalog can be done in two places: the Studio and Catalog Portal. The portals follow the concepts of the Catalog: Workflows are stored inside buckets, a workflow has some metadata and can have several revisions.

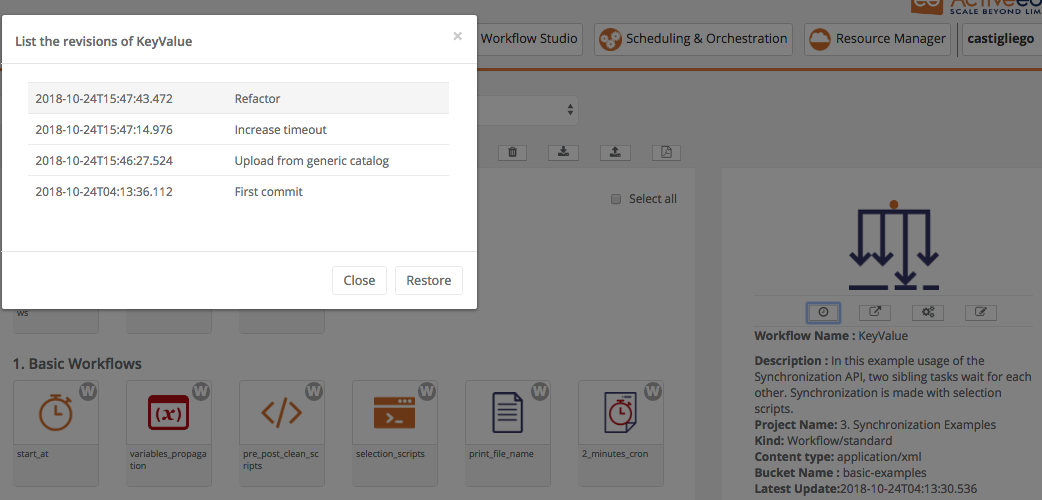

The Catalog view in the Studio or in the Catalog Portal allows to browse all the workflows in the Catalog grouped by buckets and to list all the revisions of a workflow along with their commit message.

Inside the Studio, there is a Catalog menu from which a user can directly interact with the Catalog to import or publish Workflows.

Additionally, the Palette of the Studio lists the user’s favourite buckets to allow easy import of workflows from the Catalog with a simple Drag & Drop.

4.2.1. Publish current Workflow to the Catalog

Workflows created in the studio can be saved inside a bucket in the Catalog by using the Publish current Workflow to the Catalog action.

When saving a Workflow in the Catalog, users can add a specific commit message.

If a Workflow with the same name already exists in the specified bucket, a new revision of the Workflow is created.

We recommend to always specify commit messages at any commit for an easier differentiation between stored versions.

4.2.2. Get a Workflow from the Catalog

When the Workflow is selected from Catalog you have two options:

-

Open as a new Workflow: Open the Workflow from Catalog as a new Workflow in Studio. -

Append to current Workflow: Append the selected Workflow from the Catalog to the Workflow already opened inside the Studio.

4.2.3. Add Bucket Menu to the Palette

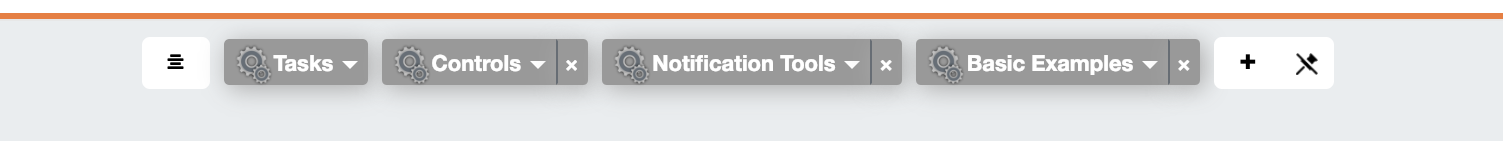

The Palette makes it easier to use workflows stored in a specific Bucket of the Catalog as templates when designing a Workflow.

To add a bucket from the Catalog as a dropdown menu in the Palette: Click on View → Add Bucket Menu to the Palette or you press the ![]() button in the palette.

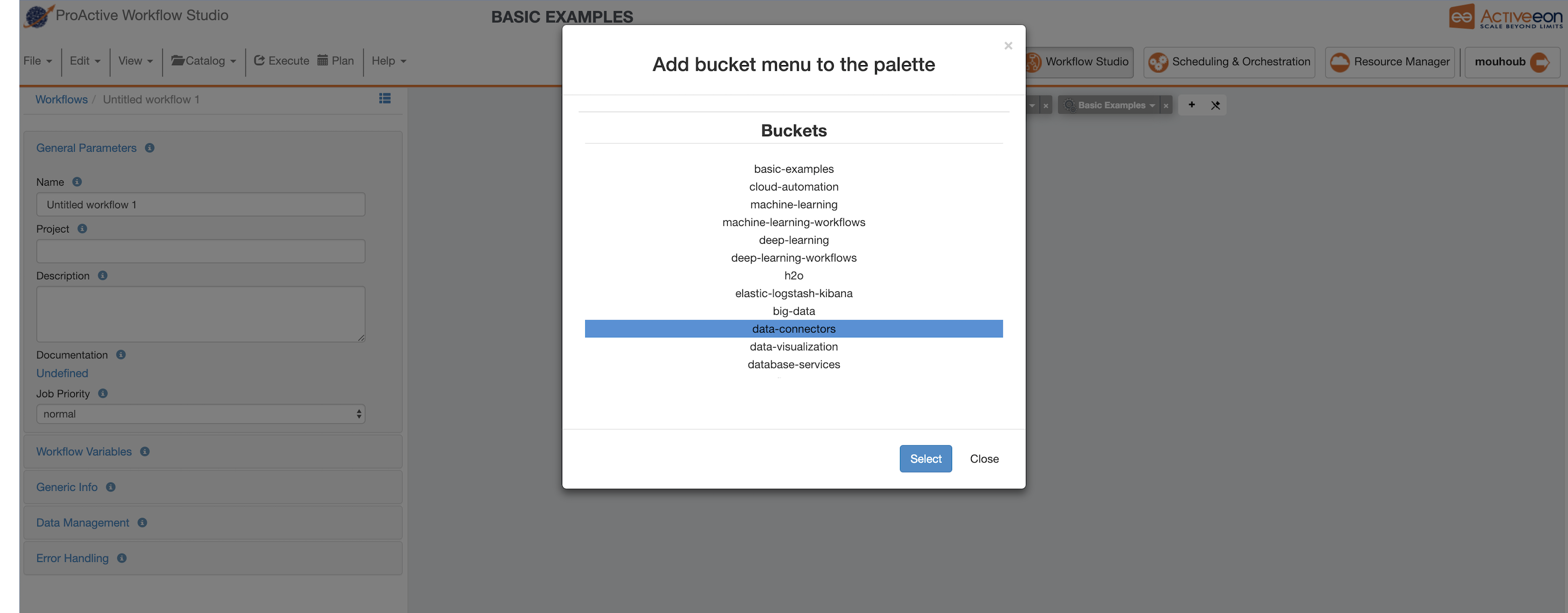

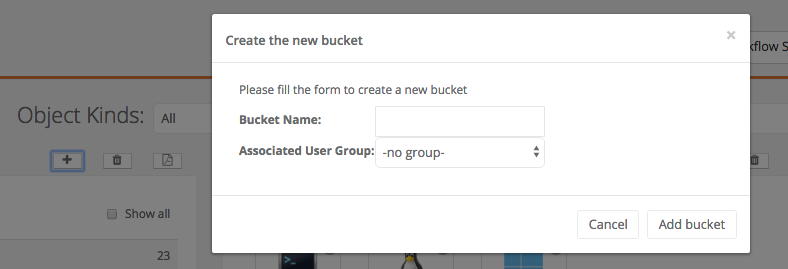

A window will show up to ask you which bucket you want to add to the Palette as in the image below.

button in the palette.

A window will show up to ask you which bucket you want to add to the Palette as in the image below.

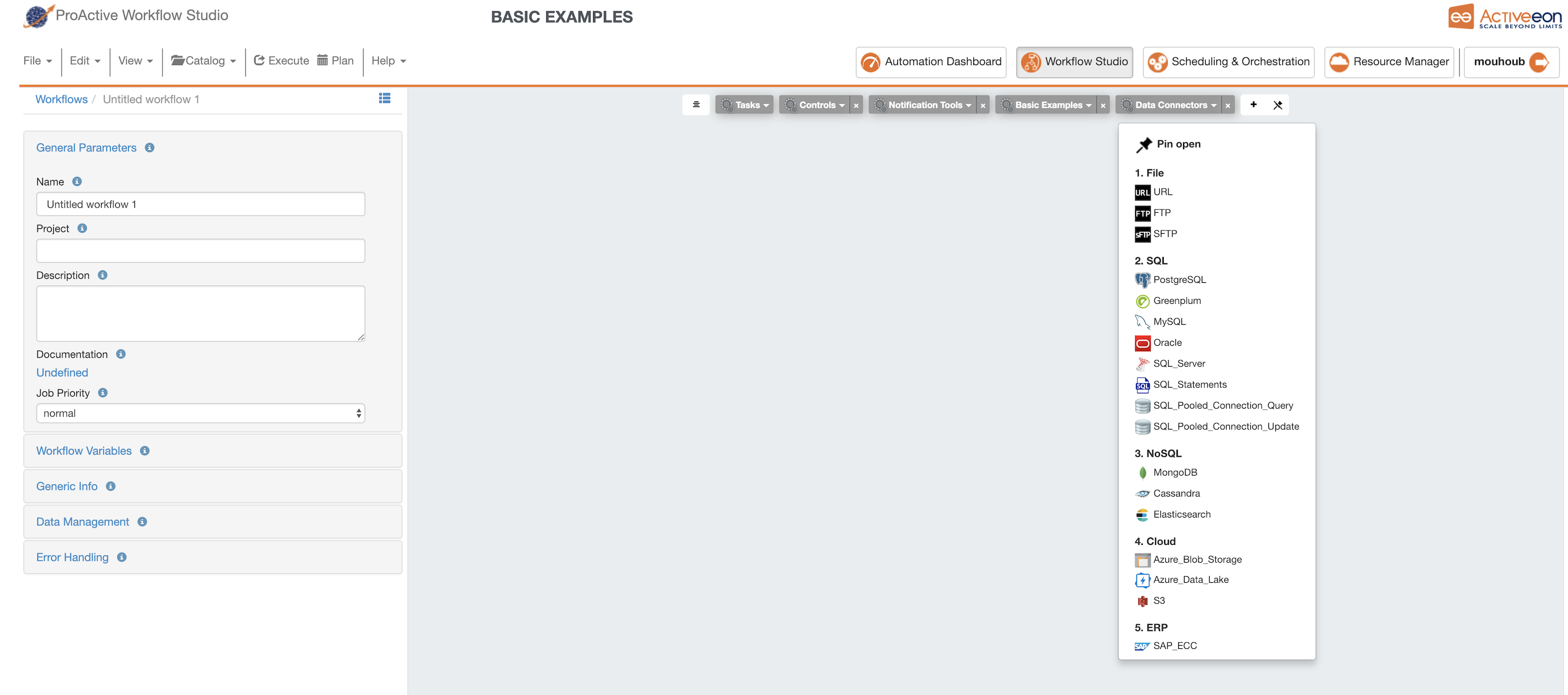

The selected Data Connectors Bucket now appears as a menu in the Studio. See the image below.

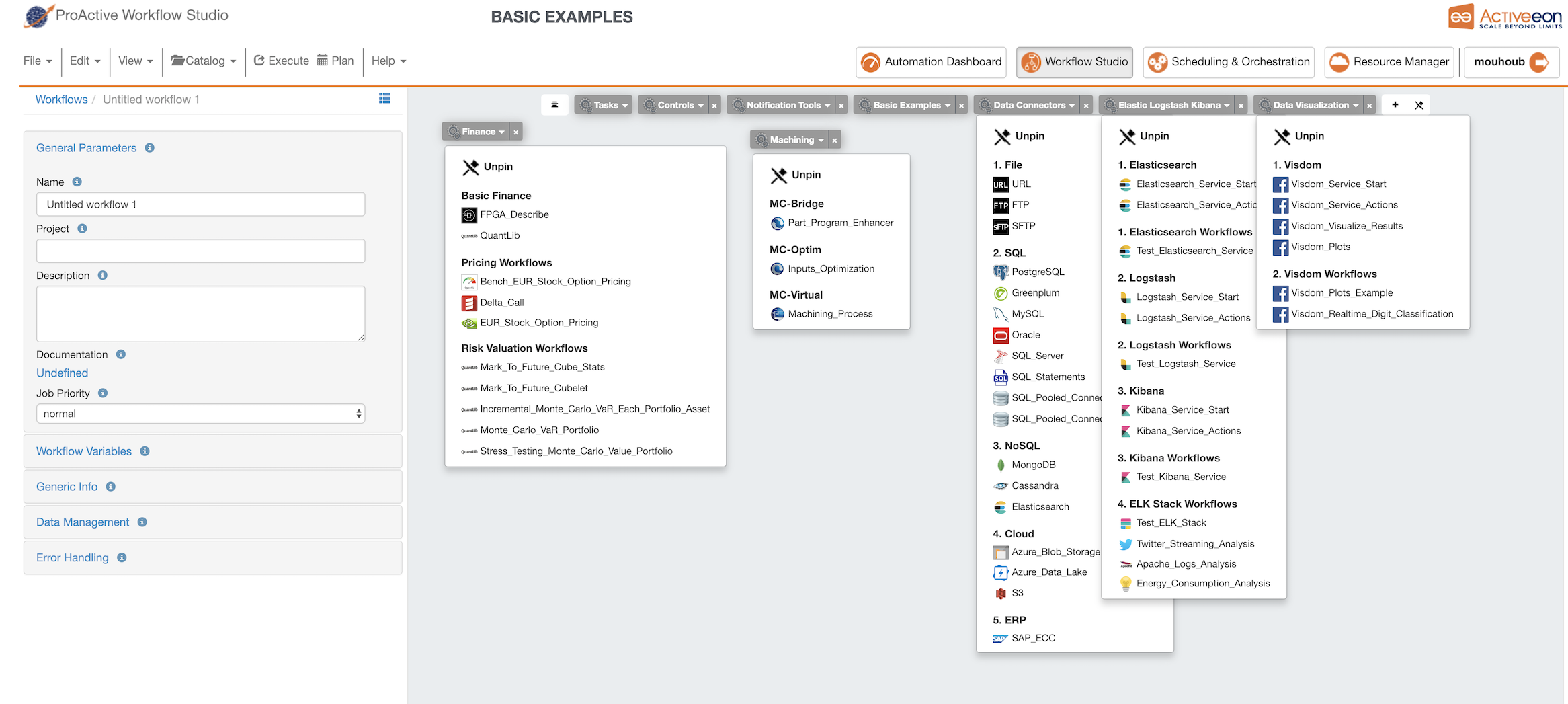

You can repeat the operation as many times as you wish in order to add other specific Menus you might need for your workflow design. See the image below.

4.2.4. Change Palette Preset

The Studio offers the possibility to set a Preset for the Palette. This makes it easier to load the Palette with a predefined set of default buckets.

Presets are generally arranged by theme (Basic Examples, Machine Learning, Deep Learning, Big Data, etc.)

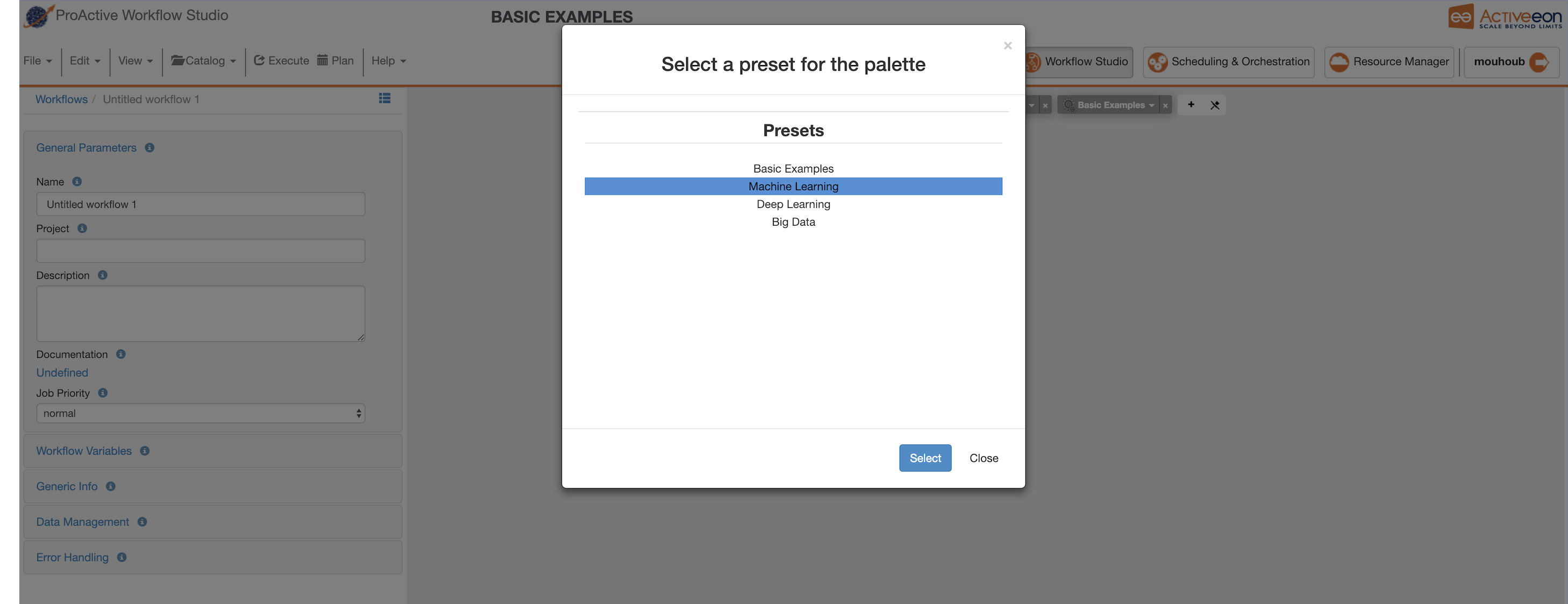

The Palette’s preset can be changed by clicking View → Change Palette Preset. This will show a window with the current list of presets as in the image below.

After changing the preset, its name appears at the top of the Studio and all its buckets are added to the Palette.

5. Workflow concepts

Workflows comes with constructs that help you distribute your computation. The tutorial Advanced workflows is a nice introduction to workflows with ProActive.

The following constructs are available:

-

Dependency

-

Replicate

-

Branch

-

Loop

| Use the Workflow Studio to create complex workflows, it is much easier than writing XML! |

5.1. Dependency

Dependencies can be set between tasks in a TaskFlow job. It provides a way to execute your tasks in a specified order, but also to forward result of an ancestor task to its children as parameter. Dependency between tasks is then both a temporal dependency and a data dependency.

Dependencies between tasks can be added either in ProActive Workflow Studio or simply in workflow XML as shown below:

<taskFlow>

<task name="task1">

<scriptExecutable>

<script>

<code language="groovy">

println "Executed first"

</code>

</script>

</scriptExecutable>

</task>

<task name="task2">

<depends>

<task ref="task1"/>

</depends>

<scriptExecutable>

<script>

<code language="groovy">

println "Now it's my turn"

</code>

</script>

</scriptExecutable>

</task>

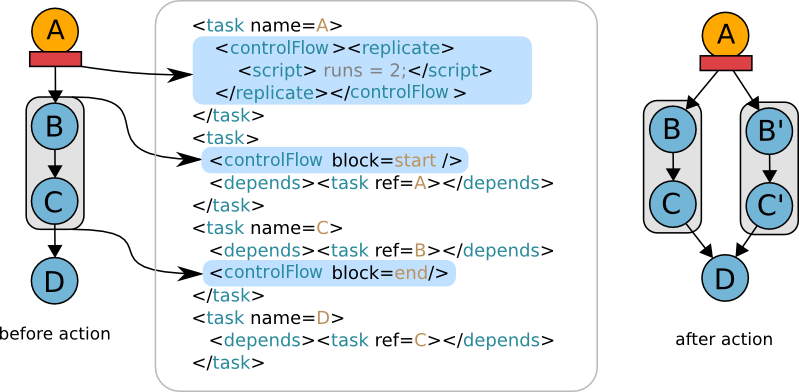

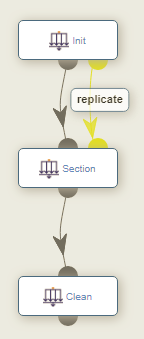

</taskFlow>5.2. Replicate

The replication allows the execution of multiple tasks in parallel when only one task is defined and the number of tasks to run could change.

-

The target is the direct child of the task initiator.

-

The initiator can have multiple children; each child is replicated.

-

If the target is a start block, the whole block is replicated.

-

The target must have the initiator as only dependency: the action is performed when the initiator task terminates. If the target has an other pending task as dependency, the behaviour cannot be specified.

-

There should always be a merge task after the target of a replicate: if the target is not a start block, it should have at least one child, if the target is a start block, the corresponding end block should have at least one child.

-

The last task of a replicated task block (or the replicated task if there is no block) cannot perform a branching or replicate action.

-

The target of a replicate action can not be tagged as end block.

-

The current replication index (from to 0 to

runs) can be accessed via thePA_TASK_REPLICATIONvariable.

| If you are familiar with programming, you can see the replication as forking tasks. |

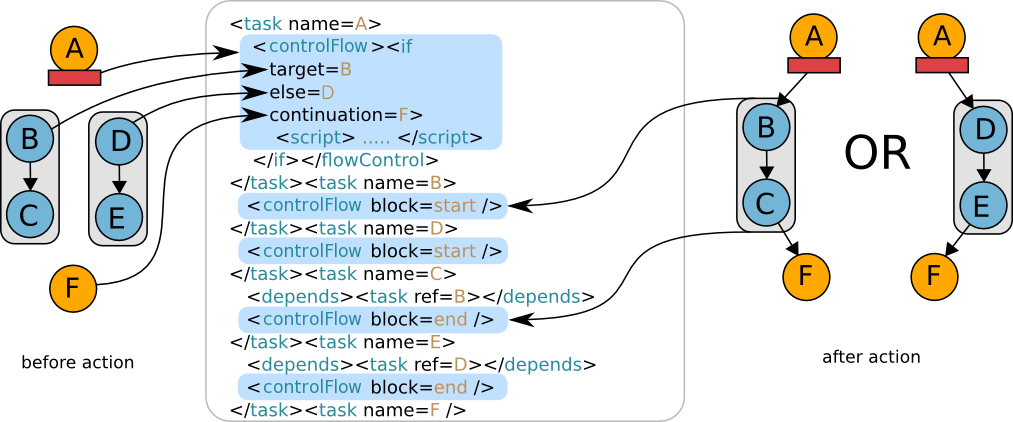

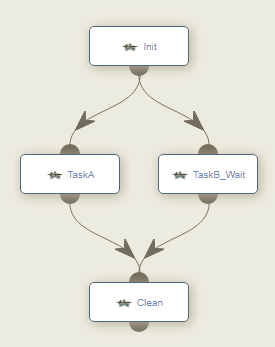

5.3. Branch

The branch construct provides the ability to choose between two alternative task flows, with the possibility to merge back to a common flow.

-

There is no explicit dependency between the initiator and the if/else targets. These are optional links (ie. A → B or E → F) defined in the if task.

-

The if and else flows can be merged by a continuation task referenced in the if task, playing the role of an endif construct. After the branching task, the flow will either be that of the if or the else task, but it will be continued by the continuation task.

-

If and else targets are executed exclusively. The initiator however can be the dependency of other tasks, which will be executed normally along the if or the else target.

-

A task block can be defined across if, else and continuation links, and not just plain dependencies (i.e. with A as start and F as end).

-

If using no continuation task, the if and else targets, along with their children, must be strictly distinct.

-

If using a continuation task, the if and else targets must be strictly distinct and valid task blocks.

-

if, else and continuation tasks (B, D and F) cannot have an explicit dependency.

-

if, else and continuation tasks cannot be entry points for the job, they must be triggered by the if control flow action.

-

A task can be target of only one if or else action. A continuation task can not merge two different if actions.

| If you are familiar with programming, you can see the branch as a if/then/else. |

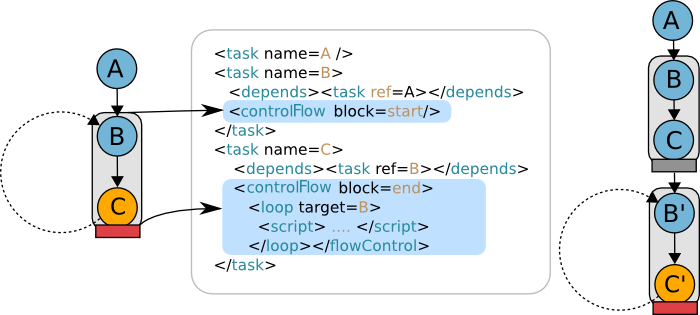

5.4. Loop

The loop provides the ability to repeat a set of tasks.

-

The target of a loop action must be a parent of the initiator following the dependency chain; this action goes back to a previously executed task.

-

Every task is executed at least once; loop operates in a do…while fashion.

-

The target of a loop should have only one explicit dependency. It will have different parameters (dependencies) depending if it is executed for the first time or not. The cardinality should stay the same.

-

The loop scope should be a task block: the target is a start block task, and the initiator its related end block task.

-

The current iteration index (from 0 to n until

loopis false) can be accessed via thePA_TASK_ITERATIONvariable.

| If you are familiar with programming, you can see the loop as a do/while. |

5.5. Task Blocks

Workflows often relies on task blocks. Task blocks are defined by pairs of start and end tags.

-

Each task of the flow can be tagged either start or end

-

Tags can be nested

-

Each start tag needs to match a distinct end tag

Task blocks are very similar to the parenthesis of most programming languages: anonymous and nested start/end tags. The only difference is that a parenthesis is a syntactical information, whereas task blocks are semantic.

The role of task blocks is to restrain the expressiveness of the system so that a workflow can be statically checked and validated. A treatment that can be looped or iterated will be isolated in a well-defined task block.

-

A loop flow action only applies on a task block: the initiator of the loop must be the end of the block, and the target must be the beginning.

-

When using a continuation task in an if flow action, the if and else branches must be task blocks.

-

If the child of a replicate task is a task block, the whole block will be replicated and not only the child of the initiator.

5.6. Variables

Variables can come at hand while developing ProActive applications. Propagate data between tasks, customize jobs, and store useful debug information are a few examples of how variables can ease development. ProActive has basically two groups of variables:

-

Workflow variables, declared on the XML job definition.

-

Dynamic Variables, system variables or variables created at task runtime.

5.6.1. Workflow variables

Workflow variables are declared in the XML job definition. Within the definition we can have variables in several levels, at job level the variables are visible and shared by all tasks. At task level, variables are visible only within the task execution context.

Job variables

In a workflow you can define job variables that are shared and visible by all tasks.

A job variable is defined using the following attributes:

-

name: the name of the variable

-

value: the value of the variable

-

model: the type of the value (optional). See section Variable Model

Variables are very useful to use workflows as templates where only a few parameters change for each execution. Upon submission you can define variable values in several ways from CLI, using the ProActive Workflow Studio, directly editing the XML job definition, or even using REST API.

The following example shows how to define a job variable in the XML:

<job ... >

<variables>

<variable name="variable" value="value" model=""/>

</variables>

...

</job>Job variables can be referenced anywhere in the workflow, including other job variables.

The syntax for referencing a variable is the pattern ${variable_name} (case-sensitive), for example:

<nativeExecutable>

<staticCommand value="${variable}" />

</nativeExecutable>Task variables

Similarly to job variables, Task variables can be defined within task scope in the job XML definition. Task variables scope is strictly limited to the task definition.

A task variable is defined using the following attributes:

-

name: the name of the variable

-

value: the value of the variable

-

inherited: asserts when the content of this variable is propagated from a previous task. If true, the value defined in the task variable will only be used if no variable with the same name is propagated.

-

model: the type of the value (optional). See section Variable Model

When the inherited parameters is true the variable value is propagated by a previous task (See Script variables for more details). Value field can be left empty however it can also work as a default value in case a previous task fails to define the variable.

For example:

<task ... >

<variables>

<variable name="variable" value="value" inherited="false" model=""/>

</variables>

...

</task>Task variables can be used similarly to job variables using the pattern ${variable_name} but only inside the task where the variable is defined.

Task variables override job variables, this means that if a job variable and a task variable are defined with the same name, the task variable value will be used inside the task, and the job variable value will be used elsewhere in the job.

Variable Model

Job and Task variables can define a model attribute which let the workflow designer control the variable value syntax.

A workflow designer can for example decide that the variable NUMBER_OF_ENTRIES is expected to be convertible to an Integer ranged between 0 and 20.

The workflow designer will then provide a default value to the variable NUMBER_OF_ENTRIES, for example "10". This workflow is valid as "10" can be converted to an Integer and is between 0 and 20.

When submitting the workflow, it will be possible to choose a new value for the variable NUMBER_OF_ENTRIES. If the user submitting the workflow chooses for example the value -5, the validation will fail and an error message will appear.

Available Models

The following list describes the various model syntaxes available:

-

PA:BOOLEAN : variable is either "true", "false", "0" or "1".

-

PA:INTEGER , PA:INTEGER[min,max] : variable can be converted to java.lang.Integer, and eventually is contained in the range [min, max].

Examples: PA:INTEGER will accept "-5" but not "1.4", PA:INTEGER[0,20] will accept "12" but not "25". -

PA:LONG , PA:LONG[min,max] : same as above with java.lang.Long.

-

PA:FLOAT , PA:FLOAT[min,max] : same as above with java.lang.Float.

Examples: PA:FLOAT[-0.33,5.99] will accept "3.5" but not "6". -

PA:DOUBLE , PA:DOUBLE[min,max] : same as above with java.lang.Double.

-

PA:SHORT , PA:SHORT[min,max] : same as above with java.lang.Short.

-

PA:URL : variable can be converted to java.net.URL.

Examples: PA:URL will accept "http://mysite.com" but not "c:/Temp". -

PA:URI : variable can be converted to java.net.URI.

Examples: PA:URI will accept "/tmp/file" but not "c:\a^~to" due to invalid characters. -

PA:DATETIME(format) , PA:DATETIME(format)[min,max] : variable can be converted to a java.util.Date using the format specified (see the format definition syntax in the SimpleDateFormat class).

A range can also be used in the PA:DATETIME model. In that case, each bound of the range must match the date format used.Examples:

PA:DATETIME(yyyy-MM-dd) will accept "2014-12-01" but not "2014".

PA:DATETIME(yyyy-MM-dd)[2014-01-01, 2015-01-01] will accept "2014-12-01" but not "2015-03-01".

PA:DATETIME(yyyy-MM-dd)[2014, 2015] will result in an error during the workflow definition as the range bounds [2014, 2015] are not using the format yyyy-MM-dd. -

PA:LIST(item1,item2,…) : variable must be one of the values defined in the list.

Examples: PA:LIST(a,b,c) will accept "a", "b", "c" but no other value. -

PA:REGEXP(pattern) : variable syntax must match the regular expression defined in the pattern. The regular expression syntax is described in class Pattern.

Examples: PA:REGEXP([a-z]+) will accept "abc", "foo", but not "Foo". -

PA:MODEL_FROM_URL(url) : variable syntax must match the model fetched from the given URL. This can be used for example when the model needs to represent a list of elements which may evolve over time and is updated inside a file. Such as a list of machines in an infrastructure, a list of users, etc.

Examples: PA:MODEL_FROM_URL(file:///srv/machines_list_model.txt), if the file machines_list_model.txt contains PA:LIST(host1,host2), will accept only "host1" and "host2", but may accept other values as the machines_list_model file changes. -

PA:CRON : variable syntax must be a valid cron expression as defined in the cron4j manual.

Examples: PA:CRON will accept "5 * * * *" but not "* * * *" (missing minutes sub-pattern). -

PA:SPEL(SpEL expression) : variable syntax will be evaluated by a SpEL expression. Refer to next paragraph.

-

PA:CATALOG_OBJECT : variable syntax must be a valid expression that matches the following pattern:

bucketName/objectName[/revision]. Note that the revision sub-pattern is a hash code number represented by 13 digit.

Examples: PA:CATALOG_OBJECT will accept "bucket-example/object-example/1539310165443" and "bucket-example/object-example" but not "bucket-example/object-example/153931016" (invalid revision number) and "bucket-example/" (missing object name). -

PA:JSON : variable syntax must be a valid JSON expression as defined in JSON doc.

Examples: PA:JSON will accept {"name": "John", "city":"New York"} and empty values like {} or [{},{}], but not ["test" : 123] (Unexpected character ':') and {test : 123} (Unexpected character 't').

Spring Expression Language Model

The PA:SPEL(expr) model allows to define expressions able to validate a variable value or not. Additionally, this model can be used to validate multiple variable values or to dynamically update other variables.

The syntax of the SpEL expression is defined by the Spring Expression Language reference.

For security concerns, we apply a restriction on the authorized class types. Besides the commonly used data types (Boolean, String, Long, Double, etc.), we authorize the use of ImmutableSet, ImmutableMap, ImmutableList, Math, Date types, JSONParser and ObjectMapper for JSON type and DocumentBuilderFactory for XML type.

In order to interact with variables, the expression has access to the following properties:

-

#value: this property will contain the value of the current variable defined by the user.

-

variables['variable_name']: this property array contains all the variables of the same context (for example of the same task for a task variable).

The SpEL expression must return a boolean value, true if the value is correct, false otherwise, returning another type will raise an error.

-

Example of SpEL simple validations:

PA:SPEL(#value == 'abc') : will accept the value if it’s the 'abc' string

PA:SPEL(T(Integer).parseInt(#value) > 10) : will accept the value if it’s an integer greater than 10.Note that #value always contains a string and must be converted to other types if needed.

-

Example of SpEL multiple validations:

PA:SPEL(variables['var1'] + variables['var2'] == 'abcdef') : will be accepted if the string concatenation of variable var1 and var2 is 'abcdef'.

PA:SPEL(T(Integer).parseInt(variables['var1']) + T(Integer).parseInt(variables['var2']) < 100) : will be accepted if the sum of variables var1 and var2 are smaller than 100. -

Example of SpEL variable inference:

PA:SPEL( variables['var2'] == '' ? (variables['var2'] = variables['var1']) instanceof T(String) : true ) : if the variable var2 is empty, it will use the value of variable var1 instead.

-

Example of SpEL variable using ObjectMapper type:

PA:SPEL( (variables['var1'] = new org.codehaus.jackson.map.ObjectMapper().readTree('{"abc": "def"}').get('abc').getTextValue()) instanceof T(String) ): will assign the value 'def' to the variable var1.

-

Example of SpEL variable using DocumentBuilderFactory type:

PA:SPEL( (variables['var'] = T(javax.xml.parsers.DocumentBuilderFactory).newInstance().newDocumentBuilder().parse(new org.xml.sax.InputSource(new java.io.StringReader('<employee id="101"><name>toto</name><title>tata</title></employee>'))).getElementsByTagName('name').item(0).getTextContent()) instanceof T(String) ): will assign the value 'toto' to the variable var1.

Note that the SpEL expression must always return a boolean value, this is why in this last expression we use the

instanceofkeyword to not return a string value.

Generic Information

In addition to variables, another key/value structure can be accessed inside a script: Generic Information.

Generic information semantics differ from Job variables semantics in the following way:

-

Generic information can be accessed inside a script, but cannot be modified.

-

Generic information can be defined at job level or at task level. If the same generic information is defined at job level and at task level, the latter value takes precedence inside the task scope.

-

Generic information cannot be used directly inside the workflow with the syntax ${} (See Job variables).

-

Generic information are used in general internally by the scheduler, for example to provide information to the scheduling loop on how to handle the task. An example of such generic information is the START_AT info used inside Cron Tasks.

-

Generic information can use in their definition job/task variables patterns, pattern replacements can be done at execution time when using task generic information.

Example of generic information definition:

For example:

<task ... >

<genericInformation>

<info name="ginfo" value="${test}"/>

</genericInformation>

...

</task>Icon management variables

There are specific variables that are dedicated to icon management.

The icon for Job level is specified inside Workflow Generic Information -workflow.icon. The task related icon is described inside Task Generic Information task.icon.

Job and task icons should be related to their specific nature. These variables are used in the ProActive portals for correct visualization of workflows with icons.

5.6.2. Dynamic Variables

As opposed to Workflow variables, dynamic variables are created or manipulated directly when executing scripts,

through the use of the variables script binding map (see the Script Bindings chapter or Script Bindings Reference for more information about script bindings).

We have mainly two types of dynamic variables:

-

ProActive system variables, declared by the ProActive scheduler.

-

Script variables, created after adding values to the

variablesmap.

ProActive system variables

Some variables are implicitly defined by the Scheduler to retrieve runtime information about a job or a task.

Here is the list of ProActive system variables:

Variable name |

Description |

Type |

|

The current job ID. |

String |

|

The current job name. |

String |

|

The current task ID. |

String |

|

The current task name. |

String |

|

The current iteration index, when using looping, starts at 0. |

Integer |

|

The current iteration index, when using a replication, starts at 0. |

Integer |

|

The path to the progress file, used to set the task’s progress. You can import and use the utility class |

String |

|

The path to Scheduler home, where the Scheduler or the Node is installed. |

String |

|

The path to the hostfile when using a multi nodes task. |

String |

|

The number of acquired nodes when using a multi nodes task. |

Integer |

|

The username of the ProActive user who has submitted the job. |

String |

They can be used inside the workflow with the pattern syntax, for example:

<task ...>

...

<forkEnvironment workingDir="/opt/${PA_JOB_ID}"></forkEnvironment>

...

</task>Script variables

In addition to the ability to declare variables directly inside job XML definition, it is also possible to

dynamically read and write new variables while executing a task script with the variables map. This map of variables is bound to

hash type depending on the script engine you are using, for instance in native

Java as Map.

In Groovy as a global map, see below:

String var = variables.get("one_variable")

variables.put("other_variable", "foo")In the Groovy example above the first line retrieve the value of variable

one_variable from the variables map. The second line create a new entry other_variable with value foo.

The variables map is propagated down the execution chain. If a task modifies a variable in the variables map,

or add a new variable, all dependent tasks will have access to this modification.

For example:

// task1

String var = variables.get("one_variable")

variables.put("one_variable", "foo")

variables.put("other_variable", "bar")// task2 depends on task1

println variables.get("one_variable") // will display "foo"

println variables.get("other_variable") // will display "bar"If a task depends on several tasks and each task modifies the same variable, the final value of the variable which is propagated down the execution chain, depends on the order of task execution. Therefore, the users need to take appropriate measures to prevent any undesired effects such as race conditions.

System variables can also be accessed from the variables map, for example:

println variables.get("PA_JOB_ID") // will display the id of the current jobIf using a Bash shell script, variables are accessible through environment variables.

#!/bin/bash

echo $variables_PA_TASK_NAME| In native tasks and Bash/CMD script engines, variables can be read but not written to. |

For Java native script you can set any Java serializable object as a variable value. They will be converted into strings using toString() method when required, for instance, to make those values available as environment variables in native tasks.

The field variable tag has an inherited field, if this field is set as true a task variable will read

its value from the variables map instead of the value field in the XML definition.

In this case, the value field becomes optional and work as a default value.

Below a XML schema that shows two tasks: first and second. Task first insert a new

variable using variables.put("inherited_var", "somevalue").

Task second declares inherited_var

but with defaultvalue, this value will be overwritten by the first task, variables.put("inherited_var", "somevalue").

Defining a default value might be useful if for some reason the first task fails before inserting the inhereted_var

on variables map. In this last case the defaultvalue remains unchanged.

<task name="first" >

<scriptExecutable>

<script>

<code language="groovy">

<![CDATA[

variables.put("inherited_var", "somevalue")

]]>

</code>

</script>

</scriptExecutable>

</task>

...

<task name="second">

<variables>

<variable name="inherited_var" value="defaultvalue" inherited="true"/>

</variables>

<depends>

<task ref="before"/>

</depends>

...

</task>Dynamic replacements

We’ve seen in Job variables that we can use the ${varname} syntax to create and access variables.

Dynamic replacement is in charge to resolve variables just before the execution of task script, or

in case of job variables, just before submitting the job.

We can also combine recursive definitions of variables.

For example, one could write:

<job ...>

...

<variables>

<variable name="job_var" value="hello" model=""/>

<variable name="job_var2" value="${job_var}" model=""/>

<variable name="job_var3" value="${job_var2}" model=""/>

</variables>

...

</job>

The dynamic replacement will resolve job_var3 to hello just before submitting the job to the scheduler.

| We can use job variable references in task variables but not otherwise. |

When dynamic replacing a task script variable the resolution happens just before running the task. So we can

use the variables map value. The example below will print "hello world" because hello is recursively

resolved at job level assigning hello world to inherited_var. When task_2 starts its local

variable task_var2 is dynamically replaced by hello world, i.e., the content of inherited_var that was

inserted in the variables map by the previous task.

<job ... >

<variables>

<variable name="job_var" value="hello" model=""/>

<variable name="job_var2" value="${job_var}" model=""/>

</variables>

<taskFlow>

<task name="task_1" >

<scriptExecutable>

<script>

<code language="groovy">

<![CDATA[

variables.put("inherited_var", "\${job_var2} world")

]]>

</code>

</script>

</scriptExecutable>

</task>

<task name="task_2">

<variables>

<variable name="task_var2" value="${inherited_var}" inherited="false"/>

</variables>

<depends>

<task ref="task_1"/>

</depends>

<scriptExecutable>

<script>

<code language="groovy">

<![CDATA[

println ""+variables.get("task_var2")

]]>

</code>

</script>

</scriptExecutable>

</task>

</taskFlow>

</job>Note that we can combine recursive definitions of variables.

For example, one could write:

<task ...>

...

<forkEnvironment workingDir="/opt/${DIRECTORY_${PA_TASK_REPLICATION}}"></forkEnvironment>

...

</task>In that case, the variable DIRECTORY_0 or DIRECTORY_1, etc (depending on the replication index) will be used in the working dir attribute.

Pattern variable replacements may be performed at submission time or at execution time:

-

A replacement performed at execution time means that the replacement is executed only when the task enclosing the replacement is executed.

-

A replacement performed at submission time means that the replacement is directly executed when the job is submitted to the scheduler.

Replacements directly using global job or task variables will always be performed at submission time.

In the following example, the description replacement is performed at submission time:

<job ... >

<variables>

<variable name="description" value="My ProActive workflow"/>

</variables>

...

<task ... >

<description>${description}</description>

</task>

</job>Replacements using system variables, such as the workingDir example above, will always be performed at execution time.

5.7. Task result

Another way to propagate data from a task to another relies on result variable. Anywhere in a task (usually at the end) you can set a value to a reserved variable named result. This value will be available in tasks depending on it. If a task has one or several dependencies, results will always be an array.

Assuming that we have two tasks task1 and task2 written in Groovy:

// task1

result = "task1";// task2

result = "task2";and task3 that depends on tasks task1 and task2, then, you can access result values defined by the parents as follows:

// task3

println(results[0]);

// will print "task1"

println(results[1]);

// will print "task2"| results will be aggregated according to the order declared in the dependency list. Consequently, if the xml depends tag of task3 contains the list [task1, task2] (see the xml example below), then results[0] will contain the result of task1 and results[1] the result of task2. On the contrary, if the depends list is [task2, task1], then results[0] will contain the result of task2 and results[1] the result of task1. |

<depends>

<task ref="task1"/>

<task ref="task2"/>

</depends>For nearly all script languages, the results variable contains a list of TaskResult java object. In order to access the result value, the value() method of this object must be called. Example for Python/Jython:

print results[0].value()5.7.1. Assigning metadata to task result

Result metadata can contain additional information associated with the result. In order to store metadata information, use the following syntax, if task2 depends on task1:

// task1

result = "task1";

resultMetadata.put("mymetadata", "myvalue")// task2

println(results[0].getMetadata());It is up to the user code to decide the metadata semantics, but some specific metadata can have a meaning when downloading or previewing results from the Scheduler portal:

-

file.name: the name of the file, including the extension, which will be used when storing the result in binary format.

// fileNameTask file = new File("balloon13.png") result = file.getBytes() resultMetadata.put("file.name","balloon13.png") -

file.extension: the extension, which will be appended to the automatically generated name, when storing the result in binary format

// fileExtensionTask file = new File("balloon13.png") result = file.getBytes() resultMetadata.put("file.extension",".png") -

content.type: the display format, which will be used when previewing the file in the browser. Open the following link for the complete list of mime-types.

// contentTypeTask file = new File("balloon13.png") result = file.getBytes() resultMetadata.put("content.type","image/png")

A Task result can be added to the list of results of a Job, see section Result List below.

5.8. Job Results

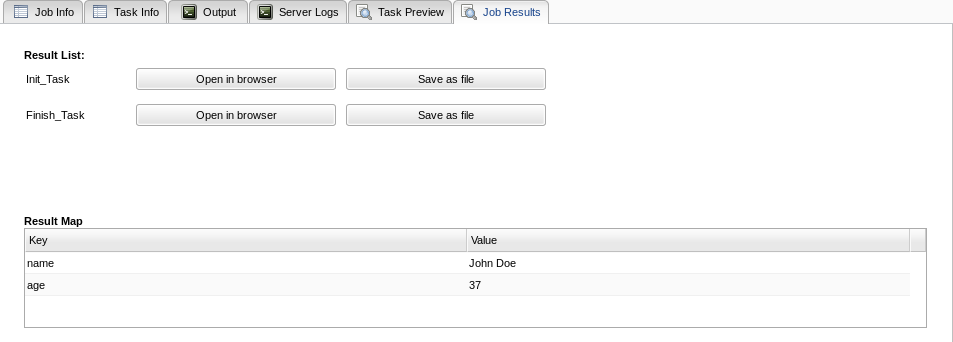

Job Results are composed of two elements: Result List and Result Map. Each task can contribute as for Result List as for Result Map. Once the job is finished, you can download/visualize Job Results from the Scheduler portal:

In the figure above, the label in Result List corresponds to the task name that generated the value.

5.8.1. Result List

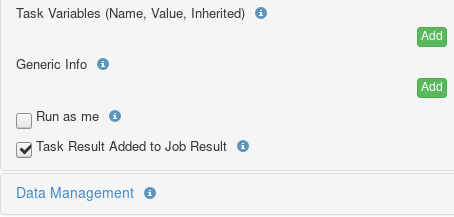

Each task has its own result (see section Task result). However, some task results can be marked as Task Result Added to Job Result. In that case, this task result will be added to the Result List. You can mark your task in the Studio:

In the Job xml schema it is available as preciousResult:

<task name="Init_Task" preciousResult="true" >5.8.2. Result Map

In addition to the Results List, a Job can also store some results into a dedicated key-value map, which is called Result Map. Every task can contribute to this Result Map. It is a write-only map, in such a way that a task cannot see what other tasks already wrote to the result map. When several tasks write the same key to the result map, the last write always takes precedence. This map contains String as a key, and Serializable as value.

Consider the following groovy example.

Task 1 writes to Result Map:

resultMap.put("filename", "results.txt")

resultMap.put("latestError", "no error")Task 2 writes to Result Map:

resultMap.put("latestError", "wrong user id")Once the job is finished, Result Map would contain:

"filename" -> "results.txt"

"latestError" -> "wrong user id"5.9. Control Flow Scripts

To perform a control flow action such as if, replicate or loop, a Control Flow Script is executed on the ProActive node. This script takes the result of the task as input; meaning a Java object if it was a Java or Script task, or nothing if it was a native task.

The script is executed on the ProActive node, just after the task’s

executable. If the executable is a Java Executable and returns a result,

the variable result will be set in the script’s environment so that

dynamic decisions can be taken with the task’s result as input. Native

Java objects can be used in a Groovy script.

loop = resultSimilarly to how parameters are passed through the result variable to the script, the script needs to define variables specific to each action to determine what action the script will lead to.

-

A replicate control flow action needs to define how many parallel runs will be executed, by defining the variable

runs:

// assuming result is a java.lang.Integer

runs = result % 4 + 1The assigned value needs be a strictly positive integer.

-

An if control flow action needs to determine whether the if or the else branch is selected, it does this by defining the boolean variable

branch:

// assuming result is a java.lang.Integer

if (result % 2) {

branch = "if"

} else {

branch = "else"

}The assigned value needs to be the string value if or else.

-

The loop control flow action requires setting the

loop, which will determine whether looping to the statically defined target is performed, or if the normal flow is executed as would a continue instruction do in a programming language:

loop = result as BooleanThe assigned value needs to be a boolean.

Failure to set the required variables or to provide a valid control flow script will not be treated gracefully and will result in the failure of the task.

5.10. Loop and Replicate awareness

When Control Flow actions such as replicate or loop are performed, some tasks are replicated. To be able to identify replicated tasks uniquely, each replicated task has an iteration index, replication index, and a tag. In addition to help to identify uniquely, these tags are useful to filter tasks by iterations for example.

5.10.1. Task name

First, those indexes are reflected inside the names of the tasks themselves. Indeed, task names must be unique inside a job. The indexes are added to the original task name as a suffix, separated by a special character.

-

If a task named T is replicated after a loop action, the newly created tasks will be named T#1, T#2, etc. The number following the # separator character represents the iteration index.

-

The same scheme is used upon replicate actions: newly created tasks are named T*1, T*2, and so on. The number following the * separator represents the replication index.|

-

When combining both of the above, the resulting task names are of the form: T#1*1, T#2*4, etc., in that precise order.

5.10.2. Task tag

Tags are assigned automatically by the scheduler when a task is created by replication from another task. They are designed to reflect the task that initiated the replication for the first time, the type of replication (loop or replicate) and the iteration index or replication indexes. So the tag is formed like this: (LOOP|REPLICATE)-Name_of_the_initiator-index.

-

If the task T1 initiates a loop that contains the tasks T2 and T3, then the tasks T2#1 and T3#1 will have the tag LOOP-T1-1. The tasks T2#2 and T3#2 will have the tag LOOP-T1-3.

-

If the loop is a cron loop, the index is replaced by the resolved time of the initiated looping. For example, in a cron loop that was initiated the 21/12/2015 at 14h00, the task T1#1 will have the tag LOOP-T1#1-21_12_15_14_00.

-

If the task T1 replicates a block that contains the tasks T2 and T3, then the tasks T2*1 and T3*1 will have the tag REPLICATE-T1-1. The tasks T2*2 and T3*2 will have the tag REPLICATE-T1-2.

-

If the task T1#1, inside a loop, replicates tasks, the new tasks will have the tags REPLICATE-T1#1-1, REPLICATE-T1#1-2, etc…

-

If the replicated task T1*1 initiates a loop inside a replicate, the new created tasks will have the tags LOOP-T1*1-1, LOOP-T1*1-2, etc…

5.10.3. Task definition

Those indexes are also available as workflow variables. They can be obtained using the variable names:

-

PA_TASK_REPLICATION

-

PA_TASK_ITERATION

Here is how to access those variables:

-

Native Executable arguments:

<staticCommand value="/path/to/bin.sh">

<arguments>

<argument value="/some/path/${PA_TASK_ITERATION}/${PA_TASK_REPLICATION}.dat" />-

Dataspace input and output:

<task name="t1" retries="2">

<inputFiles>

<files includes="foo_${PA_TASK_ITERATION}_${PA_TASK_REPLICATION}.dat" accessMode="transferFromInputSpace"/>

</inputFiles><outputFiles>

<files includes="bar_${PA_TASK_ITERATION}_${PA_TASK_REPLICATION}.res" accessMode="transferToOutputSpace"/>| Scripts affected by the variable substitutions are: Pre, Post, Control Flow. No substitution will occur in selection scripts or clean scripts. |

5.10.4. Task executable

The iteration and replication indexes are available inside the executables launched by tasks.

In script tasks, the indexes are exported through the following workflow variables:

PA_TASK_ITERATION and PA_TASK_REPLICATION.

int it = variables.get("PA_TASK_ITERATION")

int rep = variables.get("PA_TASK_REPLICATION")In a similar fashion, environment variables are set when launching a

native executable: PAS_TASK_ITERATION and PAS_TASK_REPLICATION:

#!/bin/sh

myApp.sh /path/to/file/${variables_PAS_TASK_ITERATION}.dat5.11. Example: Embarrassingly Parallel problem

An Embarrassingly Parallel problem is a problem that is easy to split into smaller independent tasks. With ProActive Scheduler you can tackle this type of problem with the Replicate construct.

| Familiar with MapReduce? Well, a workflow using replication uses similar concepts. |

The Advanced workflows is an example of an embarrassingly parallel problem where the computation is easily distributed across ProActive Nodes.

5.12. Example: MPI application